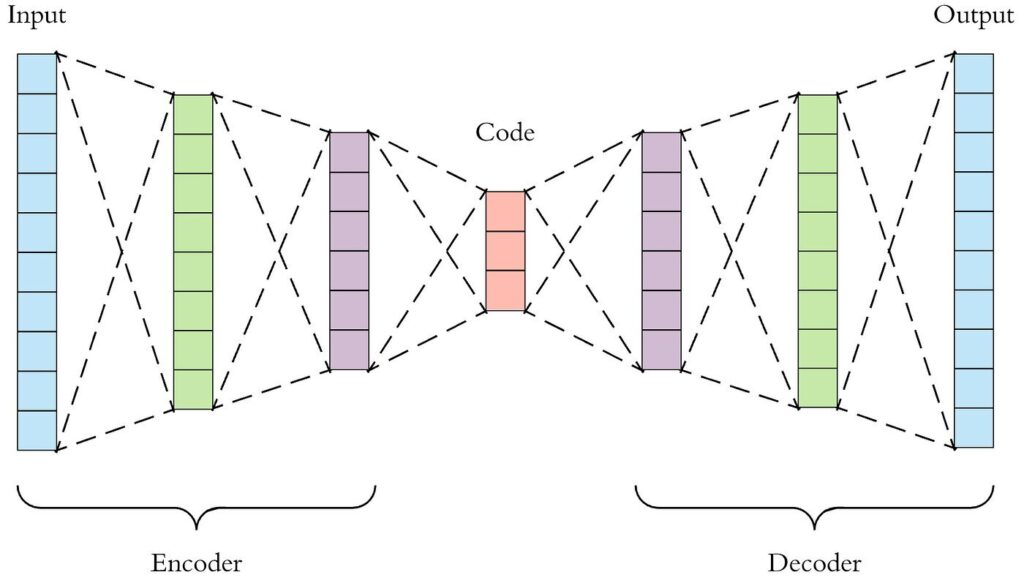

Autoencoders are a type of artificial neural network designed for unsupervised learning, primarily used for tasks such as dimensionality reduction, data denoising, and anomaly detection. They consist of two main components: the encoder and the decoder.

Structure of Autoencoders

- Encoder: This part compresses the input data into a lower-dimensional representation, known as the latent space. The encoder effectively reduces the dimensionality of the input while preserving its essential features.

- Decoder: The decoder takes the compressed representation from the encoder and reconstructs the original input data. The goal is to minimize the difference between the input and the reconstructed output, often measured by a loss function such as mean squared error or binary cross-entropy.
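The two loss functions named above can be written out in a few lines. This is a minimal NumPy sketch, not a reference implementation: the function names `mse_loss` and `bce_loss`, the `eps` clipping constant, and the toy arrays are assumptions made for illustration.

```python
import numpy as np

def mse_loss(x, x_hat):
    """Mean squared error between the input x and its reconstruction x_hat."""
    return float(np.mean((x - x_hat) ** 2))

def bce_loss(x, x_hat, eps=1e-7):
    """Binary cross-entropy; assumes x and x_hat both lie in [0, 1]."""
    # Clip to avoid log(0) when a reconstruction is exactly 0 or 1.
    x_hat = np.clip(x_hat, eps, 1.0 - eps)
    return float(-np.mean(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat)))

# Toy example: one binary input and an imperfect reconstruction.
x = np.array([[0.0, 1.0, 1.0, 0.0]])
x_hat = np.array([[0.1, 0.9, 0.8, 0.2]])

print(mse_loss(x, x_hat))
print(bce_loss(x, x_hat))
```

Mean squared error is the usual choice for real-valued inputs, while binary cross-entropy only makes sense when inputs and outputs are scaled to the range 0 to 1.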

The architecture of an autoencoder can be visualized as follows:

Input → Encoder → Latent Space → Decoder → Output
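To make the data flow concrete, here is a minimal NumPy sketch of a single forward pass through an untrained autoencoder. The layer sizes, the `tanh` activation, the random weights, and the names `encode` and `decode` are all assumptions chosen for illustration; real models typically stack several layers.

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, latent_dim = 8, 3  # hypothetical sizes; latent_dim < input_dim is the bottleneck

# Randomly initialised (untrained) weights for a one-layer encoder and decoder.
W_enc = rng.normal(scale=0.1, size=(input_dim, latent_dim))
b_enc = np.zeros(latent_dim)
W_dec = rng.normal(scale=0.1, size=(latent_dim, input_dim))
b_dec = np.zeros(input_dim)

def encode(x):
    """Input -> latent space (non-linear compression)."""
    return np.tanh(x @ W_enc + b_enc)

def decode(z):
    """Latent space -> reconstruction with the input's shape."""
    return z @ W_dec + b_dec

x = rng.normal(size=(5, input_dim))  # batch of 5 inputs
z = encode(x)
x_hat = decode(z)

print(x.shape, z.shape, x_hat.shape)  # (5, 8) (5, 3) (5, 8)
```

The shapes show the bottleneck: each 8-dimensional input passes through a 3-dimensional latent vector before being expanded back to 8 dimensions.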

Training Process

Autoencoders are trained by gradient descent, with backpropagation computing the gradient of the reconstruction error with respect to the network's weights. Each iteration adjusts the weights to reduce that error, so the output gradually becomes a closer match to the input. The presence of a bottleneck in the architecture forces the model to learn a compressed representation of the data, which can reveal underlying patterns and correlations.
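The training loop can be sketched by hand in NumPy, with the backward pass written out explicitly. Everything specific here is an assumption for illustration: the synthetic data, the single `tanh` hidden layer, the learning rate, and the step count. In practice a framework such as PyTorch or TensorFlow would compute the gradients automatically.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (hypothetical): 6-D observations driven by 2 hidden factors.
factors = rng.normal(size=(200, 2))
X = factors @ rng.normal(size=(2, 6)) + 0.05 * rng.normal(size=(200, 6))
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardise each feature

n, d = X.shape
k = 2  # bottleneck width
W1 = rng.normal(scale=0.1, size=(d, k))
b1 = np.zeros(k)
W2 = rng.normal(scale=0.1, size=(k, d))
b2 = np.zeros(d)
lr = 0.1
losses = []

for step in range(1000):
    # Forward pass: encode, decode, measure the reconstruction error (MSE).
    h = np.tanh(X @ W1 + b1)
    x_hat = h @ W2 + b2
    err = x_hat - X
    losses.append(float(np.mean(err ** 2)))

    # Backward pass: apply the chain rule from the loss back to each weight.
    g_out = 2.0 * err / (n * d)       # dLoss / dx_hat
    g_W2 = h.T @ g_out
    g_b2 = g_out.sum(axis=0)
    g_h = g_out @ W2.T
    g_pre = g_h * (1.0 - h ** 2)      # derivative of tanh
    g_W1 = X.T @ g_pre
    g_b1 = g_pre.sum(axis=0)

    # Gradient descent update.
    W1 -= lr * g_W1
    b1 -= lr * g_b1
    W2 -= lr * g_W2
    b2 -= lr * g_b2

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

Because the data is generated from two factors and the bottleneck has two units, the network has enough capacity to reconstruct the input well, and the recorded loss should fall over the course of training.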

Applications of Autoencoders

Autoencoders have a wide range of applications, including:

- Dimensionality Reduction: They can serve as a more flexible alternative to traditional techniques like Principal Component Analysis (PCA), allowing for non-linear transformations.

- Image Compression and Denoising: Autoencoders can compress images while maintaining important features, and they are also effective in removing noise from images.

- Anomaly Detection: By learning the normal patterns in data, autoencoders can identify anomalies or outliers, making them useful in fraud detection and network security.

- Data Generation: Variational Autoencoders (VAEs) are a variant that can generate new data samples similar to the training data, contributing to fields like generative modeling and creative AI applications.
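The anomaly detection idea from the list above comes down to thresholding the reconstruction error. The sketch below is illustrative only. To keep it short and deterministic, it swaps gradient training for a linear autoencoder whose weights are taken in closed form from an SVD (the optimal linear autoencoder under mean squared error spans the same subspace as PCA), so it is a stand-in for a trained non-linear network. The synthetic data, the 99th-percentile threshold, and the name `reconstruction_error` are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic "normal" data (hypothetical): 5-D points lying near a 2-D subspace.
basis = rng.normal(size=(2, 5))
normal = rng.normal(size=(500, 2)) @ basis + 0.05 * rng.normal(size=(500, 5))
mean = normal.mean(axis=0)

# Stand-in for training: the top-2 right singular vectors give the weights of
# the optimal 2-unit linear autoencoder (encoder W, decoder W.T).
_, _, vt = np.linalg.svd(normal - mean, full_matrices=False)
W = vt[:2].T  # shape (5, 2)

def reconstruction_error(x):
    """Per-sample mean squared error between x and its reconstruction."""
    z = (x - mean) @ W          # encode
    x_hat = z @ W.T + mean      # decode
    return np.mean((x - x_hat) ** 2, axis=1)

# Flag anything reconstructed worse than 99% of the normal training data.
threshold = np.percentile(reconstruction_error(normal), 99)

# Points drawn without the subspace structure play the role of anomalies.
anomalies = rng.normal(scale=3.0, size=(10, 5))
flags = reconstruction_error(anomalies) > threshold
print(flags)
```

The choice of threshold trades false alarms against missed anomalies. A percentile of the training error is one simple option, and by construction it flags about 1% of the normal data.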

Conclusion

In summary, autoencoders learn compact representations of data by reconstructing their own input, which enables efficient data representation and transformation. Their ability to learn from unlabeled data makes them valuable in domains ranging from image processing to anomaly detection. As research continues, autoencoders are likely to evolve and find further applications in artificial intelligence and machine learning.

Autoencoders are versatile and flexible, making them a critical component in the toolkit of machine learning practitioners[1][2][4][5].

Further Reading

1. An Introduction to Autoencoders | arXiv:2201.03898

2. Introduction to Autoencoders: From The Basics to Advanced Applications in PyTorch | DataCamp

3. Introduction to Autoencoders | TensorFlow Core

4. Introduction To Autoencoders. A Brief Overview | by Abhijit Roy | Towards Data Science

5. Introduction to autoencoders.

Description:

Dimensionality reduction and feature learning through unsupervised learning.

IoT Scenarios:

Data compression, anomaly detection, and feature extraction.

Anomaly Detection: Identifying unusual patterns by training on normal data and flagging inputs that the model reconstructs poorly.

Data Compression: Reducing the size of sensor data while retaining essential information.

Feature Extraction: Extracting relevant features from high-dimensional IoT data.

Noise Reduction: Filtering out noise from sensor data to improve accuracy.