BERT, which stands for Bidirectional Encoder Representations from Transformers, is a language model introduced by researchers at Google in October 2018. BERT represents a significant advancement in natural language processing (NLP) by pre-training deep bidirectional representations from unlabeled text, conditioning on both left and right context in all layers. This approach allows BERT to achieve state-of-the-art results on a variety of NLP tasks, including question answering and language inference, without requiring substantial task-specific architecture modifications[1][2].

Architecture

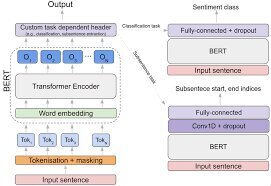

BERT employs an “encoder-only” transformer architecture, which consists of several key components:

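- Tokenizer: Converts text into a sequence of integer token IDs, using a WordPiece vocabulary of about 30,000 subword units.

- Embedding: Maps each token ID to a real-valued vector (the sum of a token, a segment, and a position embedding). This vector is far lower-dimensional than a one-hot encoding over the vocabulary.

- Encoder: A stack of transformer blocks with self-attention but without causal masking, so every token can attend to tokens on both its left and its right.

- Task head: Maps the final representation vectors back onto the vocabulary, producing a predicted probability distribution over token types. It is needed for pre-training. For downstream tasks it is usually replaced by a task-specific output layer[2][3].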
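The practical difference between BERT's encoder and a left-to-right language model lies in the attention mask. The Python sketch below computes single-head scaled dot-product attention weights with and without a causal mask. It is a simplification under stated assumptions: the query and key vectors are passed in directly rather than produced by learned projections, only the attention weights are returned (not the weighted sum of value vectors), and the function names are illustrative rather than taken from any BERT implementation.

```python
import math

def softmax(scores):
    # Subtract the maximum first for numerical stability; exp(-inf) evaluates to 0.0.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(queries, keys, causal=False):
    """Single-head scaled dot-product attention weights (illustrative sketch).

    Assumption: queries and keys are given directly (no learned projections).
    causal=False: every position attends to every position, as in BERT's encoder.
    causal=True: position i only sees positions <= i, as in a left-to-right model.
    """
    d = len(queries[0])
    weights = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                scores.append(float("-inf"))  # mask out tokens to the right
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d))
        weights.append(softmax(scores))
    return weights

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention_weights(x, x))               # bidirectional (BERT-style)
print(attention_weights(x, x, causal=True))  # left-to-right, for comparison
```

With `causal=False`, every row spreads some weight over all three positions. With `causal=True`, the first row puts all of its weight on position 0, because the first token cannot see anything to its right.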

The model comes in two sizes:

- BERT Base: 12 transformer blocks, 12 attention heads, and a hidden size of 768 (about 110 million parameters).

- BERT Large: 24 transformer blocks, 16 attention heads, and a hidden size of 1024 (about 340 million parameters)[3].
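The block count and hidden size largely determine the model's parameter count. As a rough check, the function below tallies the weights of the embedding tables, the encoder blocks, and the pooler. It assumes the configuration of the publicly released uncased checkpoints: a 30,522-entry WordPiece vocabulary, 512 positions, 2 segment types, and a feed-forward size of 4 × hidden. It also leaves out the pre-training heads. These are assumptions, not values stated above, and `bert_param_count` is a hypothetical helper.

```python
def bert_param_count(hidden, layers, vocab=30522, max_pos=512, type_vocab=2, ffn_mult=4):
    """Approximate parameter count of a BERT encoder plus pooler.

    Assumed defaults: bert-base-uncased vocabulary size, 512 positions,
    2 segment types, feed-forward size = 4 * hidden. Pre-training heads excluded.
    """
    h, f = hidden, ffn_mult * hidden
    # Token, position, and segment embedding tables, plus one LayerNorm (gain + bias).
    embeddings = (vocab + max_pos + type_vocab) * h + 2 * h
    # Q, K, V, and attention-output projections (h x h weight + bias each), plus LayerNorm.
    attention = 4 * (h * h + h) + 2 * h
    # Position-wise feed-forward network h -> f -> h, plus LayerNorm.
    ffn = (h * f + f) + (f * h + h) + 2 * h
    # Pooler: one dense layer applied to the final [CLS] vector.
    pooler = h * h + h
    return embeddings + layers * (attention + ffn) + pooler

print(bert_param_count(768, 12))   # BERT Base  -> 109482240
print(bert_param_count(1024, 24))  # BERT Large -> 335141888
```

Under these assumptions the totals are about 109.5M and 335.1M, close to the 110M and 340M reported in [1]. The number of attention heads does not appear in the formula. The heads split the hidden dimension between them rather than adding weights.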

Training

BERT is pre-trained using two tasks:

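1. Masked Language Modeling (MLM): Selects 15% of the input tokens at random, replaces 80% of those with a special [MASK] token and 10% with a random token, and leaves the remaining 10% unchanged. The model is trained to predict the original tokens from their left and right context.

2. Next Sentence Prediction (NSP): Given a pair of sentences, trains the model to predict whether the second sentence actually follows the first in the source text or was randomly sampled. Each case occurs 50% of the time[2][5].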
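Both objectives can be sketched in Python as data-preparation steps. The masking function follows the 15% selection rate and the 80/10/10 replacement split (80% [MASK], 10% random token, 10% unchanged). It selects each token independently, however, which simplifies the reference implementation, and it works on whole words rather than WordPiece subwords. The NSP helper draws its negative sentence from a different document. All function names and signatures here are hypothetical.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Simplified MLM corruption: each token is selected independently with mask_prob.

    Returns the corrupted sequence and {position: original token}, the targets
    the model would be trained to predict.
    """
    corrupted = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue
        targets[i] = tok
        r = rng.random()
        if r < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif r < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, targets

def make_nsp_example(doc, other_docs, idx, rng):
    """One NSP pair from sentence idx of doc (requires idx + 1 < len(doc))."""
    first = doc[idx]
    if rng.random() < 0.5:
        return first, doc[idx + 1], "IsNext"         # 50%: the actual next sentence
    random_doc = rng.choice(other_docs)
    return first, rng.choice(random_doc), "NotNext"  # 50%: a sentence from another document

rng = random.Random(0)
tokens = "my dog is hairy".split()
print(mask_tokens(tokens, vocab=tokens, rng=rng))
print(make_nsp_example(["s1", "s2"], [["x1", "x2"]], 0, rng))
```

Keeping 10% of the selected tokens unchanged, and swapping another 10% for random tokens, means the model cannot assume that only [MASK] positions need predicting. This matters because [MASK] never appears during fine-tuning.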

Performance

Because BERT is trained bidirectionally, it can use the context on both sides of a word. This led to substantial improvements over earlier models that read text in only one direction or combined two separately trained one-directional models. BERT achieved new state-of-the-art results on eleven NLP tasks, including pushing the GLUE score to 80.5%, MultiNLI accuracy to 86.7%, and SQuAD v1.1 question answering Test F1 to 93.2[1][4].

Applications

BERT can be fine-tuned for various downstream tasks by adding a single task-specific output layer and then updating all parameters end to end. This flexibility allows it to be applied to tasks such as question answering, sentiment analysis, and named entity recognition without extensive architecture modifications[1][3].
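For sentence-level tasks such as sentiment analysis, the added output layer can be as small as one linear map followed by a softmax over the final hidden vector of the special [CLS] token, roughly as described in [1]. The sketch below shows only that layer, using made-up numbers and a hypothetical `classify` function. In real fine-tuning, the weights `W` and `b` are learned and BERT's own parameters are updated at the same time.

```python
import math

def classify(cls_vector, weights, bias):
    """Task-specific output layer: softmax(W h + b) over the [CLS] vector h.

    weights: one row per class (num_classes x hidden); bias: one value per class.
    """
    logits = [sum(w * h for w, h in zip(row, cls_vector)) + b
              for row, b in zip(weights, bias)]
    m = max(logits)  # subtract the max before exponentiating, for stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 4-dimensional [CLS] vector and a 2-class (e.g. negative/positive) head.
h_cls = [0.5, -1.0, 0.25, 2.0]
W = [[0.1, 0.2, 0.0, -0.3],
     [-0.1, 0.0, 0.4, 0.5]]
b = [0.0, 0.1]
print(classify(h_cls, W, b))
```

Token-level tasks such as named entity recognition apply a layer of this kind to every token's final vector rather than only to [CLS].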

Conclusion

BERT’s use of bidirectional context within a transformer architecture set a new benchmark in NLP and made it a ubiquitous baseline for many language understanding tasks. Its open-source release, with pre-trained models available on platforms such as GitHub, further facilitated its widespread adoption and adaptation in the research community[2][5].

References:

1. Devlin et al. "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding." arXiv:1810.04805.

2. "BERT (language model)." Wikipedia.

3. Shweta Baranwal. "Understanding BERT." Towards AI.

4. Devlin et al. "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding." ACL Anthology.

5. "What is the BERT language model?" TechTarget.