Large Language Models (LLMs) remain a central focus of AI research, with continuous efforts to improve their performance across a range of tasks. A key challenge is understanding how pre-training data shapes a model's overall capabilities. The value of diverse data sources and computational resources is well established, but one question remains open: which properties of the data most improve general performance? Code data has emerged as a valuable component of pre-training mixtures, even for models that are not primarily used for code generation. This raises the question of how exactly code data affects non-code tasks, a subject that has remained largely unexplored despite its potential to advance LLM capabilities.

Researchers have tried various ways of improving LLM performance through data manipulation. These include analyzing the impact of data age, quality, toxicity, and domain, as well as techniques such as filtering, de-duplication, and data pruning. Some studies have also used synthetic data to improve performance and narrow the gap between open-source and proprietary models. While these approaches have yielded useful insights into general data characteristics, they have largely overlooked the specific influence of code data on tasks other than code generation.

Incorporating code into pre-training mixtures is becoming increasingly common, even for models not primarily designed for code-related applications. Previous research indicates that code data can improve LLM performance on various natural language processing tasks, such as entity linking, commonsense reasoning, and mathematical reasoning. Some studies have also reported benefits from including Python code data in low-resource pre-training settings. However, these studies have generally been limited to specific aspects or narrow evaluation setups, and they lack a holistic examination of the impact of code data across a broad range of tasks and model scales.

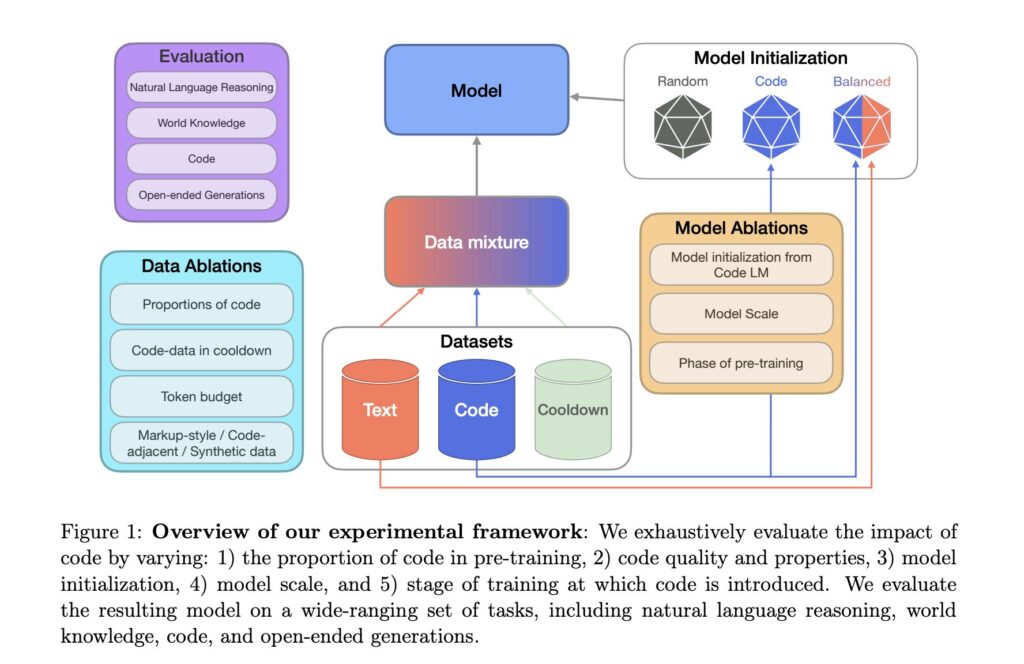

Researchers from Cohere For AI and Cohere ran a series of large-scale controlled pre-training experiments to assess the impact of code data on LLM performance. They examined several factors: when code is introduced during training, the proportion of code in the mixture, scaling effects, and the quality and properties of the code data. Despite the substantial computational cost of these ablations, the results consistently indicated that code data improves performance on non-code tasks.

Compared to text-only pre-training, the best variant with code data showed relative increases of 8.2% in natural language reasoning, 4.2% in world knowledge, and 6.6% in generative win rates, along with a 12-fold boost in code performance. Performing cooldown with code brought further gains: a 3.7% improvement in natural language reasoning, a 6.8% increase in world knowledge, and a 20% boost in code performance, relative to cooldown without code.
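Because all of these figures are relative rather than absolute changes, it may help to see the arithmetic spelled out. The sketch below is only an illustration: the function name `relative_change` and the benchmark scores are hypothetical, chosen so that the result matches the 8.2% figure, and they are not taken from the paper.

```python
def relative_change(baseline: float, new: float) -> float:
    """Return the change of `new` over `baseline`, as a percentage of `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (new - baseline) / baseline * 100.0


# Hypothetical scores, chosen only to illustrate the arithmetic.
text_only_score = 50.0
with_code_score = 54.1

gain = relative_change(text_only_score, with_code_score)
print(f"{gain:.1f}% relative increase")

# Under this definition, a 12-fold boost (new score = 12x baseline)
# is a relative increase of 1100%.
print(f"{relative_change(1.0, 12.0):.0f}% relative increase")
```

Note that a 4.1-point absolute gain reads as 8.2% here only because the assumed baseline is 50; the same absolute gain on a different baseline gives a different relative figure.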

Several factors proved important: tuning the proportion of code, improving code quality by adding synthetic code and code-adjacent data, and using code at multiple training stages, including the cooldown phase. The evaluation covered a wide range of benchmarks spanning world knowledge, natural language reasoning, code generation, and LLM-as-a-judge win rates, on models ranging from 470 million to 2.8 billion parameters.

To evaluate the impact of code on LLM performance, the study used SlimPajama, a high-quality text dataset, as the primary source of natural language data, with code-related content filtered out. For code data, the researchers drew on multiple sources to investigate different properties:

- Web-based Code Data: Derived from the Stack dataset, focusing on the top 25 programming languages.

- Markdown Data: Including markup-style languages like Markdown, CSS, and HTML.

- Synthetic Code Data: A proprietary dataset featuring formally verified Python programming problems.

- Code-Adjacent Data: Encompassing GitHub commits, Jupyter notebooks, and StackExchange threads.
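As a rough illustration of how sources like these could be combined into one pre-training mixture, the sketch below draws the source of each training document according to fixed weights. The weights, the source keys, and the helper `sample_sources` are all hypothetical; the article does not state the proportions the researchers used.

```python
import random
from collections import Counter

# Hypothetical mixture weights (must sum to 1); not taken from the study.
MIXTURE = {
    "text_slimpajama": 0.75,
    "web_code": 0.15,
    "markup": 0.04,
    "synthetic_code": 0.01,
    "code_adjacent": 0.05,
}


def sample_sources(mixture: dict, n: int, seed: int = 0) -> list:
    """Draw the source name for each of `n` documents according to `mixture`."""
    total = sum(mixture.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"mixture weights must sum to 1, got {total}")
    rng = random.Random(seed)  # seeded for reproducibility
    names = list(mixture)
    weights = [mixture[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


if __name__ == "__main__":
    draws = sample_sources(MIXTURE, n=10_000)
    for name, count in Counter(draws).most_common():
        print(f"{name:16s} {count / len(draws):.3f}")
```

Changing the weights in `MIXTURE` is the kind of knob an ablation on code proportion would vary, with everything else held fixed.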

The training regimen consisted of two phases: continued pre-training and cooldown. Continued pre-training involved training a model initialized from a pre-trained model for a fixed token budget. The cooldown phase aimed to further refine model quality by up-weighting high-quality datasets and gradually reducing the learning rate during the final training stages.
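The two ingredients of cooldown described above, a decaying learning rate and up-weighted high-quality data, can be sketched as follows. This is a minimal illustration under stated assumptions: the linear decay shape, the function names `cooldown_lr` and `upweight`, and all numeric values are hypothetical and may differ from the schedule the researchers actually used.

```python
def cooldown_lr(step: int, cooldown_start: int, total_steps: int,
                peak_lr: float, final_lr: float = 0.0) -> float:
    """Hold `peak_lr` until `cooldown_start`, then decay linearly to `final_lr`."""
    if not 0 <= cooldown_start < total_steps:
        raise ValueError("need 0 <= cooldown_start < total_steps")
    if step < cooldown_start:
        return peak_lr
    if step >= total_steps:
        return final_lr
    progress = (step - cooldown_start) / (total_steps - cooldown_start)
    return peak_lr + (final_lr - peak_lr) * progress


def upweight(mixture: dict, boosts: dict) -> dict:
    """Multiply selected source weights by a boost factor, then renormalize to 1."""
    raw = {name: w * boosts.get(name, 1.0) for name, w in mixture.items()}
    total = sum(raw.values())
    return {name: w / total for name, w in raw.items()}


if __name__ == "__main__":
    # Hypothetical run: 100 steps, with cooldown over the final 20.
    for step in (0, 80, 90, 100):
        print(step, cooldown_lr(step, cooldown_start=80, total_steps=100, peak_lr=1e-3))
    # Hypothetical cooldown mixture that triples the weight of a high-quality source.
    print(upweight({"text": 0.5, "high_quality_code": 0.5}, {"high_quality_code": 3.0}))
```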

The evaluation suite measured performance across several domains, including world knowledge, natural language reasoning, and code generation. Generative performance was also assessed using LLM-as-a-judge win rates. Together, these evaluations gave a systematic view of the broader impact of code on LLM performance.

The study found substantial effects of code data on LLM performance across a variety of tasks. For natural language reasoning, models initialized with code data performed best. The code-initialized text model (code→text) and the balanced-initialized text model (balanced→text) outperformed the text-only baseline by 8.8% and 8.2%, respectively. The balanced-only model also showed a 3.2% improvement over the baseline, indicating that mixing code into pre-training benefits natural language reasoning tasks.

In world knowledge tasks, the balanced→text model delivered the best results, surpassing the code→text model by 21% and the text-only model by 4.1%. This suggests that world knowledge tasks benefit from a balanced data mix for initialization and a larger proportion of text during continued pre-training.

For code generation tasks, the balanced-only model performed best, improving on the balanced→text and code→text models by 46.7% and 54.5%, respectively, though at the cost of lower performance on natural language tasks.

Generative quality, measured by win rates, also improved with the inclusion of code data. Both the code→text and balanced-only models surpassed the text-only variant by a margin of 6.6% in win-loss rates, indicating better generative performance even on non-code evaluations.
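For readers unfamiliar with win rates, the sketch below shows one simple way to turn pairwise judge verdicts into win, loss, and tie rates and a net win-loss margin. It is an assumption-laden illustration: the `win_rates` helper, the verdict labels, and the example counts are hypothetical, and the article does not specify how the paper handles ties or aggregates judgments.

```python
from collections import Counter

VALID_VERDICTS = {"win", "loss", "tie"}


def win_rates(judgments: list) -> dict:
    """Summarize per-prompt verdicts for a candidate model against a baseline."""
    if not judgments:
        raise ValueError("need at least one judgment")
    counts = Counter(judgments)
    unknown = set(counts) - VALID_VERDICTS
    if unknown:
        raise ValueError(f"unknown verdicts: {sorted(unknown)}")
    total = len(judgments)
    rates = {verdict: counts[verdict] / total for verdict in sorted(VALID_VERDICTS)}
    # Net margin: fraction of wins minus fraction of losses.
    rates["win_minus_loss"] = rates["win"] - rates["loss"]
    return rates


if __name__ == "__main__":
    # Hypothetical verdicts from a judge model over 100 prompts.
    verdicts = ["win"] * 48 + ["loss"] * 40 + ["tie"] * 12
    print(win_rates(verdicts))
```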

These results indicate that including code data in pre-training improves not only reasoning ability but also the overall quality of generated content across diverse tasks.

The study clarifies the role of code data in improving LLM performance across a wide range of tasks. The researchers looked beyond code-related applications to natural language performance and generative quality. Their ablations examined initialization strategies, code proportions, code quality and properties, and the role of code in pre-training cooldown.

Key conclusions from the study demonstrate:

- Code data considerably improves non-code task performance. The best variant with code data showed relative gains of 8.2% in natural language reasoning, 4.2% in world knowledge, and 6.6% in generative win rates compared to text-only pre-training. Code performance increased 12-fold with the inclusion of code data.

- Cooldown with code further improved performance, raising natural language reasoning by 3.6%, world knowledge by 10.1%, and code performance by 20% compared to pre-cooldown models, alongside a 52.3% increase in generative win rates.

- Adding high-quality synthetic code data, even in small quantities, had a notably positive impact, improving natural language reasoning by 9% and code performance by 44.9%.

These findings indicate that incorporating code data in LLM pre-training yields significant improvements across diverse tasks, extending well beyond code-specific applications. The study highlights the role of code data in strengthening LLM capabilities and offers practical guidance for future model development and training strategies.

For those interested in a deeper dive, the published paper offers a comprehensive look at this research. Credit goes to the researchers behind this project.