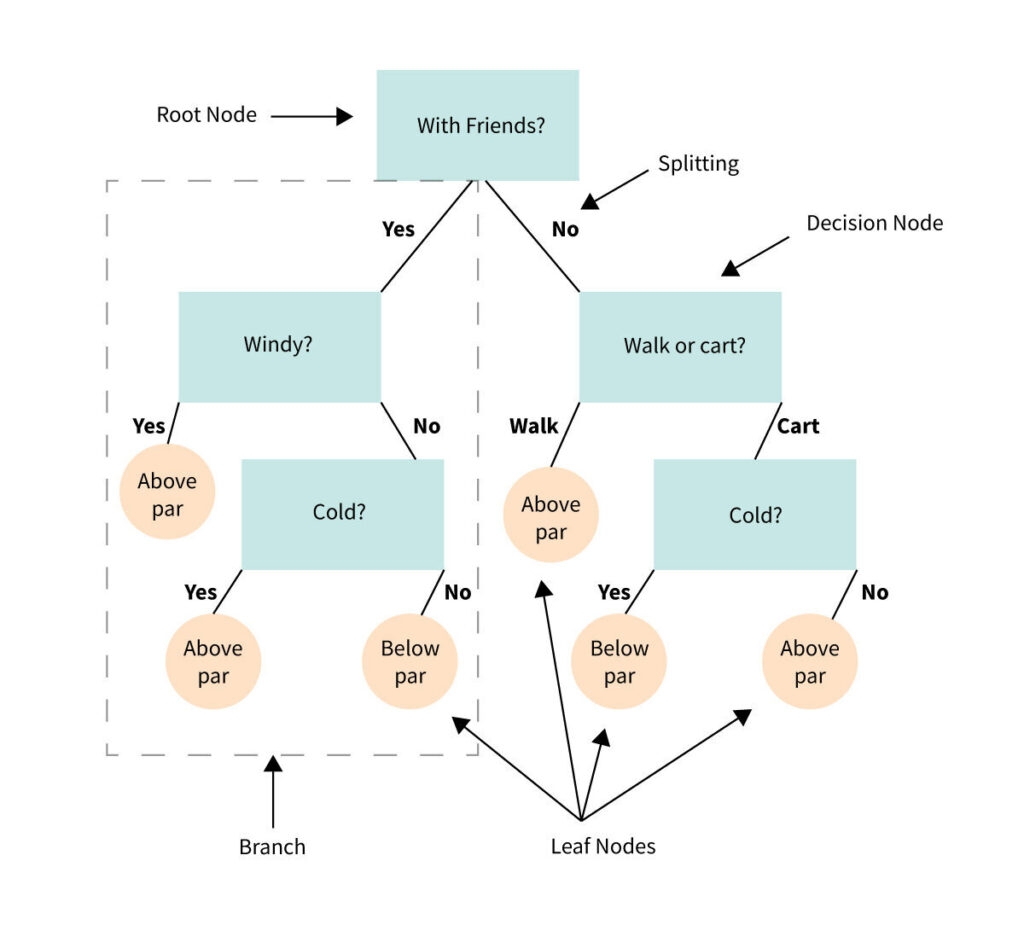

Decision Trees are versatile machine learning algorithms used for both classification and regression tasks[1][2]. They work by recursively partitioning data based on feature values, creating a tree-like structure where each internal node represents a decision based on an attribute, and each leaf node corresponds to a class label or predicted value[1][4].
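To make the node-and-leaf structure concrete, here is a minimal Python sketch. It stores a tree as nested dictionaries and predicts by walking from the root to a leaf. The tree, its feature names (`temperature`, `humidity`), its thresholds, and its labels are hypothetical. They are written by hand for illustration, not learned from data.

```python
# A hypothetical, hand-built tree for illustration only: each internal node tests
# one feature against a threshold, and each leaf stores a class label.
tree = {
    "feature": "temperature",
    "threshold": 30.0,
    "left": {"label": "normal"},          # temperature <= 30.0
    "right": {
        "feature": "humidity",
        "threshold": 70.0,
        "left": {"label": "normal"},      # temperature > 30.0, humidity <= 70.0
        "right": {"label": "alert"},      # temperature > 30.0, humidity > 70.0
    },
}


def predict(node, sample):
    """Follow the decisions from the root until a leaf is reached."""
    while "label" not in node:
        # Go left when the feature value is at or below the threshold.
        if sample[node["feature"]] <= node["threshold"]:
            node = node["left"]
        else:
            node = node["right"]
    return node["label"]


print(predict(tree, {"temperature": 25.0, "humidity": 80.0}))  # normal
print(predict(tree, {"temperature": 35.0, "humidity": 80.0}))  # alert
```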

The algorithm selects the best attribute to split the data at each node using criteria such as information gain, Gini impurity, or chi-square tests[1][3]. This process repeats recursively on each resulting subset until a stopping criterion is met, such as reaching a maximum depth or a node containing fewer than a required minimum number of instances[1].
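The split selection and stopping logic can be sketched in plain Python. This is an illustrative CART-style sketch, not a reference implementation, and it makes several simplifying assumptions:

- features are numeric;
- every split is a binary `<=` threshold test, with thresholds taken from observed values rather than midpoints;
- splits are chosen by Gini impurity;
- `max_depth` and `min_samples_leaf` serve as the stopping rules.

The helper names are hypothetical, and the chi-square criterion is not shown. Passing `impurity=entropy` to `impurity_decrease` yields the classic information gain.

```python
import math
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum_k p_k^2 over the class proportions p_k."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: -sum_k p_k * log2(p_k)."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def impurity_decrease(parent, left, right, impurity=entropy):
    """Parent impurity minus size-weighted child impurity (information gain with entropy)."""
    n = len(parent)
    weighted = (len(left) / n) * impurity(left) + (len(right) / n) * impurity(right)
    return impurity(parent) - weighted


def best_split(X, y, impurity=gini, min_samples_leaf=1):
    """Try every (feature, threshold) pair and keep the largest impurity decrease."""
    best = None  # (decrease, feature_index, threshold)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [label for row, label in zip(X, y) if row[j] <= t]
            right = [label for row, label in zip(X, y) if row[j] > t]
            # Enforce the minimum leaf size (with min_samples_leaf >= 1 this
            # also rules out empty children).
            if len(left) < min_samples_leaf or len(right) < min_samples_leaf:
                continue
            gain = impurity_decrease(y, left, right, impurity)
            if best is None or gain > best[0]:
                best = (gain, j, t)
    return best


def build_tree(X, y, depth=0, max_depth=3, min_samples_leaf=1):
    """Recursively partition (X, y) until a stopping criterion is met."""
    majority = Counter(y).most_common(1)[0][0]
    # Stopping criteria: the node is pure or the maximum depth is reached.
    if len(set(y)) == 1 or depth >= max_depth:
        return {"label": majority}
    split = best_split(X, y, gini, min_samples_leaf)
    # Also stop if no split satisfies min_samples_leaf or none reduces impurity.
    if split is None or split[0] <= 0.0:
        return {"label": majority}
    _, j, t = split
    go_left = [row[j] <= t for row in X]
    left_X = [row for row, g in zip(X, go_left) if g]
    left_y = [label for label, g in zip(y, go_left) if g]
    right_X = [row for row, g in zip(X, go_left) if not g]
    right_y = [label for label, g in zip(y, go_left) if not g]
    return {
        "feature": j,
        "threshold": t,
        "left": build_tree(left_X, left_y, depth + 1, max_depth, min_samples_leaf),
        "right": build_tree(right_X, right_y, depth + 1, max_depth, min_samples_leaf),
    }


X = [[2.0, 1.0], [3.0, 1.5], [6.0, 2.0], [7.0, 3.0]]
y = ["a", "a", "b", "b"]
print(build_tree(X, y))
# {'feature': 0, 'threshold': 3.0, 'left': {'label': 'a'}, 'right': {'label': 'b'}}
```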

Decision Trees offer several advantages:

- Easy interpretation: Their hierarchical structure provides clear insights into the decision-making process[2][4].

- Minimal data preparation: They can handle both numerical and categorical data without extensive preprocessing[2].

- Flexibility: Decision Trees can be used for both classification and regression tasks[2].
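The interpretability and the classification/regression flexibility can both be seen with scikit-learn. The sketch below assumes scikit-learn and NumPy are installed.

- It prints the learned rules of a shallow classifier on the bundled Iris dataset with `export_text`.
- It fits a `DecisionTreeRegressor` to samples of a sine curve.

The depths and the sine data are arbitrary illustrative choices.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor, export_text

# Classification: a shallow tree on the Iris dataset bundled with scikit-learn.
iris = load_iris()
clf = DecisionTreeClassifier(max_depth=2, random_state=0)
clf.fit(iris.data, iris.target)

# export_text renders the learned splits as indented, human-readable rules.
rules = export_text(clf, feature_names=list(iris.feature_names))
print(rules)

# Regression: same structure, but each leaf predicts the mean target of its samples.
rng = np.random.default_rng(0)
X_reg = np.sort(rng.uniform(0, 5, size=(80, 1)), axis=0)
y_reg = np.sin(X_reg).ravel()
reg = DecisionTreeRegressor(max_depth=3, random_state=0)
reg.fit(X_reg, y_reg)
preds = reg.predict([[1.5], [4.0]])
print(preds)
```

Each line of `rules` is a threshold test on one feature, so the fitted model can be read as a set of nested if/else statements.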

However, they also have some limitations:

- Overfitting: Deep, unconstrained trees can fit noise in the training data and therefore generalize poorly to new data[1][2].

- Instability: Small changes in the data can lead to significant changes in the tree structure[4].
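Both limitations are easy to reproduce. This sketch assumes scikit-learn and proceeds in three steps:

1. It generates synthetic data with `make_classification`, using `flip_y=0.1` label noise (an arbitrary choice).
2. It compares an unrestricted tree with one capped at `max_depth=3`.
3. It refits after dropping 10 training rows and prints the root split of each tree.

Exact scores, and whether the root split changes, depend on the data and the random seed.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data; flip_y=0.1 randomly reassigns about 10% of labels, adding noise.
X, y = make_classification(n_samples=500, n_features=10, n_informative=4,
                           flip_y=0.1, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42)

# Overfitting: compare an unrestricted tree with a depth-limited one.
results = {}
for depth in (None, 3):
    model = DecisionTreeClassifier(max_depth=depth, random_state=42)
    model.fit(X_train, y_train)
    results[depth] = (model.score(X_train, y_train),
                      model.score(X_test, y_test),
                      model.get_n_leaves())
    print(f"max_depth={depth}: train={results[depth][0]:.2f}, "
          f"test={results[depth][1]:.2f}, leaves={results[depth][2]}")

# Instability: refit after dropping 10 training rows and inspect each root split.
full = DecisionTreeClassifier(random_state=42).fit(X_train, y_train)
subset = DecisionTreeClassifier(random_state=42).fit(X_train[10:], y_train[10:])
for name, t in (("full", full), ("subset", subset)):
    # tree_.feature[0] and tree_.threshold[0] describe the root node's test.
    print(f"{name}: root splits on feature {t.tree_.feature[0]} "
          f"at {t.tree_.threshold[0]:.3f}")
```

The unrestricted tree typically reaches perfect training accuracy by carving out many small leaves, which is exactly the pattern that fails to generalize.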

To mitigate these issues, techniques such as pruning and ensemble methods like Random Forests are often employed[1][2].
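Both mitigations are available in scikit-learn. The sketch below uses scikit-learn's bundled breast cancer dataset and takes minimal cost-complexity pruning as one concrete form of pruning. It relies on the `cost_complexity_pruning_path` method and the `ccp_alpha` parameter, picks the pruning strength by 5-fold cross-validation, and compares the result with a `RandomForestClassifier`. For brevity, it selects the alpha on the same data it reports on, so a held-out test set would give a less optimistic estimate.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)

# Pruning: compute the effective alphas of minimal cost-complexity pruning.
path = DecisionTreeClassifier(random_state=0).cost_complexity_pruning_path(X, y)
# Drop the largest alpha (it prunes the tree to a single node) and clip any
# tiny negative values caused by floating-point round-off.
alphas = np.maximum(path.ccp_alphas[:-1], 0.0)

# Choose the alpha with the best mean 5-fold cross-validated accuracy.
cv_scores = [
    cross_val_score(DecisionTreeClassifier(ccp_alpha=a, random_state=0),
                    X, y, cv=5).mean()
    for a in alphas
]
best_alpha = alphas[int(np.argmax(cv_scores))]

full = DecisionTreeClassifier(random_state=0).fit(X, y)
pruned = DecisionTreeClassifier(ccp_alpha=best_alpha, random_state=0).fit(X, y)
print(f"leaves: unpruned={full.get_n_leaves()}, pruned={pruned.get_n_leaves()}")
print(f"pruned tree CV accuracy: {max(cv_scores):.3f}")

# Ensemble: a random forest combines many trees, each grown on a bootstrap
# sample with a random subset of features considered at each split.
forest = RandomForestClassifier(n_estimators=100, random_state=0)
forest_score = cross_val_score(forest, X, y, cv=5).mean()
print(f"random forest CV accuracy: {forest_score:.3f}")
```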

Decision Trees are widely used in various applications, including:

- Data mining and knowledge discovery

- Financial analysis and risk assessment

- Medical diagnosis

- Customer segmentation

In practice, Decision Trees are usually built with popular machine learning libraries such as scikit-learn in Python[3][4]. These implementations expose hyperparameters that control tree growth and help prevent overfitting, such as the maximum depth, the minimum number of samples per leaf, and the minimum number of samples required to split a node (`max_depth`, `min_samples_leaf`, and `min_samples_split` in scikit-learn)[4].
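A common way to tune these three hyperparameters together is a cross-validated grid search. In this sketch, the grid values are arbitrary illustrative choices, and the wine dataset bundled with scikit-learn stands in for real data.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_wine(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

param_grid = {
    "max_depth": [2, 3, 5, None],       # maximum depth of the tree
    "min_samples_leaf": [1, 5, 10],     # minimum samples required in a leaf
    "min_samples_split": [2, 10, 20],   # minimum samples required to split a node
}
search = GridSearchCV(DecisionTreeClassifier(random_state=0), param_grid, cv=5)
search.fit(X_train, y_train)  # refits the best configuration on all training data

test_accuracy = search.score(X_test, y_test)
print(search.best_params_)
print(f"test accuracy: {test_accuracy:.3f}")
```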

Despite their limitations, Decision Trees remain a fundamental algorithm in machine learning due to their interpretability and versatility. They serve as building blocks for more advanced ensemble methods and continue to be valuable tools for data analysis and prediction tasks[1][2][4].

Further Reading

1. Decision Tree in Machine Learning | GeeksforGeeks

2. What is a Decision Tree? | IBM

3. Decision Trees in Machine Learning: Two Types (+ Examples) | Coursera

4. A Complete Guide to Decision Trees | Paperspace Blog

5. Decision Tree | GeeksforGeeks

Description:

Performs classification and regression tasks using a tree-like model of decisions.

IoT Scenarios:

Fault detection, decision-making systems, and feature importance analysis.

- Fault Diagnosis: Identifying and diagnosing issues based on sensor data.

- Decision Support Systems: Providing actionable insights based on historical data.

- Quality Assurance: Ensuring product quality through decision-making models.

- Energy Usage Optimization: Making decisions to optimize energy consumption based on data.
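For the fault diagnosis scenario, a sketch might look like the following. Everything about the data is an assumption invented for illustration: the sensor names, units, distributions, and the fault rule (high vibration combined with high temperature). The fitted tree's `feature_importances_` then indicate which sensors drive the predictions, which corresponds to the feature importance analysis listed above.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier, export_text

# Synthetic, hypothetical sensor readings; nothing here comes from a real device.
rng = np.random.default_rng(7)
n = 1000
temperature = rng.normal(60, 10, n)   # assumed unit: degrees C
vibration = rng.normal(2.0, 0.8, n)   # assumed unit: mm/s RMS
current = rng.normal(10, 2, n)        # assumed unit: amperes
# Assumed ground truth: a fault occurs when vibration and temperature are both high.
fault = ((vibration > 2.8) & (temperature > 65)).astype(int)

X = np.column_stack([temperature, vibration, current])
names = ["temperature", "vibration", "current"]
X_train, X_test, y_train, y_test = train_test_split(
    X, fault, test_size=0.3, random_state=7, stratify=fault)

model = DecisionTreeClassifier(max_depth=3, random_state=7).fit(X_train, y_train)
print(export_text(model, feature_names=names))

# Impurity-based importances; they sum to 1 across features.
for name, importance in zip(names, model.feature_importances_):
    print(f"{name}: {importance:.2f}")

test_accuracy = model.score(X_test, y_test)
print(f"test accuracy: {test_accuracy:.3f}")
```

On data like this, `current` plays no role in the assumed fault rule, so its importance should come out low. Real sensor data would rarely separate this cleanly.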