Ensemble methods are powerful techniques in machine learning that enhance predictive performance by combining multiple models. This approach often yields better results than any single model could achieve alone. The rationale behind ensemble methods is that by aggregating the predictions from various models, the overall accuracy can be improved, particularly when the individual models have different strengths and weaknesses.

Categories of Ensemble Methods

Ensemble methods can be broadly classified into two main categories: sequential and parallel ensemble techniques.

Sequential Ensemble Techniques

In sequential ensemble methods, models are trained one after another, and each model attempts to correct the errors made by its predecessors. A prominent example of this approach is Boosting. Boosting algorithms, such as AdaBoost and Gradient Boosting, train weak learners iteratively: AdaBoost increases the weights of the examples that earlier learners misclassified, while Gradient Boosting fits each new learner to the residual errors of the current ensemble. Combining the weak learners in this way yields a strong predictive model, primarily by reducing bias[1][2][3].
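The reweighting loop described above can be sketched in a few lines. This is a minimal AdaBoost-style illustration, assuming 1-D inputs, labels in {-1, +1}, and threshold "stumps" as the weak learners. The function names and the toy data are hypothetical and chosen only for this sketch.

```python
import math

def train_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best 1-D stump."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            preds = [polarity if x >= threshold else -polarity for x in xs]
            # Weighted error: total weight of the misclassified examples.
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best

def stump_predict(x, threshold, polarity):
    return polarity if x >= threshold else -polarity

def adaboost_fit(xs, ys, n_rounds=3):
    n = len(xs)
    weights = [1.0 / n] * n          # start with uniform example weights
    ensemble = []
    for _ in range(n_rounds):
        err, threshold, polarity = train_stump(xs, ys, weights)
        err = min(max(err, 1e-10), 1 - 1e-10)   # avoid log(0) and division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # vote strength of this stump
        ensemble.append((alpha, threshold, polarity))
        # Increase the weight of misclassified examples, decrease the rest.
        weights = [
            w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
            for w, x, y in zip(weights, xs, ys)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data that no single threshold can separate.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost_fit(xs, ys, n_rounds=3)
predictions = [adaboost_predict(ensemble, x) for x in xs]
print(sum(p == y for p, y in zip(predictions, ys)), "of", len(ys), "training labels matched")
```

Each stump alone is a weak classifier on this data. The weighted vote is what lets the ensemble fit a pattern that needs several thresholds.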

Parallel Ensemble Techniques

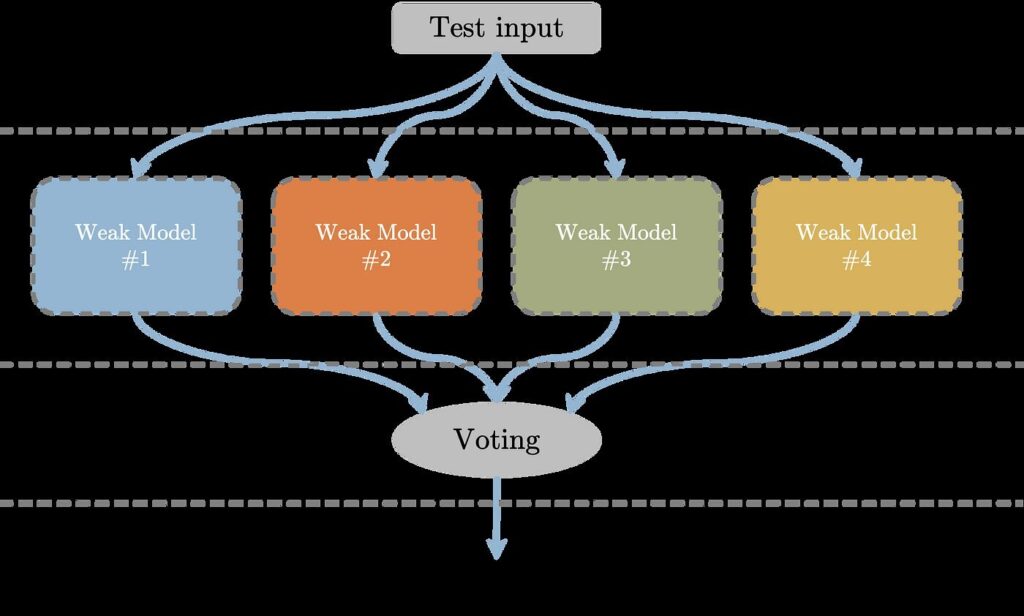

Parallel ensemble methods, on the other hand, train multiple models independently of one another, so they can be built at the same time. A well-known example is Bagging (Bootstrap Aggregating), which trains each model on a bootstrap sample of the training data, that is, a sample drawn with replacement. The final prediction is made by averaging the predictions of all models (for regression) or by majority voting (for classification). Bagging reduces variance and limits overfitting, making it particularly effective for unstable models like decision trees[1][3][4].
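As a rough sketch of the procedure just described, the following trains several models on bootstrap samples and combines them by majority vote. The 1-nearest-neighbour base learner, the function names, and the toy data are assumptions made for illustration. The source mentions decision trees, which would slot into the same structure.

```python
import random
from collections import Counter

def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]

def fit_1nn(sample):
    """Hypothetical base learner: 1-nearest-neighbour classifier on 1-D points."""
    def predict(x):
        nearest = min(sample, key=lambda pair: abs(pair[0] - x))
        return nearest[1]
    return predict

def majority_vote(labels):
    """Return the most frequent label among the base-model predictions."""
    return Counter(labels).most_common(1)[0][0]

def bagging_fit(data, n_models=25, seed=0):
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    # Each model is trained independently on its own bootstrap sample.
    return [fit_1nn(bootstrap_sample(data, rng)) for _ in range(n_models)]

def bagging_predict(models, x):
    return majority_vote([model(x) for model in models])

# Toy data: class 0 on the left, class 1 on the right.
train = [(x, 0) for x in range(5)] + [(x, 1) for x in range(10, 15)]
models = bagging_fit(train)
print(bagging_predict(models, 1.5), bagging_predict(models, 12.5))
```

For regression, `majority_vote` would be replaced by the mean of the base-model predictions.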

Common Ensemble Techniques

- Bagging: This technique creates multiple versions of a predictor by sampling the training set with replacement. Each model is trained independently, and their predictions are aggregated to form a final output. Bagging is particularly effective in reducing variance and is commonly used with decision trees[1][5].

- Boosting: Boosting combines weak learners sequentially, where each new model is trained to correct the errors of the previous ones. By emphasizing the instances that earlier models got wrong, the ensemble concentrates on difficult cases. Popular boosting algorithms include AdaBoost, Gradient Boosting, and XGBoost[1][2][4].

- Stacking: Also known as stacked generalization, this method involves training multiple base models and then training another model (a meta-learner) to learn how best to combine their predictions. Stacking can combine diverse types of models, making it a flexible and powerful ensemble technique[2][3].
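Bagging and boosting combine predictions with a fixed rule, while stacking learns the combination. The sketch below is a simplified variant, assuming a single hold-out split (sometimes called blending) rather than the cross-validated predictions often used in practice. The two base learners, the grid-search meta-learner, and all names are hypothetical choices for illustration.

```python
def fit_linear(points):
    """Base learner 1: least-squares line through 1-D (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in points)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = cov_xy / var_x if var_x else 0.0
    return lambda x: mean_y + slope * (x - mean_x)

def fit_1nn(points):
    """Base learner 2: 1-nearest-neighbour regressor."""
    return lambda x: min(points, key=lambda p: abs(p[0] - x))[1]

def fit_meta(first, second, holdout):
    """Meta-learner: pick the blend weight w in [0, 1] with the lowest
    squared error on predictions for data the base models did not see."""
    def sse(w):
        return sum((w * first(x) + (1 - w) * second(x) - y) ** 2 for x, y in holdout)
    return min((i / 20 for i in range(21)), key=sse)

def stacking_fit(points):
    # Base models train on one half; the meta-learner trains on the other.
    base_train, holdout = points[0::2], points[1::2]
    first, second = fit_linear(base_train), fit_1nn(base_train)
    w = fit_meta(first, second, holdout)
    def predict(x):
        return w * first(x) + (1 - w) * second(x)
    return predict, w

# Toy data on a straight line: the meta-learner should favour the linear model.
points = [(x, 2 * x + 1) for x in range(10)]
model, weight = stacking_fit(points)
print("weight on linear model:", weight, "prediction at x=10:", model(10))
```

Fitting the meta-learner on held-out predictions matters: if it saw the base models' training predictions, it would tend to reward whichever base model overfits most.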

Advantages of Ensemble Methods

Ensemble methods provide several advantages:

- Improved Accuracy: By combining multiple models, ensemble methods can achieve higher accuracy than individual models, particularly in complex datasets.

- Reduced Overfitting: Techniques like bagging help mitigate overfitting by averaging predictions, thus stabilizing the model’s performance.

- Robustness: Ensembles tend to be more robust to noise and outliers in the data, as the aggregation process can smooth out individual model errors[3][4].
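The stabilizing effect of averaging can be made precise under a simplifying assumption that the text above does not state: suppose the $M$ models' predictions $f_1, \dots, f_M$ each have variance $\sigma^2$ and every pair has correlation $\rho$. The variance of their average is then

$$
\operatorname{Var}\!\left(\frac{1}{M}\sum_{m=1}^{M} f_m\right) = \rho\,\sigma^2 + \frac{1-\rho}{M}\,\sigma^2 .
$$

The second term shrinks as $M$ grows, while the first does not. Averaging therefore helps most when the individual models make weakly correlated errors, which is consistent with the observation that ensembles benefit from models with different strengths and weaknesses.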

In summary, ensemble methods are a fundamental aspect of modern machine learning, leveraging the strengths of multiple models to enhance predictive performance and reliability. Their ability to reduce variance and improve accuracy makes them a popular choice in various applications, from classification tasks to regression problems.

Further Reading

1. Ensemble Methods – Overview, Categories, Main Types

2. A Gentle Introduction to Ensemble Learning Algorithms – MachineLearningMastery.com

3. Ensemble Methods in Machine Learning | Toptal®

4. Introduction to Ensemble Methods | Dr. Roi Yehoshua | Towards AI

5. Introduction to Bagging and Ensemble Methods | Paperspace Blog

Description:

Combining multiple models to improve prediction accuracy and robustness.

IoT Scenarios:

Classification, regression, and ensemble-based anomaly detection.

Classification and Regression: Improving accuracy and robustness of predictions from sensor data.

Anomaly Detection: Combining multiple models to enhance anomaly detection capabilities.

Predictive Analytics: Enhancing predictive modeling for various IoT applications.

Decision Making: Aggregating multiple decision-making models for better outcomes.