Gated Recurrent Units (GRUs) are a type of recurrent neural network architecture introduced in 2014 by Kyunghyun Cho et al. as a simpler alternative to Long Short-Term Memory (LSTM) networks[1][2]. GRUs were designed to mitigate the vanishing gradient problem, which makes it difficult for standard RNNs to learn dependencies across long sequences[2].

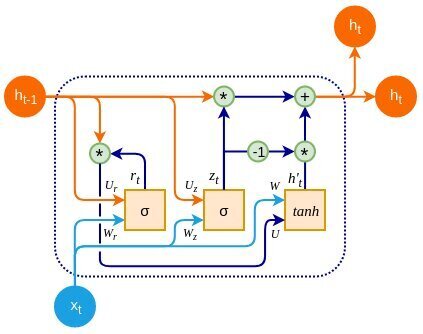

The key feature of GRUs is their gating mechanism, which consists of two gates:

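- Update gate ($z_t$): Determines how much of the previous hidden state is carried forward and how much is replaced by new candidate content

- Reset gate ($r_t$): Determines how much of the previous hidden state is used when computing the new candidate, which lets the unit forget past information that is no longer relevant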

These gates allow GRUs to selectively update or reset their memory content, enabling them to capture long-term dependencies in sequential data[3].

The main equations governing a GRU cell are:

$$
\begin{aligned}
z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z) \\
r_t &= \sigma(W_r x_t + U_r h_{t-1} + b_r) \\
\tilde{h}_t &= \tanh(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

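Here $z_t$ is the update gate, $r_t$ is the reset gate, $\tilde{h}_t$ is the candidate hidden state, and $h_t$ is the final hidden state. $W$, $U$ and $b$ are the learned input weights, recurrent weights and biases, $\sigma$ is the logistic sigmoid function, and $\odot$ denotes element-wise multiplication[1].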
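The equations translate almost line for line into code. The following is a minimal NumPy sketch for illustration, not a reference implementation: the keys of the `params` dictionary, the `init_params` helper and the random initialization are assumptions, and the weights are untrained. It follows the convention used above, where $z_t$ weights the new candidate; some libraries (PyTorch's `nn.GRU`, for instance) swap the roles of $z_t$ and $1 - z_t$.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid, the sigma in the gate equations."""
    return 1.0 / (1.0 + np.exp(-x))

def gru_cell(x_t, h_prev, params):
    """One GRU step following the update/reset/candidate/output equations.

    params: dict with keys W_z, U_z, b_z, W_r, U_r, b_r, W_h, U_h, b_h
    (hypothetical naming chosen to mirror the symbols in the text).
    """
    p = params
    z_t = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])  # update gate
    r_t = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])  # reset gate
    # Candidate state: the reset gate scales h_prev before the recurrent product
    h_tilde = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r_t * h_prev) + p["b_h"])
    # Interpolate between the previous state and the candidate
    return (1.0 - z_t) * h_prev + z_t * h_tilde

def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases, for illustration only (untrained)."""
    rng = np.random.default_rng(seed)
    params = {}
    for gate in ("z", "r", "h"):
        params[f"W_{gate}"] = rng.normal(0.0, 0.1, (hidden_size, input_size))
        params[f"U_{gate}"] = rng.normal(0.0, 0.1, (hidden_size, hidden_size))
        params[f"b_{gate}"] = np.zeros(hidden_size)
    return params

# Run the cell over a short random sequence of 5 inputs with 3 features each
params = init_params(input_size=3, hidden_size=4)
h = np.zeros(4)
xs = np.random.default_rng(1).normal(size=(5, 3))
for x in xs:
    h = gru_cell(x, h, params)
print(h.shape)  # (4,)
```

Setting a large negative update-gate bias drives $z_t$ toward 0, so the cell simply copies $h_{t-1}$ forward unchanged; this is the mechanism that lets a GRU carry information across many time steps.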

GRUs have several advantages:

- Simpler structure than LSTMs (two gates instead of three, and no separate cell state), resulting in fewer parameters and faster computation[4]

- Ability to capture both short-term and long-term dependencies in sequences[4]

- Performance comparable to LSTMs on various tasks, including polyphonic music modeling, speech signal modeling, and natural language processing[1]
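The "simpler structure" advantage can be made concrete by counting the trainable parameters of a single layer. This sketch assumes one bias vector per gate, as in the equations above, and a standard LSTM with four weight groups (input, forget and output gates plus the cell candidate); the function names are hypothetical.

```python
def gru_param_count(input_size: int, hidden_size: int) -> int:
    # Three weight groups (update gate, reset gate, candidate state), each with
    # W (hidden x input), U (hidden x hidden) and one bias vector b (hidden)
    per_group = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return 3 * per_group

def lstm_param_count(input_size: int, hidden_size: int) -> int:
    # Four weight groups (input, forget, output gates and cell candidate),
    # with the same W, U, b shapes per group
    per_group = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return 4 * per_group

print(gru_param_count(64, 128))   # 74112
print(lstm_param_count(64, 128))  # 98816
```

Under these assumptions a GRU layer always has exactly three quarters of the parameters of an LSTM layer of the same size. Some frameworks, such as PyTorch, keep two bias vectors per gate, so the counts they report are slightly higher.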

In practice, the choice between GRUs and LSTMs depends on the specific task and dataset. Researchers have found that gating mechanisms are generally helpful, but there is no definitive conclusion on which of the two architectures is superior[1].

GRUs have been successfully applied in various fields, including:

- Natural language processing

- Speech recognition

- Machine translation

- Time series analysis

As a simplified version of LSTMs, GRUs offer a balance between computational efficiency and modeling power, making them a popular choice for many sequence modeling tasks in deep learning[2][3][4].

Further Reading

1. Gated recurrent unit – Wikipedia

2. Understanding GRU Networks – Simeon Kostadinov, Towards Data Science

3. Gated Recurrent Unit Networks – GeeksforGeeks

4. 10.2. Gated Recurrent Units (GRU) – Dive into Deep Learning 1.0.3 documentation

5. Gated Recurrent Unit: an overview – ScienceDirect Topics

Description:

Efficient handling of sequential data, similar to LSTMs but with a simplified structure.

IoT Scenarios:

Time-series analysis, anomaly detection, and sequential pattern recognition.

- Real-Time Anomaly Detection: Monitoring sensor data to flag anomalies as they occur

- Predictive Analytics: Forecasting demand and maintenance needs in real time

- Smart Home Systems: Modeling and predicting user behavior to drive automation

- Sensor Data Analysis: Processing and interpreting continuous streams of data from IoT devices
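For the real-time scenarios above, a useful property is that a GRU needs only its previous hidden state to process the next reading, so a device can keep that state between batches instead of reprocessing the whole history. The sketch below assumes a NumPy implementation with random, untrained weights; `StreamingGRU` and its `update` method are hypothetical names. In a real deployment the hidden state would feed a trained output layer; one common pattern is to forecast the next reading and flag large forecast errors as anomalies.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x_t, h_prev, p):
    """One GRU step using the same equations and convention as in the text."""
    z = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])
    r = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])
    h_tilde = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r * h_prev) + p["b_h"])
    return (1.0 - z) * h_prev + z * h_tilde

class StreamingGRU:
    """Hypothetical helper that keeps the hidden state between calls.

    Weights are random for illustration; a real model would load trained ones.
    """

    def __init__(self, input_size, hidden_size, seed=0):
        rng = np.random.default_rng(seed)
        self.p = {}
        for g in ("z", "r", "h"):
            self.p[f"W_{g}"] = rng.normal(0.0, 0.1, (hidden_size, input_size))
            self.p[f"U_{g}"] = rng.normal(0.0, 0.1, (hidden_size, hidden_size))
            self.p[f"b_{g}"] = np.zeros(hidden_size)
        self.h = np.zeros(hidden_size)

    def update(self, readings):
        """Consume readings of shape (n_steps, input_size); return the latest state."""
        for x_t in readings:
            self.h = gru_step(x_t, self.h, self.p)
        return self.h.copy()

# Simulated sensor stream: 100 readings from 2 channels
stream = np.random.default_rng(42).normal(size=(100, 2))

# Feed the stream in chunks of 10 readings, as a device might deliver it
online = StreamingGRU(input_size=2, hidden_size=8)
for start in range(0, len(stream), 10):
    state = online.update(stream[start:start + 10])

# Processing the full sequence in one pass yields the same final state
offline = StreamingGRU(input_size=2, hidden_size=8)
print(np.allclose(state, offline.update(stream)))  # True
```

Because the carried hidden state summarizes everything seen so far, chunked online processing and a single offline pass give the same result, and the per-reading cost stays constant regardless of how long the device has been running.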