Generative Pre-trained Transformers (GPT) are a family of neural network models developed by OpenAI, based on the transformer architecture. These models are designed to generate human-like text by leveraging deep learning techniques and are used in various natural language processing (NLP) tasks, such as language translation, text summarization, and content generation.

Architecture and Training

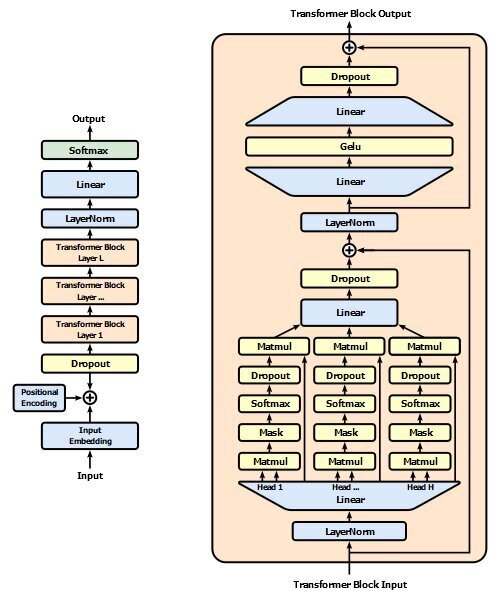

GPT models utilize the transformer architecture, which employs self-attention mechanisms to process all positions of an input sequence in parallel, rather than one step at a time as traditional recurrent neural networks do. Because each position can attend directly to every earlier position, transformers capture long-range dependencies in text more effectively, and the parallelism makes training on large corpora more efficient[1][2].
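To make the self-attention idea concrete, here is a minimal sketch of single-head, causally masked scaled dot-product attention in plain Python. It is an illustration under simplifying assumptions, not the implementation used in any GPT model: the function names are hypothetical, and the queries, keys, and values are taken to be the input vectors themselves, whereas a real transformer first applies learned projection matrices.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(x):
    """Single-head scaled dot-product self-attention with a causal mask.

    x is a list of token vectors (each a list of floats). Assumption for
    brevity: queries, keys, and values are the inputs themselves.
    """
    d = len(x[0])
    outputs, weights = [], []
    for i, query in enumerate(x):
        # Causal mask: position i may only attend to positions 0..i.
        scores = [
            sum(q * k for q, k in zip(query, x[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        w = softmax(scores)
        # Output is the attention-weighted average of the visible vectors.
        out = [sum(w[j] * x[j][c] for j in range(i + 1)) for c in range(d)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens)
```

The loop here runs position by position only for readability. No iteration depends on the result of another, which is why practical implementations compute all positions at once as matrix operations.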

The training process of GPT involves two main stages: pre-training and fine-tuning. During the pre-training phase, the model is trained on a massive corpus of text without human-provided labels (an unsupervised, or more precisely self-supervised, setup). The objective is to predict the next token in a sequence given all previous tokens, so the text itself supplies the training signal, and the model learns the statistical properties and structure of natural language[3][5]. After pre-training, the model undergoes fine-tuning on specific tasks using labeled datasets, allowing it to adapt to particular applications while leveraging the general language understanding acquired during pre-training[3].
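The pre-training objective can be sketched as an average negative log-likelihood over next-token predictions. The code below is a toy illustration, not OpenAI's training code: `next_token_loss`, `uniform_model`, and the four-word vocabulary are hypothetical names chosen for the example, and the "model" is a stand-in that ignores its input instead of a neural network.

```python
import math

def next_token_loss(tokens, predict_next):
    """Average negative log-likelihood of each token given the tokens before it."""
    total = 0.0
    for t in range(1, len(tokens)):
        probs = predict_next(tokens[:t])  # distribution over the vocabulary
        total += -math.log(probs[tokens[t]])
    return total / (len(tokens) - 1)

VOCAB = ["the", "cat", "sat", "down"]

def uniform_model(prefix):
    """Hypothetical stand-in for a trained network: ignores the prefix."""
    return {word: 1.0 / len(VOCAB) for word in VOCAB}

loss = next_token_loss(["the", "cat", "sat", "down"], uniform_model)
```

A model that guesses uniformly over four words has a loss of ln 4 per token. Pre-training adjusts the network's parameters to push this quantity down, which requires assigning higher probability to the tokens that actually follow each prefix.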

Evolution and Versions

The first GPT model, GPT-1, was introduced in 2018. Since then, OpenAI has released several iterations, each with increased capabilities due to larger model sizes and more extensive training datasets. GPT-3, for instance, has 175 billion parameters and was trained on text drawn from roughly 45 terabytes of raw data, making it one of the largest language models at the time of its release[2]. GPT-4, introduced in March 2023, is a multimodal model capable of processing both text and image inputs, although its output remains text-based[1][2].

Applications and Impact

GPT models have been widely adopted across various industries due to their ability to generate coherent and contextually relevant text. They are used in applications such as chatbots, automated content creation, language translation, and even complex tasks like writing code or assisting with research[2][5]. The models’ few-shot learning capabilities allow them to perform well on new tasks from only a handful of examples, often supplied directly in the prompt with little or no additional training, making them highly versatile and efficient for different NLP applications[5].
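As a rough illustration of few-shot use, the sketch below assembles a prompt that shows the model a few worked examples before the actual query. The helper name `build_few_shot_prompt` and the `Input:`/`Output:` layout are assumptions made for this example, not a format required by any particular GPT API, and no model is called here.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a plain-text prompt: instruction, worked examples, then the query."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final Output line is left empty for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("good morning", "bonjour")],
    "thank you",
)
```

The resulting string would be sent to the model as ordinary input. The examples condition the model's continuation without changing its weights, which is what distinguishes this from fine-tuning.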

Challenges and Ethical Considerations

Despite their impressive capabilities, GPT models are not without limitations. One significant challenge is the potential for bias, as these models learn from the data they are trained on, which may contain biases and stereotypes. This can lead to biased or inappropriate text generation, raising ethical concerns about their use[3]. Researchers are actively exploring methods to mitigate these biases, such as using more diverse training data or modifying the model’s architecture to account for biases explicitly[3].

Conclusion

GPT models represent a significant advancement in the field of NLP, offering powerful tools for generating human-like text and performing various language-related tasks. Their ability to process large amounts of data and generate coherent text has numerous practical applications, from enhancing customer service to automating content creation. However, it is crucial to address the ethical challenges associated with these models to ensure their responsible and beneficial use.

References:

1. Wikipedia

2. AWS

3. Encord

4. arXiv

5. Moveworks

Further Reading

1. Generative pre-trained transformer – Wikipedia

2. What is GPT AI? Generative Pre-trained Transformers Explained – AWS

3. Generative Pre-Trained Transformer (GPT) definition | Encord

4. [2305.10435] Generative Pre-trained Transformer: A Comprehensive Review on Enabling Technologies, Potential Applications, Emerging Challenges, and Fut…

5. What is a Generative Pre-Trained Transformer? | Moveworks