The Inception Model is a deep convolutional neural network architecture introduced by Google researchers in 2014. It gained prominence by winning the ImageNet Large Scale Visual Recognition Challenge (ILSVRC14) and has since been influential in the field of computer vision.

Key Features

Inception Modules

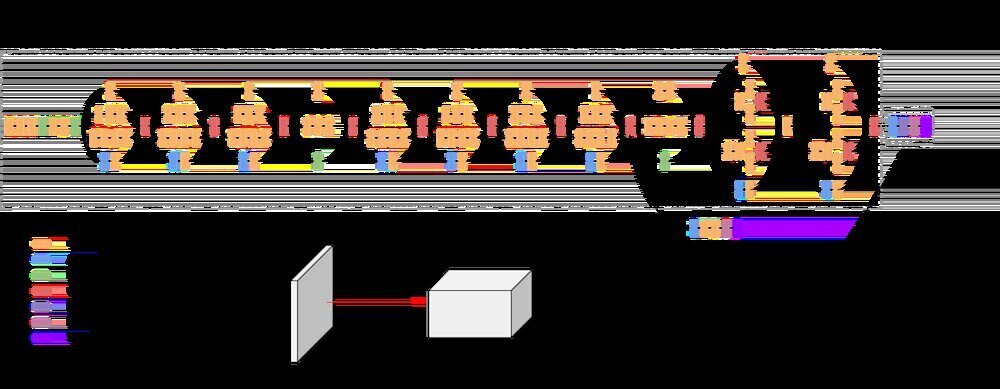

The core innovation of the Inception Model is the Inception Module, which allows for multi-level feature extraction through the simultaneous application of several convolutional filters of different sizes (e.g., 1×1, 3×3, 5×5) and a pooling operation. This design enables the network to capture information at various scales and complexities within the same layer, enhancing its ability to learn robust features[5].
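For the parallel branches to be merged later, each one has to produce a feature map of the same height and width. The sketch below is an illustration only: the 28×28 input size, stride 1, and "same" padding are assumptions not stated in the text. It applies the standard output-size formula to each branch.

```python
def conv_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling window along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


def same_padding(kernel: int) -> int:
    """Padding that preserves the spatial size for an odd kernel at stride 1."""
    return (kernel - 1) // 2


# Assumed input height/width, chosen purely for illustration.
input_size = 28

# One entry per parallel branch: name -> window size.
branch_kernels = {"1x1 conv": 1, "3x3 conv": 3, "5x5 conv": 5, "3x3 max pool": 3}

branch_sizes = {
    name: conv_output_size(input_size, k, stride=1, padding=same_padding(k))
    for name, k in branch_kernels.items()
}

for name, size in branch_sizes.items():
    print(f"{name}: {size}x{size}")
```

Under these assumptions every branch keeps the 28×28 spatial size, so the branches differ only in how many channels they produce.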

Dimensionality Reduction

A crucial aspect of the Inception Module is the use of 1×1 convolutions for dimensionality reduction. This technique reduces the computational complexity and the number of parameters without sacrificing the depth of the network. By applying 1×1 convolutions before the larger convolutions, the model can significantly decrease the number of operations required, making it more efficient[2][5].
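A rough count of multiply-accumulate operations shows the size of the saving. The channel counts and the 28×28 feature map below are assumed values chosen for illustration, and biases are ignored; this is a back-of-the-envelope sketch rather than a measurement of any particular implementation.

```python
def conv_macs(height: int, width: int, in_ch: int, out_ch: int, kernel: int) -> int:
    """Multiply-accumulates for a stride-1, same-padded kernel x kernel convolution."""
    return height * width * in_ch * out_ch * kernel * kernel


# Assumed sizes, for illustration only.
H = W = 28        # spatial size of the feature map
C_IN = 192        # input channels
C_REDUCED = 16    # channels after the 1x1 reduction
C_OUT = 32        # output channels of the 5x5 branch

# 5x5 convolution applied directly to all input channels.
direct_macs = conv_macs(H, W, C_IN, C_OUT, 5)

# 1x1 reduction first, then the 5x5 convolution on the reduced channels.
reduced_macs = conv_macs(H, W, C_IN, C_REDUCED, 1) + conv_macs(H, W, C_REDUCED, C_OUT, 5)

print(f"direct 5x5:      {direct_macs:,} MACs")
print(f"1x1 then 5x5:    {reduced_macs:,} MACs")
print(f"reduction ratio: {direct_macs / reduced_macs:.2f}x")
```

With these numbers the direct convolution costs 120,422,400 multiply-accumulates, against 12,443,648 for the reduced path, which is roughly 9.7 times fewer.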

Concatenation

After applying the different filters and pooling, the outputs are concatenated along the channel dimension. This ensures that subsequent layers have access to features extracted at different scales, which is vital for capturing intricate patterns in the data[5].
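The merge step itself is simple: feature maps that share a spatial size are stacked along the channel axis, so the output channel count is the sum of the branch channel counts. The following sketch uses plain nested lists in (channels, height, width) layout; the helper names, branch widths, and 4×4 spatial size are hypothetical and chosen only to keep the example small.

```python
def make_feature_map(channels: int, height: int, width: int, fill: float) -> list:
    """Build a (channels, height, width) nested list filled with one value."""
    return [[[fill] * width for _ in range(height)] for _ in range(channels)]


def concat_channels(*feature_maps: list) -> list:
    """Concatenate (C, H, W) nested lists along the channel axis."""
    height = len(feature_maps[0][0])
    width = len(feature_maps[0][0][0])
    merged = []
    for fm in feature_maps:
        if len(fm[0]) != height or len(fm[0][0]) != width:
            raise ValueError("all branches must share the same spatial size")
        merged.extend(fm)  # channels of each branch are appended in order
    return merged


# Assumed output channels per branch, for illustration only.
branch_channels = {"1x1": 64, "3x3": 128, "5x5": 32, "pool_proj": 32}

branches = [
    make_feature_map(c, 4, 4, float(i))
    for i, c in enumerate(branch_channels.values())
]
merged = concat_channels(*branches)

print(len(merged), len(merged[0]), len(merged[0][0]))  # 256 4 4
```

In a deep learning framework the same step is normally a single concatenation call on tensors; the nested-list version is only meant to make the channel bookkeeping explicit.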

Evolution of Inception Models

Inception v1 (GoogLeNet)

The first iteration, known as GoogLeNet, consists of 22 layers (27 including pooling layers) and employs nine Inception Modules. Instead of flattening the final feature maps into a stack of large fully connected layers, it applies global average pooling ahead of the final classifier, which dramatically reduced the number of parameters and mitigated overfitting[2].
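To see why global average pooling saves parameters, compare the classifier weights needed when a final feature map is flattened with those needed when each channel is first averaged to a single value. The 1024×7×7 feature map and 1000 classes below are assumed values for illustration, and the helper name is hypothetical.

```python
def global_average_pool(feature_map: list) -> list:
    """Average each channel of a (C, H, W) nested list down to one value."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]


# Tiny demonstration: two channels of size 2x2.
demo = [[[1, 2], [3, 4]], [[0, 0], [0, 8]]]
pooled = global_average_pool(demo)
print(pooled)  # [2.5, 2.0]

# Assumed sizes for the parameter comparison (biases ignored).
CHANNELS, HEIGHT, WIDTH, CLASSES = 1024, 7, 7, 1000

flatten_fc_weights = CHANNELS * HEIGHT * WIDTH * CLASSES  # classifier on flattened map
gap_fc_weights = CHANNELS * CLASSES                       # classifier on pooled vector

print(f"flatten + classifier: {flatten_fc_weights:,} weights")
print(f"GAP + classifier:     {gap_fc_weights:,} weights")
```

Under these assumptions the flattened design needs 50,176,000 classifier weights and the pooled design 1,024,000, a 49-fold difference that comes entirely from collapsing the 7×7 spatial grid.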

Inception v2 and v3

Inception v2 and v3 introduced several upgrades to improve accuracy and reduce computational complexity. One key innovation was the factorization of convolutions, such as replacing a 5×5 convolution with two stacked 3×3 convolutions, which cover the same receptive field with fewer weights (18 instead of 25 per input-output channel pair). These versions also expanded filter banks, making modules wider rather than deeper, to avoid representational bottlenecks. Auxiliary classifiers, already used in Inception v1 to combat the vanishing gradient problem during training, were retained, and Inception v3 added batch normalization to them[1].
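The arithmetic behind the 5×5 factorization can be checked directly. This sketch assumes stride-1 convolutions and the same number of channels (64, an arbitrary choice) at every stage, and it ignores biases.

```python
def conv_weights(kernel_h: int, kernel_w: int, in_ch: int, out_ch: int) -> int:
    """Number of weights in a convolution layer, ignoring biases."""
    return kernel_h * kernel_w * in_ch * out_ch


def stacked_receptive_field(kernels: list) -> int:
    """Receptive field of stride-1 convolutions applied one after another."""
    field = 1
    for k in kernels:
        field += k - 1
    return field


channels = 64  # assumed channel count, kept constant for illustration

single_5x5 = conv_weights(5, 5, channels, channels)
two_3x3 = 2 * conv_weights(3, 3, channels, channels)
saving = 1 - two_3x3 / single_5x5

print(f"one 5x5 layer:  {single_5x5:,} weights")
print(f"two 3x3 layers: {two_3x3:,} weights")
print(f"saving:         {saving:.0%}")
print(f"receptive field of two 3x3 layers: {stacked_receptive_field([3, 3])}")
```

With equal channel counts, the two 3×3 layers use 73,728 weights against 102,400 for the single 5×5 layer, a saving of 28%, while still covering a 5×5 receptive field.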

Inception v4 and Inception-ResNet

Inception v4 and Inception-ResNet further refined the architecture. Inception v4 made the modules more uniform and deepened the network without adding residual connections, while the Inception-ResNet variants incorporated residual connections, which help in training very deep networks by ensuring better gradient flow. Both families also introduced specialized reduction blocks to manage the dimensions of the feature maps effectively[1].
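The idea of a residual connection can be shown in a few lines: the block outputs its input plus whatever the inner branch computes. The sketch below works on flat lists of floats, and the function names and stand-in branches are hypothetical; in a real Inception-ResNet block the branch would be an Inception-style set of convolutions.

```python
def residual_block(x: list, branch) -> list:
    """Return x + branch(x) elementwise, the basic residual connection."""
    fx = branch(x)
    if len(fx) != len(x):
        raise ValueError("branch output must match the input shape")
    return [a + b for a, b in zip(x, fx)]


def zero_branch(x: list) -> list:
    """Stand-in for a branch that has learned nothing yet."""
    return [0.0] * len(x)


def halve(x: list) -> list:
    """Stand-in for a learned transformation."""
    return [0.5 * v for v in x]


x = [1.0, -2.0, 4.0]

identity_out = residual_block(x, zero_branch)
shifted_out = residual_block(x, halve)

print(identity_out)  # [1.0, -2.0, 4.0]
print(shifted_out)   # [1.5, -3.0, 6.0]
```

When the branch contributes nothing, the block reduces to the identity, so the input (and, during training, its gradient) passes through unchanged. This is one common intuition for why such shortcuts ease the training of very deep networks.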

Applications

Inception Models have been successfully applied to various computer vision tasks, including:

- Image classification

- Object detection

- Face recognition

- Image segmentation

Their ability to capture multi-scale information makes them particularly effective in scenarios where detailed feature extraction is crucial for accurate predictions[5].

Conclusion

The Inception Model represents a significant advancement in convolutional neural network architectures. Its innovative approach to multi-scale feature extraction and dimensionality reduction has set a precedent for subsequent neural network designs, contributing to state-of-the-art performance in many computer vision tasks[5].

Further Reading

1. A Simple Guide to the Versions of the Inception Network | by Bharath Raj | Towards Data Science

2. ML | Inception Network V1 – GeeksforGeeks

3. A guide to Inception Model in Keras –

4. Deep Learning: Understanding The Inception Module | by Richmond Alake | Towards Data Science

5. Inception Module Definition | DeepAI