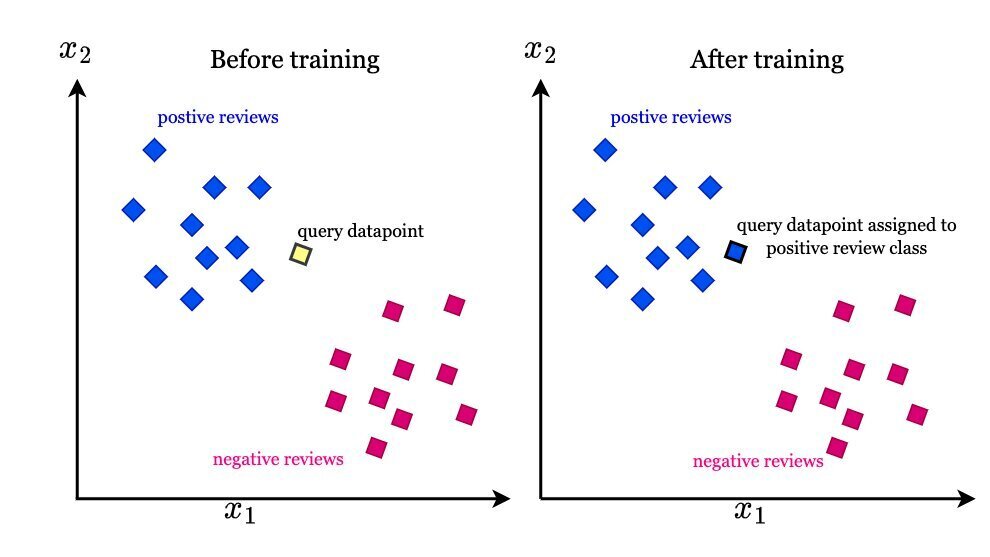

K-Nearest Neighbors (KNN) is a fundamental machine learning algorithm used for classification and regression tasks. It identifies the ‘k’ training points (neighbors) closest to a given input and predicts either the majority class of those neighbors (classification) or the average of their values (regression).

Overview of KNN

The KNN algorithm is non-parametric and does not make any assumptions about the underlying data distribution, making it versatile for various applications. It was first developed by Evelyn Fix and Joseph Hodges in 1951 and later expanded by Thomas Cover[4].

How KNN Works

- Distance Measurement: KNN relies on a distance metric to determine the proximity of data points. The most commonly used distance measure is Euclidean distance, but other metrics like Manhattan distance can also be employed[1][3].

- Choosing ‘k’: The parameter ‘k’ represents the number of neighbors considered for making predictions. A smaller value of ‘k’ can lead to a model that is sensitive to noise, while a larger value may smooth out the decision boundary too much, potentially ignoring important patterns. A common heuristic is to set ‘k’ to the square root of the number of observations in the training dataset[2][4].

- Classification and Regression:

  - Classification: For classification tasks, KNN assigns the class label based on the majority vote among the ‘k’ nearest neighbors. For instance, if ‘k’ is 5, and three neighbors belong to class A while two belong to class B, the new data point is classified as class A[3][5].

  - Regression: In regression tasks, KNN predicts the output value by averaging the values of the ‘k’ nearest neighbors[4].
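The steps above (measuring distance, picking ‘k’, then voting or averaging) can be sketched in a few lines of plain Python. This is a minimal brute-force illustration, not a reference implementation: the function names, the toy data, and the choice to nudge the square-root heuristic to an odd number (to reduce voting ties) are assumptions made for this example.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Straight-line distance between two equal-length numeric vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))


def k_nearest(train_X, train_y, query, k, distance=euclidean):
    """Return the targets of the k training points closest to query."""
    ranked = sorted(zip(train_X, train_y), key=lambda pair: distance(pair[0], query))
    return [target for _, target in ranked[:k]]


def knn_classify(train_X, train_y, query, k, distance=euclidean):
    """Majority vote among the labels of the k nearest neighbors."""
    votes = Counter(k_nearest(train_X, train_y, query, k, distance))
    return votes.most_common(1)[0][0]


def knn_regress(train_X, train_y, query, k, distance=euclidean):
    """Mean of the target values of the k nearest neighbors."""
    neighbors = k_nearest(train_X, train_y, query, k, distance)
    return sum(neighbors) / len(neighbors)


def sqrt_k(n_samples):
    """Square-root heuristic for k; made odd here (an assumption) to reduce ties."""
    k = max(1, round(math.sqrt(n_samples)))
    return k if k % 2 == 1 else k + 1


# Toy data, made up for illustration: two clusters in 2D.
points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
labels = ["A", "A", "A", "B", "B", "B"]
values = [10.0, 12.0, 11.0, 30.0, 32.0, 31.0]

query = (2.0, 2.0)
print(knn_classify(points, labels, query, k=5))  # 3 votes for A, 2 for B
print(knn_regress(points, values, query, k=3))   # mean of the 3 closest values
print(sqrt_k(len(points)))
```

Passing `distance=manhattan` swaps the metric without touching the rest of the code. Sorting every training point for each query is the simplest approach, and it is also the reason the basic algorithm becomes slow on large datasets.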

Advantages and Disadvantages

Advantages:

- Simplicity: KNN is easy to implement and understand, making it a popular choice for beginners in machine learning.

- Flexibility: It can handle both numerical and categorical data, provided the categorical features are encoded or compared with a suitable distance metric, which allows it to be used in a wide range of applications[3].

Disadvantages:

- Computationally Intensive: KNN can be slow for large datasets because, in its basic brute-force form, it calculates the distance from the query to every training sample for each prediction[3][5].

- Curse of Dimensionality: The algorithm’s performance may degrade as the number of features increases, making it less effective in high-dimensional spaces[3].

- Sensitive to Noise: KNN can be affected by irrelevant features and outliers in the dataset, which can lead to inaccurate predictions[4].
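One way to see why high-dimensional data hurts KNN is to compare the nearest and the farthest distance from a query point as the number of features grows. The sketch below assumes points drawn uniformly at random from a unit hypercube, which is an idealized setup chosen for illustration; the function name and parameters are hypothetical. When the ratio approaches 1, the "nearest" neighbor is barely closer than any other point, so the neighborhood carries little information.

```python
import math
import random


def nearest_to_farthest_ratio(dim, n_points=200, seed=0):
    """Ratio of the nearest to the farthest distance from a random query.

    Points are drawn uniformly from the unit hypercube [0, 1]^dim.
    A ratio near 1 means near and far neighbors are hard to tell apart.
    """
    rng = random.Random(seed)  # fixed seed so repeated runs give the same numbers
    query = [rng.random() for _ in range(dim)]
    distances = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        distances.append(math.dist(query, point))  # Euclidean distance
    return min(distances) / max(distances)


for dim in (2, 10, 100, 1000):
    print(dim, round(nearest_to_farthest_ratio(dim), 3))
```

The printed ratio rises toward 1 as `dim` increases. Real datasets are rarely uniform, so the effect is usually milder in practice, but the trend is the reason feature selection or dimensionality reduction is often applied before KNN.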

Conclusion

K-Nearest Neighbors is a powerful yet straightforward algorithm that serves as a great introduction to machine learning concepts. Its effectiveness in various domains, from pattern recognition to data mining, underscores its importance in the field. Despite its limitations, KNN remains a widely used technique due to its intuitive nature and ease of implementation[2][3][4].

Further Reading

1. Introduction to machine learning: k-nearest neighbors – PMC

2. A Simple Introduction to K-Nearest Neighbors Algorithm – Dhilip Subramanian, Towards Data Science

3. K-Nearest Neighbor (KNN) Algorithm – GeeksforGeeks

4. k-nearest neighbors algorithm – Wikipedia

5. https://www.javatpoint.com/k-nearest-neighbor-algorithm-for-machine-learning

Description:

Classification and regression based on proximity to nearest neighbors.

IoT Scenes:

Pattern recognition, anomaly detection, recommendation systems, and fault detection.

Pattern Recognition: Classifying sensor data based on similarity to known patterns.

Anomaly Detection: Identifying anomalies by comparing data points to nearest neighbors.

Recommendation Systems: Providing recommendations based on user behavior data.

Fault Detection: Detecting faults by comparing sensor readings to known faulty patterns.
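As a rough illustration of the anomaly and fault detection scenes, a reading can be scored by its mean distance to the ‘k’ nearest readings recorded during normal operation. Everything specific in the sketch below is an assumption for the example: the sensor values are invented, the two features (temperature and vibration) are left unscaled for brevity, and the threshold is arbitrary.

```python
import math


def knn_anomaly_score(reference, reading, k=3):
    """Mean distance from reading to its k nearest reference readings."""
    distances = sorted(math.dist(reading, ref) for ref in reference)
    nearest = distances[:k]
    return sum(nearest) / len(nearest)


# Hypothetical (temperature in degrees C, vibration in mm/s) readings
# collected while the device was known to be working normally.
normal_readings = [
    (40.1, 0.50), (40.4, 0.52), (39.8, 0.48), (40.0, 0.51),
    (40.3, 0.49), (39.9, 0.53), (40.2, 0.47),
]

THRESHOLD = 1.0  # assumed cut-off; in practice it would be tuned on held-out data

for reading in [(40.1, 0.50), (47.5, 1.90)]:
    score = knn_anomaly_score(normal_readings, reading)
    status = "anomaly" if score > THRESHOLD else "normal"
    print(reading, round(score, 3), status)
```

A reading close to the normal cluster gets a small score, while one far from every known reading gets a large score and is flagged. Because the features here have different units, a real pipeline would normally standardize them first so that one sensor does not dominate the distance.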