Localization (estimating where a system is) and mapping (building a model of its surroundings) are fundamental problems in robotics and computer vision. Solving them is what enables autonomous systems to understand their environment and navigate within it[1]. One of the most prominent approaches to these problems is Simultaneous Localization and Mapping (SLAM), in which a robot or device constructs a map of an unknown environment while simultaneously tracking its own position within that map[1][2].
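In probabilistic terms, SLAM is commonly written as estimating a joint posterior over the robot's trajectory and the map, given everything it has sensed and every motion command (or odometry reading) it has issued. This is the standard textbook formulation and is included here only to make the "map while tracking position" idea concrete. The symbols are defined as follows: $x_{1:t}$ are the poses up to time $t$, $m$ is the map, $z_{1:t}$ are the measurements and $u_{1:t}$ are the controls.

```latex
% Full SLAM: the whole trajectory and the map
p(x_{1:t}, m \mid z_{1:t}, u_{1:t})

% Online SLAM: only the current pose and the map
p(x_t, m \mid z_{1:t}, u_{1:t})
```

Because the map and the poses are estimated jointly, an error in one feeds into the other. This coupling is what makes SLAM harder than either localization with a known map or mapping with known poses.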

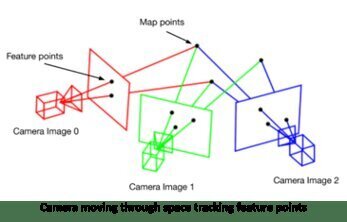

SLAM algorithms build their representation of the environment from sensor data, typically from cameras, LiDAR, or other sensors. Visual SLAM, which relies on camera input, has gained significant attention because cameras are widely available in mobile devices and robots[2]. Feature-based visual SLAM systems detect distinctive keypoints in each image, match them across frames, and use those matches to estimate the camera’s motion and to reconstruct the 3D structure of the scene.
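Real visual SLAM front ends estimate full 3D camera motion from noisy matches that contain outliers, usually inside a robust estimator such as RANSAC. As a much-simplified sketch, the following Python recovers the rigid rotation and translation between two frames in the least-squares sense (2D Procrustes/Kabsch alignment). It assumes matched 2D points with known correspondences and no outliers. The function name `estimate_rigid_2d` and the sample points are illustrative only.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping src points onto dst.

    src, dst: equal-length lists of (x, y) tuples; src[i] is assumed to match dst[i].
    Returns (theta, tx, ty) such that dst ~= R(theta) * src + (tx, ty).
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(src)
    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # Accumulate dot and cross products of the centred point pairs.
    s_cos = 0.0
    s_sin = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    # The angle that maximises alignment of the centred sets.
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the destination centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


# Usage: move some points by a known motion, then recover that motion.
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
true_theta = math.radians(30)
c, s = math.cos(true_theta), math.sin(true_theta)
dst = [(c * x - s * y + 1.0, s * x + c * y + 2.0) for x, y in src]
theta, tx, ty = estimate_rigid_2d(src, dst)
print(math.degrees(theta), tx, ty)
```

With perfect correspondences, the recovered values match the 30° rotation and (1, 2) translation up to floating-point error. With real feature matches, a few wrong pairs can badly skew a plain least-squares fit, which is why robust estimation is normally wrapped around it.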

Recent advances in deep learning have led to the integration of neural networks into SLAM systems to improve their robustness and accuracy[2][4]. For instance, learned models can be used for feature extraction, for loop closure detection (recognizing a previously visited place so that the drift accumulated along the trajectory can be corrected), or even as end-to-end SLAM systems. This fusion of traditional geometric approaches with learning-based methods has shown promising results in a variety of challenging scenarios.
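One way learned models are used for loop closure is place recognition. In this approach, each keyframe is summarized by a global descriptor vector (NetVLAD-style descriptors are one well-known example), and the current frame is compared against older keyframes. The sketch below assumes the descriptors have already been computed by some network. The vectors, the function names and the `min_gap`/`threshold` values are hypothetical and chosen only for illustration. The code shows only the retrieval step with cosine similarity.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptor vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def find_loop_candidate(query, keyframe_descriptors, min_gap=30, threshold=0.9):
    """Return (index, score) of the most similar older keyframe, or None.

    keyframe_descriptors are in temporal order. The last `min_gap` entries are
    skipped because recent frames look similar for trivial reasons (the camera
    has barely moved), which would not indicate a revisited place.
    """
    searchable = keyframe_descriptors[:max(0, len(keyframe_descriptors) - min_gap)]
    best = None
    for i, desc in enumerate(searchable):
        score = cosine_similarity(query, desc)
        if score >= threshold and (best is None or score > best[1]):
            best = (i, score)
    return best


# Usage with made-up 3-D descriptors (real ones have hundreds or thousands of dimensions).
history = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.7, 0.7, 0.0]]
history += [[0.0, 0.98, 0.1]] * 5  # the most recent frames look just like the query
query = [0.0, 0.98, 0.1]
result = find_loop_candidate(query, history, min_gap=5)
print(result)
```

Here, keyframe 1 is returned as the loop candidate, and the near-identical recent frames are ignored. In practice, a candidate like this would still be checked geometrically (for example by feature matching and pose estimation) before the loop is closed, because a false loop closure can corrupt the whole map.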

Another important aspect of localization and mapping is object detection and recognition. YOLOv4 (You Only Look Once version 4), a real-time object detector that was state of the art when it was released in 2020, is one model that can be adapted for this purpose[3]. On its own, a detector like YOLOv4 localizes objects only in the image, as 2D bounding boxes. By combining those detections with depth information (for example from a stereo pair, an RGB-D sensor, or LiDAR) and the camera’s pose, it becomes possible not only to identify objects in the environment but also to estimate their 3D positions relative to the camera or robot.
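A detector's 2D box can be turned into a 3D position with the pinhole camera model, provided the camera intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`) and a metric depth for the object are known. The sketch below makes two assumptions. First, depth comes from an aligned depth image or stereo. Second, it uses the common OpenCV-style camera frame (x right, y down, z forward). The `Detection` and `PinholeIntrinsics` types are hypothetical containers, not YOLOv4's actual output format, and all numbers are made up.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """A 2D detection in pixel coordinates (hypothetical container)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


@dataclass
class PinholeIntrinsics:
    fx: float  # focal length in pixels along x
    fy: float  # focal length in pixels along y
    cx: float  # principal point x in pixels
    cy: float  # principal point y in pixels


def detection_to_camera_point(det, depth_m, K):
    """Back-project the box centre to (x, y, z) in metres in the camera frame.

    depth_m is the object's depth along the optical axis (z), assumed to be
    sampled from an aligned depth image or stereo disparity.
    """
    u = 0.5 * (det.x_min + det.x_max)
    v = 0.5 * (det.y_min + det.y_max)
    x = (u - K.cx) * depth_m / K.fx
    y = (v - K.cy) * depth_m / K.fy
    return (x, y, depth_m)


K = PinholeIntrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
chair = Detection("chair", 380.0, 200.0, 500.0, 360.0)
point = detection_to_camera_point(chair, 2.0, K)
print(chair.label, point)
```

Using the box centre is the simplest choice, but it can land on background pixels. A more robust variant would take the median depth inside the box or inside a segmentation mask. To place the object in the map rather than relative to the camera, the point would also be transformed by the camera pose estimated by SLAM.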

The integration of object detection models like YOLOv4 with SLAM systems can lead to semantically rich maps, where objects are not only localized but also classified. This semantic understanding of the environment is crucial for higher-level tasks such as navigation, manipulation, and human-robot interaction[1][2].
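A semantic map needs some way to decide whether a new labelled observation is an object already in the map or a new one (data association). The following is a toy, object-level sketch. It assumes observations have already been converted to 2D world coordinates, and it uses simple nearest-neighbour association restricted to the same class label. The class names and the 0.5 m `association_radius` are illustrative assumptions. Real systems typically use probabilistic association and keep track of uncertainty.

```python
import math
from dataclasses import dataclass


@dataclass
class SemanticLandmark:
    label: str
    x: float
    y: float
    observations: int = 1


class SemanticMap:
    """Toy object-level map: each landmark is a class label plus a 2D position."""

    def __init__(self, association_radius=0.5):
        self.association_radius = association_radius  # metres (assumed value)
        self.landmarks = []

    def add_observation(self, label, x, y):
        """Merge a labelled world-frame observation into the map.

        Reuses the closest landmark with the same label within
        association_radius; otherwise creates a new landmark.
        """
        best, best_dist = None, self.association_radius
        for lm in self.landmarks:
            if lm.label != label:
                continue  # only associate objects of the same class
            d = math.hypot(lm.x - x, lm.y - y)
            if d <= best_dist:
                best, best_dist = lm, d
        if best is None:
            best = SemanticLandmark(label, x, y)
            self.landmarks.append(best)
        else:
            # Running mean of all observations assigned to this landmark.
            n = best.observations + 1
            best.x += (x - best.x) / n
            best.y += (y - best.y) / n
            best.observations = n
        return best


m = SemanticMap(association_radius=0.5)
m.add_observation("chair", 2.0, 1.0)
m.add_observation("chair", 2.2, 1.0)  # the same chair, seen again
m.add_observation("table", 2.1, 1.0)  # different class, so a new landmark
m.add_observation("chair", 5.0, 1.0)  # too far away, so a second chair
for lm in m.landmarks:
    print(lm)
```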

As the field progresses, researchers are exploring ways to make localization and mapping systems more efficient, accurate, and adaptable to different environments. This includes developing methods for multi-sensor fusion, handling dynamic environments, and improving the scalability of SLAM algorithms for large-scale mapping[1][2].
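Multi-sensor fusion is often done with Kalman-filter variants (for example the extended Kalman filter) or with factor-graph optimization. As a minimal sketch of the idea, the following 1D linear Kalman filter works in two steps. First, it predicts the robot's position from odometry, which increases uncertainty. Then it corrects that prediction with a direct position measurement, which reduces uncertainty. The noise variances and readings are made-up values. A real system would estimate a full multi-dimensional state rather than a single coordinate.

```python
def kalman_predict(mean, var, motion, motion_var):
    """Prediction step: apply the odometry motion; uncertainty grows."""
    return mean + motion, var + motion_var


def kalman_update(mean, var, measurement, meas_var):
    """Update step: fuse a position measurement, weighted by relative confidence."""
    gain = var / (var + meas_var)  # Kalman gain in [0, 1]
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var


mean, var = 0.0, 1.0  # initial position estimate and its variance
# Each step: (odometry displacement, odometry variance, measured position, measurement variance)
steps = [(1.0, 0.1, 1.2, 0.5), (1.0, 0.1, 1.9, 0.5)]
for motion, q, z, r in steps:
    mean, var = kalman_predict(mean, var, motion, q)
    mean, var = kalman_update(mean, var, z, r)
    print(f"position={mean:.3f}  variance={var:.3f}")
```

The gain weights each source by how much it is trusted. When the predicted and measured variances are equal, the update simply averages the two, and the fused variance is always smaller than either input's.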

Key Components of Modern Localization and Mapping Systems

- Sensor data processing (e.g., visual features, point clouds)

- Motion estimation and tracking

- Map representation and update

- Loop closure detection

- Object detection and semantic labeling

- Multi-sensor fusion

- Deep learning integration for various subtasks
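Of the components listed above, map representation and update is one of the easiest to illustrate in isolation. A common (though far from the only) representation for range-sensor mapping is the occupancy grid. Each cell stores the log-odds that it is occupied, and every sensor beam nudges those values. The following Python is a minimal sketch under assumed parameters. The class name `OccupancyGrid`, the increments `l_occ`/`l_free` and the clamping bounds are illustrative tuning values, not taken from any particular system. The sensor pose is also assumed to be known, which in full SLAM it is not.

```python
import math


class OccupancyGrid:
    """2D grid storing per-cell log-odds of occupancy (0.0 means p = 0.5, unknown)."""

    def __init__(self, width, height, l_occ=0.85, l_free=-0.4, l_min=-4.0, l_max=4.0):
        self.width, self.height = width, height
        self.logodds = [[0.0] * width for _ in range(height)]  # indexed [row y][column x]
        self.l_occ, self.l_free = l_occ, l_free
        self.l_min, self.l_max = l_min, l_max

    def _update(self, cx, cy, delta):
        # Clamp so a cell can still change later (e.g. when an object moves away).
        v = self.logodds[cy][cx] + delta
        self.logodds[cy][cx] = max(self.l_min, min(self.l_max, v))

    def integrate_beam(self, x0, y0, x1, y1):
        """Update cells along a beam from sensor cell (x0, y0) to hit cell (x1, y1).

        Cells the beam passes through become more likely free; the hit cell
        becomes more likely occupied. Traversal uses Bresenham's line algorithm.
        """
        dx, dy = abs(x1 - x0), -abs(y1 - y0)
        sx = 1 if x0 < x1 else -1
        sy = 1 if y0 < y1 else -1
        err = dx + dy
        x, y = x0, y0
        while (x, y) != (x1, y1):
            self._update(x, y, self.l_free)
            e2 = 2 * err
            if e2 >= dy:
                err += dy
                x += sx
            if e2 <= dx:
                err += dx
                y += sy
        self._update(x1, y1, self.l_occ)

    def probability(self, cx, cy):
        """Convert a cell's log-odds back to an occupancy probability."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.logodds[cy][cx]))


grid = OccupancyGrid(10, 10)
for _ in range(3):
    grid.integrate_beam(0, 0, 5, 0)  # sensor at cell (0, 0) repeatedly sees an obstacle at (5, 0)
print(grid.probability(5, 0), grid.probability(2, 0), grid.probability(0, 5))
```

Storing log-odds turns each cell's Bayesian update into a simple addition. Clamping keeps cells from becoming so certain that a moved object could never be cleared from the map.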

By combining these components, localization and mapping systems can provide autonomous agents with a comprehensive understanding of their surroundings, enabling them to operate effectively in complex, real-world environments.

Further Reading

1. Simultaneous localization and mapping. Wikipedia.

2. Five Things You Need to Know About SLAM (Simultaneous Localization and Mapping). BasicAI’s Blog.

3. Visual SLAM in the era of Deep Learning. AI Talks, YouTube.

4. changhao-chen/deep-learning-localization-mapping: A collection of deep learning based localization models. GitHub.

5. Role of AI in Robot Localization and Mapping.