Azure IoT Edge ML modules enable the deployment of machine learning models directly on edge devices, allowing for real-time data processing and inference without relying on constant cloud connectivity. This capability is particularly beneficial in scenarios where bandwidth is limited or where immediate responses are crucial, such as in industrial applications.

Overview of Azure IoT Edge ML Modules

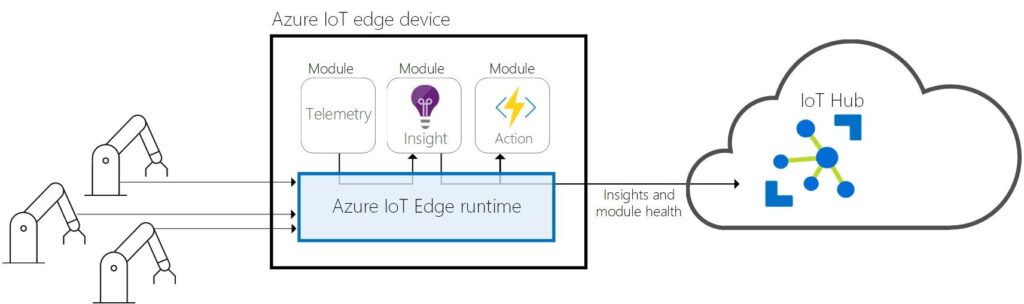

Azure IoT Edge modules are essentially Docker-compatible containers that can run various workloads, including machine learning models. These modules can execute Azure services, third-party services, or custom code locally on IoT devices. This architecture allows for significant reductions in latency and bandwidth usage, as data can be processed at the source rather than being sent to the cloud for analysis[3][4].

Key Features

- Machine Learning Inference: Azure IoT Edge supports the deployment of machine learning models, such as TensorFlow Lite or ONNX models, enabling capabilities like image classification and object detection directly on the device. This is crucial for applications in sectors like manufacturing, where quick decision-making is essential[1].

- Dynamic Model Updates: The IoT Edge architecture allows for the dynamic loading of AI models. This means that models can be updated incrementally, minimizing the amount of data transferred over the network, which is particularly useful in environments with limited connectivity[1].

- Module Twins: Each module has a corresponding module twin, which is a JSON document that stores the state information of the module instance. This allows for easy management and configuration of the modules, enabling them to operate effectively even when offline[2][4].

- Offline Functionality: IoT Edge modules can function offline indefinitely after an initial synchronization with the Azure IoT Hub. This feature ensures that edge devices can continue to operate and process data even in the absence of a stable internet connection[2][3].

- Integration with Azure Services: Azure IoT Edge seamlessly integrates with other Azure services, such as Azure Machine Learning and Azure Stream Analytics, allowing users to leverage existing cloud-based models and analytics capabilities on their edge devices[4].
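
To make the module twin and dynamic model update ideas above more concrete, here is a minimal Python sketch of how a module might merge a desired-properties patch into its local configuration and decide whether to reload its model. It uses only the standard library and is not taken from the Azure SDK. The property names (`model`, `version`, `path`, `threshold`) and the helper names are hypothetical. In a real module the patch would arrive through the device SDK's twin update mechanism rather than being built by hand.

```python
import copy
from typing import Any, Dict


def apply_twin_patch(current: Dict[str, Any], patch: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new config dict with a desired-properties patch merged in."""
    merged = copy.deepcopy(current)
    for key, value in patch.items():
        if key.startswith("$"):
            # Metadata such as $version is not part of the module's own config.
            continue
        if value is None:
            # A null value in a twin patch means "remove this property".
            merged.pop(key, None)
        elif isinstance(value, dict):
            # Merge nested objects key by key instead of replacing them whole.
            base = merged.get(key) if isinstance(merged.get(key), dict) else {}
            merged[key] = apply_twin_patch(base, value)
        else:
            merged[key] = value
    return merged


def model_needs_reload(old: Dict[str, Any], new: Dict[str, Any]) -> bool:
    """Hypothetical check: reload only when the model version changed."""
    return old.get("model", {}).get("version") != new.get("model", {}).get("version")


# Hypothetical local state and an incoming patch.
config = {
    "model": {"version": "1.0", "path": "/models/classifier.onnx"},
    "threshold": 0.5,
}
patch = {"model": {"version": "1.1"}, "threshold": None, "$version": 7}

updated = apply_twin_patch(config, patch)
reload_required = model_needs_reload(config, updated)
```

Because the patch carries only the changed fields, the module can pick up a new model version without the full configuration being resent, which is the property that matters on constrained links.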

Deployment and Management

To deploy a machine learning model as an IoT Edge module, developers typically package the model and its scoring code in a container image and expose inference through a REST API. The deployment process involves creating a deployment manifest that specifies each module's configuration and the routes that govern how messages flow between modules and to IoT Hub[5]. This modular approach not only simplifies the deployment of complex applications but also enhances the scalability and maintainability of IoT solutions.
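
As a rough illustration of the deployment manifest described above, the following Python sketch builds the two parts most relevant to an ML module: the module entry under `$edgeAgent` and a route under `$edgeHub`. The module name, image name, and port are hypothetical, and the result is only a fragment. A complete manifest also needs the schema version, runtime settings, and the `edgeAgent` and `edgeHub` system modules, all omitted here for brevity.

```python
import json
from typing import Any, Dict


def build_module_entry(image: str, create_options: Dict[str, Any]) -> Dict[str, Any]:
    """Describe one containerized module for the $edgeAgent section."""
    return {
        "version": "1.0",
        "type": "docker",
        "status": "running",
        "restartPolicy": "always",
        "settings": {
            "image": image,
            # The manifest carries createOptions as a JSON-encoded string.
            "createOptions": json.dumps(create_options),
        },
    }


def build_manifest_fragment(module_name: str, image: str, port: int) -> Dict[str, Any]:
    """Build a partial manifest: one ML module plus a route to IoT Hub."""
    # Publish the container port so the REST scoring endpoint is reachable on the host.
    create_options = {
        "HostConfig": {"PortBindings": {f"{port}/tcp": [{"HostPort": str(port)}]}}
    }
    # Forward every output of the module upstream to IoT Hub.
    route = f"FROM /messages/modules/{module_name}/outputs/* INTO $upstream"
    return {
        "modulesContent": {
            "$edgeAgent": {
                "properties.desired": {
                    "modules": {module_name: build_module_entry(image, create_options)}
                }
            },
            "$edgeHub": {
                "properties.desired": {"routes": {f"{module_name}ToIoTHub": route}}
            },
        }
    }


# Hypothetical module name, registry, and port.
fragment = build_manifest_fragment(
    "classifier", "myregistry.example.com/classifier:1.0", 5001
)
manifest_json = json.dumps(fragment, indent=2)
```

Generating the manifest from code rather than editing JSON by hand is one way to keep image tags and routes consistent across many devices, though templated JSON files work equally well.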

In summary, Azure IoT Edge ML modules represent a powerful tool for organizations looking to harness the capabilities of machine learning at the edge. By enabling real-time processing and reducing reliance on cloud connectivity, these modules facilitate faster and more efficient IoT solutions across various industries.

Further Reading

1. Enable machine learning inference on an Azure IoT Edge device – Azure Architecture Center | Microsoft Learn

2. Learn how modules run logic on your devices – Azure IoT Edge | Microsoft Learn

3. IoT Edge | Cloud Intelligence | Microsoft Azure

4. What is Azure IoT Edge | Microsoft Learn

5. azure machine learning service – How to deploy a ML model as a local iot edge module – Stack Overflow