Random Forest is a powerful ensemble learning algorithm widely used in machine learning for both classification and regression tasks. It constructs a multitude of decision trees during training and, at prediction time, outputs the class chosen by the most trees (classification) or the mean of the individual trees’ predictions (regression). This typically makes the model more accurate and more robust than a single decision tree.

How Random Forest Works

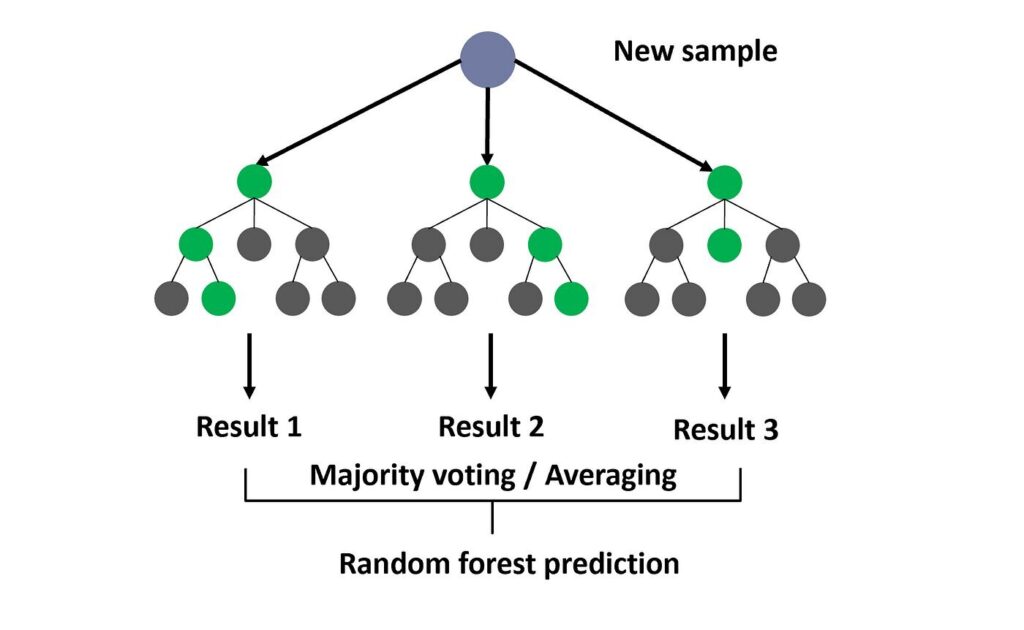

The Random Forest algorithm employs two main techniques to build its ensemble of decision trees:

- Bootstrapping: Each tree is trained on a random sample of the training data, drawn with replacement. Some data points may therefore appear more than once in a tree’s sample while others are left out, which makes the trees differ from one another.

- Feature Randomness: When splitting a node during tree construction, only a random subset of features is considered rather than all available features. This reduces the correlation between trees, which is crucial for the ensemble’s effectiveness.
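
The two sources of randomness above can be sketched in a few lines of plain Python. This is a minimal illustration, not a full tree learner: the helper names `bootstrap_sample` and `random_feature_subset` are hypothetical, and the square-root rule for the number of features per split is an assumed default (a common heuristic for classification), not something the text prescribes.

```python
import math
import random

def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement; also return the rows never drawn."""
    n = len(rows)
    indices = [rng.randrange(n) for _ in range(n)]
    chosen = set(indices)
    sample = [rows[i] for i in indices]
    out_of_bag = [rows[i] for i in range(n) if i not in chosen]
    return sample, out_of_bag

def random_feature_subset(n_features, rng, k=None):
    """Pick k distinct feature indices to consider at a single split."""
    if k is None:
        k = max(1, int(math.sqrt(n_features)))  # assumed default: sqrt heuristic
    return sorted(rng.sample(range(n_features), k))

rng = random.Random(0)
rows = list(range(10))  # stand-in for 10 training rows
sample, oob = bootstrap_sample(rows, rng)
features = random_feature_subset(9, rng)
print("bootstrap sample:", sample)
print("left out:", oob)
print("features considered at this split:", features)
```

Each tree would get its own bootstrap sample, and a fresh feature subset would be drawn at every split inside that tree.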

The final prediction is made by aggregating the predictions from all the trees, which helps to mitigate overfitting—a common issue with individual decision trees[1][3].
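
As a rough sketch of the aggregation step, the snippet below combines per-tree outputs by majority vote for classification and by averaging for regression. The function names and the example votes are made up for illustration; a real forest would obtain these values from its trained trees.

```python
from collections import Counter
from statistics import mean

def aggregate_classification(tree_votes):
    """Return the class label predicted by the most trees."""
    # On a tie, Counter keeps the label that was seen first.
    return Counter(tree_votes).most_common(1)[0][0]

def aggregate_regression(tree_outputs):
    """Return the mean of the trees' numeric predictions."""
    return mean(tree_outputs)

votes = ["fault", "normal", "fault", "fault", "normal"]  # one vote per tree
outputs = [2.0, 3.0, 4.0]                                # one value per tree

print(aggregate_classification(votes))  # fault
print(aggregate_regression(outputs))    # 3.0
```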

Advantages of Random Forest

- High Accuracy: Random Forest often provides high accuracy due to its ensemble nature, which reduces variance and overfitting.

- Robustness: It is less sensitive to noise than a single decision tree and can handle large, high-dimensional datasets.

- Feature Importance: Random Forest can provide insights into the importance of different features in the prediction process, aiding in feature selection and model interpretation[2][4].

- Versatility: The algorithm can be applied to various domains, including healthcare, finance, and marketing, making it a popular choice among data scientists[2][5].
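
To make the feature-importance point concrete, here is a minimal sketch of permutation importance, one common way to score features: shuffle one feature column at a time and measure how much accuracy drops. Everything here is an assumption for illustration. In particular, `toy_predict` is a hypothetical stand-in for a fitted forest that only looks at feature 0, and the data is randomly generated.

```python
import random

def accuracy(predict, X, y):
    """Fraction of rows for which predict(row) equals the true label."""
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(predict, X, y, rng):
    """Accuracy drop observed when each feature column is shuffled in turn."""
    baseline = accuracy(predict, X, y)
    drops = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)  # break the link between feature j and the labels
        X_shuffled = [row[:j] + [value] + row[j + 1:]
                      for row, value in zip(X, column)]
        drops.append(baseline - accuracy(predict, X_shuffled, y))
    return drops

# Hypothetical stand-in for a trained forest: it uses feature 0 only.
def toy_predict(row):
    return int(row[0] > 0.5)

rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(200)]
y = [int(row[0] > 0.5) for row in X]

importances = permutation_importance(toy_predict, X, y, rng)
print(importances)
```

Shuffling feature 0 hurts accuracy noticeably, while shuffling feature 1 changes nothing because the stand-in model never reads it. Libraries often expose importance scores directly; scikit-learn's forest estimators, for example, provide a `feature_importances_` attribute after fitting.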

Applications

Random Forest has been successfully implemented in numerous fields, including:

- Healthcare: For predicting patient outcomes based on various clinical features.

- Finance: In credit scoring and risk assessment.

- Marketing: For customer segmentation and predicting customer behavior.

Overall, Random Forest stands out as a flexible and easy-to-use algorithm that frequently delivers strong results without extensive hyperparameter tuning, making it a go-to choice for many machine learning practitioners[3][5].

Further Reading

1. What Is Random Forest? | IBM

2. https://einsteinmed.edu/uploadedfiles/centers/ictr/new/intro-to-random-forest.pdf

3. Random Forest: A Complete Guide for Machine Learning | Built In

4. Random forest – Wikipedia

5. An Introduction to Random Forest | by Houtao Deng | Towards Data Science

Description:

Ensemble method using multiple decision trees to improve prediction accuracy.

IoT Scenes:

Anomaly detection, predictive maintenance, classification tasks, and resource management.

Anomaly Detection: Identifying anomalies in sensor data through ensemble learning.

Predictive Maintenance: Predicting equipment failures and maintenance needs.

Classification Tasks: Categorizing data from IoT sensors into predefined classes.

Resource Management: Optimizing resource allocation and usage based on data analysis.