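ResNet (Residual Networks) is a groundbreaking deep learning architecture introduced in 2015 by researchers at Microsoft Research[1][3]. It addresses the difficulty of optimizing very deep neural networks. The original paper calls this the *degradation* problem: as layers are added to a plain (non-residual) network, even its training accuracy saturates and then drops. Residual learning makes it practical to train networks with hundreds of layers[2].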

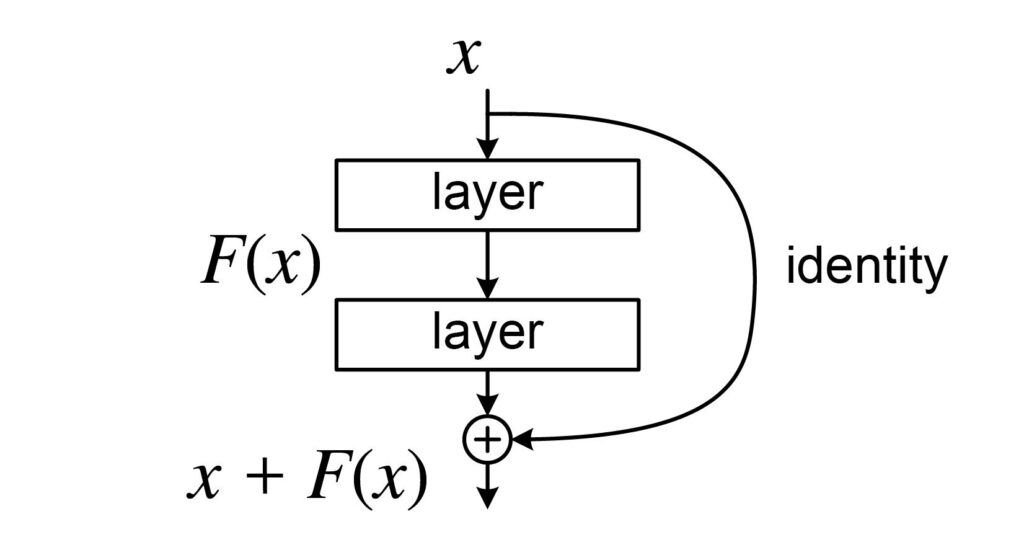

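The key innovation of ResNet is the introduction of “skip connections” (also called “shortcut connections”) that bypass one or more layers[1][3]. These connections let the network learn residual functions with reference to the layer inputs, rather than learning the entire underlying mapping directly[3]. Suppose the desired mapping is close to the identity. The stacked layers then only need to push the residual toward zero, which is easier than fitting an identity mapping with a stack of nonlinear layers. This approach enables much deeper networks to be trained effectively.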

The basic building block of ResNet is the residual block, which can be expressed as:

$$y = F(x) + x$$

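where $x$ is the input to the block, $F(x)$ is the residual function learned by the stacked layers, and $y$ is the output[3]. The addition of $x$ represents the skip connection. Because the addition is element-wise, $F(x)$ and $x$ must have the same dimensions. When a block changes the number of channels or the spatial resolution, the original paper replaces the identity shortcut with a linear projection $W_s x$, giving $y = F(x) + W_s x$.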
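The residual block equation can be made concrete with a small NumPy sketch. It is a hypothetical fully connected stand-in, not the paper's convolutional block. Here $F(x)$ is assumed to be two weight layers with a ReLU between them (`w1`, `w2`). The optional `w_s` is a projection shortcut, used only when $F(x)$ and $x$ differ in size. All names are illustrative.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_block(x, w1, w2, w_s=None):
    """Hypothetical fully connected residual block: y = F(x) + shortcut(x).

    F(x) = w2 @ relu(w1 @ x) stands in for the stacked layers; real ResNets
    use convolutions with batch normalization instead.
    w_s is an optional projection, needed only when F(x) and x differ in size.
    """
    f = w2 @ relu(w1 @ x)                     # residual function F(x)
    shortcut = x if w_s is None else w_s @ x  # identity or projection shortcut
    return f + shortcut                       # element-wise addition

rng = np.random.default_rng(0)
x = rng.standard_normal(4)
w1 = rng.standard_normal((8, 4))

# With the last layer's weights at zero, F(x) = 0 and the block is exactly the identity.
y_identity = residual_block(x, w1, np.zeros((4, 8)))
print(np.allclose(y_identity, x))  # True

# Changing the output width from 4 to 6 requires a projection shortcut.
w2 = rng.standard_normal((6, 8))
w_s = rng.standard_normal((6, 4))
print(residual_block(x, w1, w2, w_s).shape)  # (6,)
```

The zero-weight case shows why an identity mapping is easy for a residual block to represent: the layers only have to output zero.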

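ResNet comes in several depths. The original paper describes 18-, 34-, 50-, 101-, and 152-layer versions, and ResNet-50 and ResNet-101 are popular variants[4]. These networks are constructed by stacking residual blocks. ResNet-18 and ResNet-34 use blocks of two 3×3 convolutions, while ResNet-50 and deeper use three-layer “bottleneck” blocks (1×1, 3×3, and 1×1 convolutions).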
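The number in each variant's name counts its weighted layers. The following sketch reproduces it from the stage configurations in Table 1 of the original paper[1]: four stages of residual blocks, with two layers per block for the shallower models and three per “bottleneck” block for ResNet-50 and deeper. It assumes the count includes the initial 7×7 convolution and the final fully connected layer, and excludes projection shortcuts. The helper name `weighted_depth` is hypothetical.

```python
# (layers per residual block, number of blocks in each of the four stages),
# following Table 1 of He et al. (2015).
RESNET_CONFIGS = {
    "ResNet-18": (2, [2, 2, 2, 2]),    # basic block: two 3x3 convs
    "ResNet-34": (2, [3, 4, 6, 3]),
    "ResNet-50": (3, [3, 4, 6, 3]),    # bottleneck block: 1x1, 3x3, 1x1 convs
    "ResNet-101": (3, [3, 4, 23, 3]),
    "ResNet-152": (3, [3, 8, 36, 3]),
}

def weighted_depth(layers_per_block, blocks_per_stage):
    """Count weighted layers: stem conv + all block layers + final fc layer.

    Projection shortcuts are not counted (an assumption matching the names).
    """
    stem_conv = 1
    final_fc = 1
    return stem_conv + layers_per_block * sum(blocks_per_stage) + final_fc

for name, (lpb, stages) in RESNET_CONFIGS.items():
    print(name, weighted_depth(lpb, stages))
# ResNet-18 18
# ResNet-34 34
# ResNet-50 50
# ResNet-101 101
# ResNet-152 152
```

ResNet-34 and ResNet-50 share the same stage layout. The difference in depth comes entirely from swapping two-layer blocks for three-layer bottleneck blocks.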

Key benefits of ResNet include:

- Ability to train very deep networks (over 100 layers) without the accuracy degradation seen in plain networks of the same depth[1][2]

- Improved gradient flow through the network[3]

- State-of-the-art results on several computer vision tasks at the time of publication, including first place in the ILSVRC 2015 image classification challenge[1][4]
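The gradient-flow benefit can be made concrete with the derivation in [3]. It assumes every block uses an identity shortcut and applies nothing (no activation) after the addition, so $x_{l+1} = x_l + F(x_l)$. Unrolling from a shallower block $l$ to a deeper block $L$, with $\mathcal{E}$ the training loss, gives:

$$x_L = x_l + \sum_{i=l}^{L-1} F(x_i)$$

$$\frac{\partial \mathcal{E}}{\partial x_l} = \frac{\partial \mathcal{E}}{\partial x_L}\left(1 + \frac{\partial}{\partial x_l}\sum_{i=l}^{L-1} F(x_i)\right)$$

The constant term $1$ (an identity matrix when $x$ is a vector) carries the gradient from block $L$ straight back to block $l$. In a plain network, by contrast, the gradient is a product of many per-layer factors that can shrink toward zero.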

ResNet’s impact extends beyond computer vision. Its principles have been applied to recurrent neural networks, transformers, and graph neural networks[5]. The residual learning approach has become a fundamental concept in deep learning, influencing the design of many subsequent architectures.
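As one example of this reuse, Vaswani et al.[5] wrap each Transformer sublayer in a residual connection followed by layer normalization, computing $\mathrm{LayerNorm}(x + \mathrm{Sublayer}(x))$. Below is a minimal NumPy sketch of that pattern. The feed-forward `ffn` is a hypothetical stand-in for the attention or feed-forward sublayer. The learned gain and bias of layer normalization are omitted, and `eps=1e-6` is an assumed value.

```python
import numpy as np

def layer_norm(x, eps=1e-6):
    """Normalize each row to zero mean and unit variance (learned gain/bias omitted)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def residual_sublayer(x, sublayer):
    """Post-norm residual connection: LayerNorm(x + Sublayer(x))."""
    return layer_norm(x + sublayer(x))

# Hypothetical position-wise feed-forward sublayer (illustrative weights).
rng = np.random.default_rng(0)
w = rng.standard_normal((4, 4)) * 0.1
ffn = lambda h: np.maximum(h @ w, 0.0)

tokens = rng.standard_normal((3, 4))  # 3 tokens, model width 4
out = residual_sublayer(tokens, ffn)
print(out.shape)  # (3, 4)
```

The skip path is the same $y = F(x) + x$ addition as in a ResNet block. The Transformer adds only the normalization applied after it.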

References

[1] He, K., Zhang, X., Ren, S., & Sun, J. (2015). Deep Residual Learning for Image Recognition. arXiv:1512.03385.

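[2] Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems 25 (NIPS 2012).

[3] He, K., Zhang, X., Ren, S., & Sun, J. (2016). Identity Mappings in Deep Residual Networks. European Conference on Computer Vision (ECCV 2016). arXiv:1603.05027.

[4] Chollet, F. (n.d.). deep-learning-models (GitHub repository), resnet50.py. https://github.com/fchollet/deep-learning-models/blob/master/resnet50.py

[5] Vaswani, A., et al. (2017). Attention Is All You Need. Advances in Neural Information Processing Systems 30 (NIPS 2017). arXiv:1706.03762.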

Further Reading

1. "Residual Networks (ResNet) – Deep Learning", GeeksforGeeks

2. Connor Shorten, "Introduction to ResNets", Towards Data Science

3. "Residual neural network", Wikipedia

4. Muhammad Rizwan Khan, "Residual Networks (ResNets)", Towards Data Science

5. "8.6. Residual Networks (ResNet) and ResNeXt", Dive into Deep Learning 1.0.3 documentation