RetinaNet is a one-stage object detection model introduced by Facebook AI Research (FAIR). It narrows the accuracy gap between one-stage and two-stage detectors by combining two key ideas: a Feature Pyramid Network (FPN) backbone and Focal Loss.

Feature Pyramid Network (FPN)

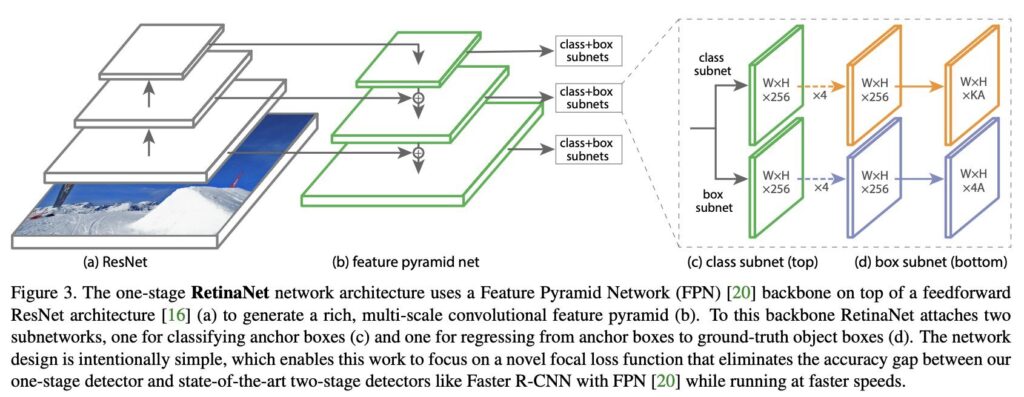

The FPN is built on top of a ResNet backbone and produces a multi-scale feature pyramid from a single-resolution input image. A top-down pathway upsamples the coarse, semantically strong feature maps and merges them, through lateral connections, with the finer feature maps from the backbone. Each pyramid level can then be used to detect objects at a different scale, which improves detection across a wide range of object sizes[1][2][4].
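The top-down merge described above can be illustrated with a minimal pure-Python sketch. It is a toy under stated assumptions, not the actual implementation: feature maps are single-channel 2D lists, each level is exactly half the size of the previous one, and the 1x1 and 3x3 convolutions that a real FPN applies around the merge are omitted. The names `upsample2x` and `top_down_merge` are hypothetical.

```python
def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of a 2D feature map (list of rows)."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]  # repeat each value horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat each row vertically
    return out


def top_down_merge(laterals):
    """Merge lateral maps ordered fine -> coarse; return merged maps in the same order.

    Assumption: each map is exactly half the height and width of the one before it.
    """
    merged = [laterals[-1]]                        # the coarsest level starts the pathway
    for lateral in reversed(laterals[:-1]):
        up = upsample2x(merged[0])                 # bring the coarser map to this resolution
        summed = [[a + b for a, b in zip(r1, r2)]  # element-wise lateral addition
                  for r1, r2 in zip(lateral, up)]
        merged.insert(0, summed)
    return merged


# Toy backbone outputs: 4x4, 2x2 and 1x1 maps with constant values.
c3 = [[1] * 4 for _ in range(4)]
c4 = [[10] * 2 for _ in range(2)]
c5 = [[100]]
pyramid = top_down_merge([c3, c4, c5])
print([len(level) for level in pyramid])
```

Because the values at the finest level accumulate contributions from every coarser level, the sketch shows how information from the top of the pyramid reaches the high-resolution maps.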

Focal Loss

Focal Loss is designed to handle the extreme class imbalance problem in one-stage detectors. Traditional loss functions like Cross-Entropy Loss can be overwhelmed by the vast number of easy negative samples. Focal Loss reduces the loss contribution from these easy examples and focuses more on hard, misclassified examples, thereby improving the model’s accuracy[1][2][5].
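To make the down-weighting concrete, here is a minimal sketch of the binary focal loss, FL(p_t) = -alpha_t * (1 - p_t)^gamma * log(p_t), next to plain cross-entropy. The defaults gamma = 2 and alpha = 0.25 are the values reported in the RetinaNet paper. The function names are hypothetical, and the code assumes 0 < p < 1, with no numerical clamping.

```python
import math


def cross_entropy(p, y):
    """Binary cross-entropy for predicted foreground probability p and label y (0 or 1)."""
    p_t = p if y == 1 else 1.0 - p      # probability assigned to the true class
    return -math.log(p_t)


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss: cross-entropy scaled by alpha_t * (1 - p_t) ** gamma."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# An easy negative (background predicted with p = 0.01) versus a hard negative (p = 0.9).
for p in (0.01, 0.9):
    ce, fl = cross_entropy(p, 0), focal_loss(p, 0)
    print(f"p={p}: CE={ce:.5f}  FL={fl:.7f}  FL/CE={fl / ce:.6f}")
```

The ratio FL/CE equals alpha_t * (1 - p_t)^gamma, so the well-classified negative keeps only a tiny fraction of its cross-entropy loss, while the misclassified one keeps most of it.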

Architecture

RetinaNet’s architecture consists of three main components:

1. Backbone Network: Typically a ResNet, which computes convolutional feature maps over the entire image.

2. Classification Subnet: A fully convolutional network (FCN) attached to each pyramid level that predicts, at each spatial location, the probability of each object class being present for each anchor box.

3. Regression Subnet: Another FCN, run in parallel with the classification subnet, that regresses the offset from each anchor box to a nearby ground-truth object, if one exists[1][4][5].
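The relationship between an anchor box and the regression subnet's output can be sketched as follows. This is a minimal example that assumes the standard R-CNN box parameterization (center shifts scaled by the anchor size, log-space width and height). The function name `decode_box` and the corner-coordinate box format are assumptions for illustration, not taken from any particular library.

```python
import math


def decode_box(anchor, deltas):
    """Apply predicted offsets (dx, dy, dw, dh) to an anchor given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = anchor
    aw, ah = ax2 - ax1, ay2 - ay1                  # anchor width and height
    acx, acy = ax1 + 0.5 * aw, ay1 + 0.5 * ah      # anchor center
    dx, dy, dw, dh = deltas
    cx, cy = acx + dx * aw, acy + dy * ah          # shift the center, relative to anchor size
    w, h = aw * math.exp(dw), ah * math.exp(dh)    # rescale width and height in log space
    return (cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h)


anchor = (0.0, 0.0, 10.0, 10.0)
print(decode_box(anchor, (0.0, 0.0, 0.0, 0.0)))   # zero offsets leave the anchor unchanged
print(decode_box(anchor, (0.1, 0.0, 0.0, 0.0)))   # shifts the box right by 10% of its width
```

Predicting offsets relative to the anchor, rather than absolute coordinates, keeps the regression targets in a similar numeric range for small and large boxes.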

Performance

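When it was published, RetinaNet surpassed the accuracy of existing two-stage detectors such as Faster R-CNN on the COCO benchmark while keeping the speed of a one-stage design. This balance between speed and precision makes it a candidate for latency-sensitive applications such as autonomous driving and robotics, although actual throughput depends on the backbone, input resolution, and hardware[2][5].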

Implementation

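Several frameworks provide a RetinaNet implementation. With arcgis.learn, for example, a model can be created as follows:

```python
from arcgis.learn import RetinaNet
model = RetinaNet(data, backbone='resnet50')  # `data` is the training data prepared beforehand
```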

Key parameters include the data prepared for training, the backbone model (e.g., ResNet50), and the scales and aspect ratios of the anchor boxes[1].
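To show how scales and aspect ratios combine into a set of anchor boxes, here is a minimal sketch that enumerates anchor shapes for one feature-map location. It is an illustration only and does not reflect how arcgis.learn interprets its parameters. The function name `anchor_shapes`, the `base_size` argument, and the convention that a ratio means height divided by width are all assumptions. The example values (three scales and three ratios, giving nine anchors) follow the RetinaNet paper.

```python
import math


def anchor_shapes(base_size, scales, ratios):
    """Return (width, height) pairs for every combination of scale and aspect ratio.

    Assumption: ratio = height / width, and each scale multiplies the side of a
    square reference anchor of `base_size` pixels, so the area is preserved
    across aspect ratios.
    """
    shapes = []
    for ratio in ratios:
        for scale in scales:
            area = (base_size * scale) ** 2
            width = math.sqrt(area / ratio)
            height = width * ratio
            shapes.append((width, height))
    return shapes


scales = [2 ** 0, 2 ** (1 / 3), 2 ** (2 / 3)]
ratios = [0.5, 1.0, 2.0]
for width, height in anchor_shapes(32, scales, ratios):
    print(f"{width:7.2f} x {height:7.2f}")
```

Adding more scales or ratios increases the number of anchors per location, which can improve coverage of unusual object shapes at the cost of more predictions to classify and regress.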

Conclusion

RetinaNet’s combination of FPN and Focal Loss enables it to handle dense scenes and small objects effectively, which has made it a strong and widely used one-stage object detection model[1][2][5].

References

- ArcGIS API for Python: How RetinaNet works [1]

- Towards Data Science: Review: RetinaNet — Focal Loss (Object Detection) [2]

- Towards Data Science: RetinaNet: The beauty of Focal Loss [4]

- Viso.ai: RetinaNet: Single-Stage Object Detector with Accuracy Focus [5]

Further Reading

1. How RetinaNet works? | ArcGIS API for Python

2. Review: RetinaNet — Focal Loss (Object Detection) | by Sik-Ho Tsang | Towards Data Science

3. RetinaNet Explained and Demystified – Zenggyu的博客

4. RetinaNet: The beauty of Focal Loss | by Preeyonuj Boruah | Towards Data Science

5. RetinaNet: Single-Stage Object Detector with Accuracy Focus – viso.ai