RNN-Based Models: Capturing Sequential Dependencies

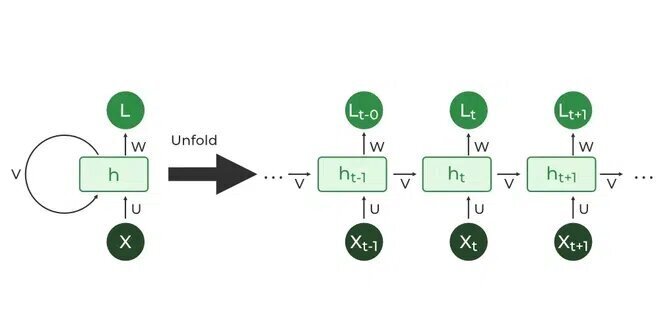

Recurrent Neural Networks (RNNs) are a class of artificial neural networks designed to process sequential data by maintaining an internal state, or “memory”[1]. Unlike feedforward networks, which map each input to an output independently, RNNs feed their internal state back in at every step, so each output can depend on the inputs that came before it. This makes them well suited to tasks involving time series or natural language[2].

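The basic RNN architecture maintains a hidden state that is updated at each time step from the current input and the previous hidden state, which lets the network capture dependencies over time[1]. However, basic RNNs often struggle with long-term dependencies because of the vanishing gradient problem: during backpropagation through time, the gradient is multiplied by one factor per time step, and when these factors are smaller than one it shrinks exponentially, so distant inputs barely influence learning[3].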
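To make the recurrence concrete, here is a minimal sketch of a vanilla RNN with a single hidden unit, assuming the common update h_t = tanh(w_x·x_t + w_h·h_{t-1} + b). The function names `rnn_forward` and `grad_last_wrt_first` and all weight values are illustrative assumptions, not taken from the sources. The second function multiplies the per-step derivatives to show how the gradient vanishes over long sequences.

```python
import math


def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Run a single-unit vanilla RNN over xs and return every hidden state.

    Illustrative sketch (hypothetical helper): hidden size 1, caller-chosen weights.
    """
    h = h0
    hs = []
    for x in xs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        hs.append(h)
    return hs


def grad_last_wrt_first(hs, w_h):
    """Return d h_T / d h_1 for the single-unit RNN above.

    Backpropagation through time multiplies one factor per step:
    d h_t / d h_{t-1} = w_h * (1 - h_t**2), because tanh'(z) = 1 - tanh(z)**2.
    """
    g = 1.0
    for h in hs[1:]:  # h_2 ... h_T
        g *= w_h * (1.0 - h * h)
    return g


if __name__ == "__main__":
    hs = rnn_forward([0.5] * 50, w_x=1.0, w_h=0.5, b=0.0)
    for T in (5, 20, 50):
        # The gradient magnitude drops sharply as T grows.
        print(T, grad_last_wrt_first(hs[:T], w_h=0.5))
```

With w_h = 0.5, every per-step factor is at most 0.5 in magnitude, so the gradient from step 50 back to step 1 is bounded by 0.5^49. Real networks use weight matrices instead of scalars, but the same repeated multiplication is what causes the gradient to shrink.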

To address this limitation, more advanced RNN variants have been developed:

- Long Short-Term Memory (LSTM): LSTMs add a cell state controlled by input, forget, and output gates, a mechanism that allows the network to selectively remember or forget information over long periods[4].

- Gated Recurrent Unit (GRU): A simplified variant of the LSTM that uses only an update gate and a reset gate, and therefore has fewer parameters. GRUs have shown comparable performance in many tasks[2].

- Bidirectional RNNs: These process a sequence in both the forward and backward directions and combine the results, so each position has context from both earlier and later inputs[5].
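The gating described in the list above can be sketched with single-unit cells. This is a simplified illustration under stated assumptions: hidden size 1, one bias per gate, and the GRU update written as h_t = (1 - z_t)·h_{t-1} + z_t·n_t (papers and libraries differ on which of z_t and 1 - z_t multiplies the old state). `Gate`, `lstm_step`, `gru_step`, `lstm_params`, and `gru_params` are hypothetical names.

```python
import math
from dataclasses import dataclass


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class Gate:
    """Hypothetical per-gate parameters for a single-unit cell."""
    w: float  # input weight
    u: float  # recurrent weight
    b: float  # bias

    def pre(self, x, h):
        return self.w * x + self.u * h + self.b


def lstm_step(x, h, c, f, i, o, g):
    """One single-unit LSTM step; f, i, o, g are Gate parameters."""
    f_t = sigmoid(f.pre(x, h))    # forget gate: how much of the old cell to keep
    i_t = sigmoid(i.pre(x, h))    # input gate: how much new content to write
    o_t = sigmoid(o.pre(x, h))    # output gate: how much of the cell to expose
    g_t = math.tanh(g.pre(x, h))  # candidate cell content
    c_new = f_t * c + i_t * g_t   # additive update of the cell state
    h_new = o_t * math.tanh(c_new)
    return h_new, c_new


def gru_step(x, h, z, r, n):
    """One single-unit GRU step; z (update), r (reset), n (candidate) are Gates."""
    z_t = sigmoid(z.pre(x, h))
    r_t = sigmoid(r.pre(x, h))
    # The reset gate scales how much of the old state feeds the candidate.
    n_t = math.tanh(n.w * x + n.u * (r_t * h) + n.b)
    return (1.0 - z_t) * h + z_t * n_t


def lstm_params(input_size, hidden_size):
    # Four gate blocks, each with input weights, recurrent weights, and a bias.
    return 4 * (hidden_size * input_size + hidden_size * hidden_size + hidden_size)


def gru_params(input_size, hidden_size):
    # Three gate blocks with the same shapes.
    return 3 * (hidden_size * input_size + hidden_size * hidden_size + hidden_size)
```

The parameter counts reflect the LSTM computing four gate blocks and the GRU three; for an input size of 100 and a hidden size of 128 they give 117,248 versus 87,936. Libraries that keep two bias vectors per gate report slightly higher numbers.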

RNN-based models have been applied successfully in a range of domains:

- Natural Language Processing: Machine translation, sentiment analysis, and text generation[1][5].

- Speech Recognition: Converting spoken language into text[2].

- Time Series Prediction: Forecasting stock prices or weather patterns[3].
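For time series prediction, a common way to frame the data is to slide a fixed-length window over the series and train the RNN to predict the value that follows each window. The sketch below assumes a univariate series and a single-unit many-to-one RNN with a linear readout; `make_windows`, `predict_next`, and their weights are hypothetical, and no training step is shown.

```python
import math


def make_windows(series, window):
    """Split a 1-D series into (input window, next value) training pairs."""
    if window < 1 or window >= len(series):
        raise ValueError("window must be between 1 and len(series) - 1")
    pairs = []
    for start in range(len(series) - window):
        inputs = series[start:start + window]
        target = series[start + window]
        pairs.append((inputs, target))
    return pairs


def predict_next(window, w_x, w_h, b, w_out, b_out):
    """Many-to-one: run a single-unit RNN over the window, then read out one value."""
    h = 0.0
    for x in window:
        h = math.tanh(w_x * x + w_h * h + b)
    # Only the final hidden state is used for the forecast.
    return w_out * h + b_out
```

For example, `make_windows([1, 2, 3, 4, 5], 3)` returns `[([1, 2, 3], 4), ([2, 3, 4], 5)]`. In practice the weights would be learned by minimizing the error between `predict_next(inputs, ...)` and each `target`.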

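Despite their effectiveness, RNNs are computationally expensive to train and hard to parallelize: because each hidden state depends on the previous one, the time steps of a sequence must be processed one after another. Attention mechanisms, originally introduced to help RNN encoder-decoder models focus on the relevant parts of the input, and transformer architectures, which replace recurrence entirely with self-attention, address these issues by relating all positions of a sequence to one another at once[4][5].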
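The parallelization difference shows up when the RNN update, where step t cannot start until h_{t-1} is known, is contrasted with scaled dot-product self-attention. The sketch below is a simplification that assumes queries, keys, and values are the raw input vectors (real transformers apply learned projections and use multiple heads); `self_attention` is a hypothetical helper, not a library API.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs):
    """Scaled dot-product self-attention with Q = K = V = xs (no learned projections)."""
    d = len(xs[0])
    out = []
    for q in xs:
        # Each position only reads the inputs, never another position's output,
        # so these iterations are independent and could run in parallel.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in xs]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, xs)) for j in range(d)])
    return out
```

Because no iteration of the outer loop depends on another iteration's result, all positions can be computed at once, which is why transformers express this step as matrix multiplications.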

Further Reading

1. Introduction to Recurrent Neural Network | GeeksforGeeks: https://www.geeksforgeeks.org/introduction-to-recurrent-neural-network/

2. Introduction to RNN and LSTM

3. An Introduction to Recurrent Neural Networks and the Math That Powers Them | MachineLearningMastery.com

4. Recurrent Neural Network Tutorial (RNN) | DataCamp

5. What Is a Recurrent Neural Network (RNN)? | IBM