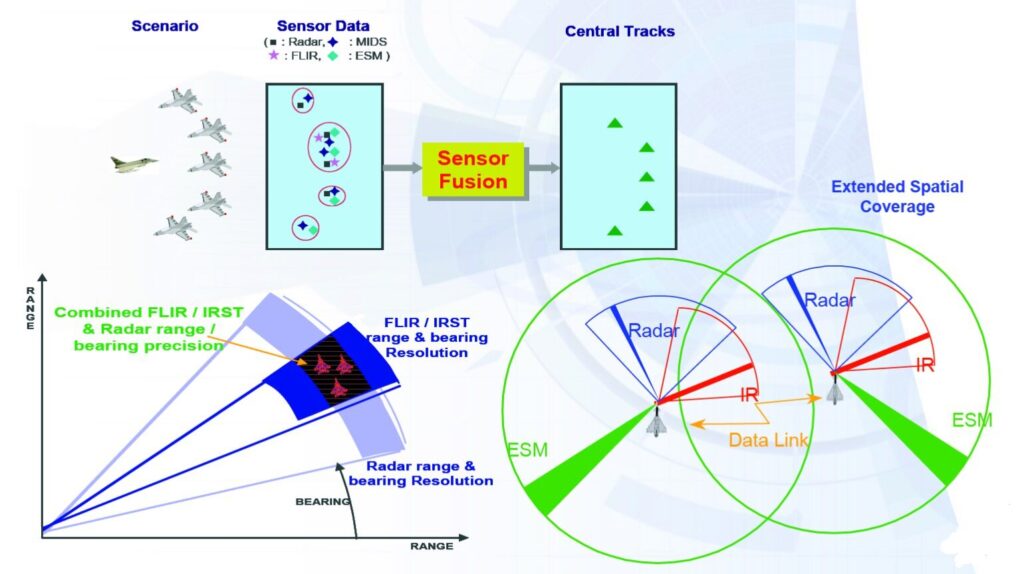

Sensor fusion combines data from multiple sensors to produce information about the environment that is more accurate and reliable than any single sensor could provide. This process is essential in various applications, including robotics, autonomous vehicles, and smart cities. By integrating data from different sources, sensor fusion reduces uncertainty and improves decision-making.

Key Techniques in Sensor Fusion

Kalman Filter

The Kalman filter is one of the most widely used algorithms in sensor fusion. It provides a recursive solution to the linear estimation problem: for a linear system with Gaussian process and measurement noise, it yields the minimum mean-square-error estimate of the state while integrating noisy sensor measurements over time. The filter alternates two steps, prediction and update. In the prediction step, it propagates the previous state estimate and its uncertainty through a mathematical model of the system dynamics. In the update step, it incorporates a new measurement, weighting it against the prediction according to their relative uncertainties (the Kalman gain), to refine the estimate. The extended Kalman filter (EKF) is a variant that handles nonlinear systems by linearizing the models around the current estimate, which makes it suitable for many real-world applications, such as fusing GPS with an inertial navigation system (INS)[2][5].
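The predict/update cycle can be sketched for the simplest possible case: a scalar state (for example, a position along one axis) that follows an assumed random-walk model and is measured directly by two hypothetical sensors with different noise variances. The names `ScalarKalman` and `fuse_two_sensors` and all numbers are illustrative assumptions, not taken from the cited sources; a GPS/INS filter would use vector states, matrices, and (for the EKF) Jacobians of nonlinear models.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class ScalarKalman:
    x: float  # current state estimate
    p: float  # variance of the estimate
    q: float  # process-noise variance of the assumed random-walk model

    def predict(self) -> None:
        # Random-walk model x_k = x_{k-1} + w_k with w_k ~ N(0, q):
        # the mean is carried forward and the uncertainty grows by q.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # Kalman gain: how much to trust measurement z (noise variance r)
        # relative to the current prediction (variance p).
        k = self.p / (self.p + r)
        self.x += k * (z - self.x)  # correct with the innovation z - x
        self.p *= 1.0 - k           # posterior variance shrinks


def fuse_two_sensors(
    readings: Sequence[Tuple[float, float]],
    r_a: float,
    r_b: float,
    x0: float = 0.0,
    p0: float = 1.0,
    q: float = 0.0,
) -> List[Tuple[float, float]]:
    """Fuse paired readings (z_a, z_b) from two hypothetical sensors A and B."""
    kf = ScalarKalman(x0, p0, q)
    history = []
    for z_a, z_b in readings:
        kf.predict()
        kf.update(z_a, r_a)  # sequential updates: one per sensor
        kf.update(z_b, r_b)
        history.append((kf.x, kf.p))
    return history


if __name__ == "__main__":
    # Sensor A is noisy (r = 4.0); sensor B is precise (r = 0.25).
    readings = [(5.8, 5.1), (4.3, 4.9), (5.5, 5.0)]
    for x, p in fuse_two_sensors(readings, r_a=4.0, r_b=0.25):
        print(f"estimate={x:.3f}  variance={p:.4f}")
```

Applying one update per sensor in sequence is equivalent to a single joint update when the two sensors' noises are independent. With `q = 0` the fused estimate reduces to an inverse-variance weighted average of the prior and all readings, so the more precise sensor B dominates.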

FusionNet and Deep Learning Approaches

Recent advancements in artificial intelligence have led to the development of deep learning models like FusionNet, which can process and fuse data from various sensors simultaneously. These models leverage convolutional neural networks (CNNs) to extract features from different modalities, such as images and LIDAR data, enabling them to learn complex patterns and relationships in the data. This approach is particularly beneficial in autonomous driving, where it is crucial to integrate information from cameras, radar, and other sensors to create a comprehensive understanding of the environment[3][4].
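The feature-level fusion idea can be illustrated with a deliberately tiny, untrained two-branch network written in plain Python. This is not FusionNet or any published architecture: each modality gets its own encoder (a 1-D convolution, ReLU, and global max pooling), the resulting feature vectors are concatenated, and a shared linear head produces a single score. The function names, the 1-D inputs, and the hand-picked weights are hypothetical; real systems use 2-D/3-D convolutions in a deep learning framework and learn the weights from data.

```python
from typing import List, Sequence

Vector = List[float]


def conv1d(signal: Sequence[float], kernel: Sequence[float]) -> Vector:
    # 'Valid' 1-D cross-correlation (no padding), the operation a CNN layer computes.
    n, k = len(signal), len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k)) for i in range(n - k + 1)]


def encode(signal: Sequence[float], kernels: Sequence[Sequence[float]]) -> Vector:
    # Modality-specific encoder: conv -> ReLU -> global max pooling, one feature per kernel.
    return [max(max(0.0, a) for a in conv1d(signal, kern)) for kern in kernels]


def fused_score(
    camera_row: Sequence[float],
    lidar_row: Sequence[float],
    cam_kernels: Sequence[Sequence[float]],
    lidar_kernels: Sequence[Sequence[float]],
    head_weights: Sequence[float],
    head_bias: float,
) -> float:
    # Feature-level fusion: encode each modality separately, concatenate the
    # feature vectors, then apply a shared linear head to the joint vector.
    features = encode(camera_row, cam_kernels) + encode(lidar_row, lidar_kernels)
    if len(features) != len(head_weights):
        raise ValueError("head_weights must match the number of fused features")
    return sum(w * f for w, f in zip(head_weights, features)) + head_bias


if __name__ == "__main__":
    # Hand-picked, untrained weights purely for illustration.
    score = fused_score(
        camera_row=[0.0, 1.0, 0.0, 0.0],  # e.g. one row of image intensities
        lidar_row=[2.0, 2.0, 5.0],         # e.g. depths along the same direction
        cam_kernels=[[1.0, -1.0]],         # responds to a falling intensity edge
        lidar_kernels=[[-1.0, 1.0]],       # responds to a jump in depth
        head_weights=[0.5, 0.25],
        head_bias=0.1,
    )
    print(score)
```

Fusing at the feature level, rather than averaging each branch's final output, lets the head learn interactions between modalities, such as an image edge that coincides with a depth discontinuity.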

Particle Filters

Another significant technique in sensor fusion is the particle filter, which is particularly effective for nonlinear and non-Gaussian problems. Whereas the Kalman filter assumes Gaussian distributions, a particle filter represents the probability distribution of the state with a set of samples (particles). At each step, the particles are propagated through the motion model, weighted by the likelihood of the observed measurement, and then typically resampled so that computation concentrates on likely states. This allows robust tracking and estimation even in challenging conditions[5].
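The propagate-weight-resample loop can be sketched as a bootstrap particle filter for a scalar state. The random-walk motion model, the Gaussian measurement likelihood, the uniform initial range, and all parameter values are assumptions chosen for illustration; any of them can be replaced by nonlinear or non-Gaussian models without changing the structure of the loop (the uniform initial belief is itself already non-Gaussian).

```python
import math
import random
from typing import List, Sequence


def bootstrap_particle_filter(
    measurements: Sequence[float],
    n_particles: int = 1000,
    process_std: float = 0.05,
    meas_std: float = 1.0,
    seed: int = 0,
) -> List[float]:
    """Track a scalar state observed directly through Gaussian noise (assumed models)."""
    rng = random.Random(seed)
    # Broad initial belief: particles drawn uniformly from an assumed range [-10, 10].
    particles = [rng.uniform(-10.0, 10.0) for _ in range(n_particles)]
    estimates = []
    for z in measurements:
        # 1. Predict: push every particle through the random-walk motion model.
        particles = [x + rng.gauss(0.0, process_std) for x in particles]
        # 2. Weight: likelihood of measurement z given each particle's state.
        weights = [math.exp(-0.5 * ((z - x) / meas_std) ** 2) for x in particles]
        total = sum(weights)
        weights = [w / total for w in weights]
        # 3. Estimate: posterior mean approximated by the weighted particle average.
        estimates.append(sum(w * x for w, x in zip(weights, particles)))
        # 4. Resample (multinomial): duplicate likely particles, drop unlikely ones.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates


if __name__ == "__main__":
    noise = random.Random(1)
    true_value = 2.0
    zs = [true_value + noise.gauss(0.0, 1.0) for _ in range(50)]
    print(f"final estimate: {bootstrap_particle_filter(zs)[-1]:.3f}")
```

Resampling at every step with multinomial sampling is the simplest choice; practical implementations often resample only when the effective sample size drops and use lower-variance schemes such as systematic resampling.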

Applications of Sensor Fusion

Sensor fusion has numerous applications across various fields:

- Autonomous Vehicles: By fusing data from cameras, LIDAR, and radar, vehicles can accurately perceive their surroundings, detect obstacles, and navigate safely.
- Robotics: Robots use sensor fusion to combine inputs from various sensors, such as accelerometers and gyroscopes, to maintain balance and navigate complex environments.
- Smart Cities: Sensor fusion supports traffic management by integrating data from road sensors, cameras, and GPS to optimize traffic flow and reduce congestion.
- Healthcare: In biomedical engineering, sensor fusion techniques are used to analyze biosignals from multiple sources, improving diagnostics and patient monitoring[1][2][3].
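For the robotics case, the accelerometer/gyroscope combination is often handled by a complementary filter, a lighter-weight alternative to the Kalman filter that is swapped in here for illustration rather than taken from the sources listed below. The sketch estimates a single tilt angle: the integrated gyro rate is smooth but drifts, while the tilt implied by gravity in the accelerometer reading is drift-free but noisy. The function names, the blend factor `alpha`, and the simulated gyro bias are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def accel_tilt(ax: float, az: float) -> float:
    # Tilt angle (rad) implied by the measured gravity vector; valid only when
    # the robot is not accelerating, and noisy under vibration.
    return math.atan2(ax, az)


def complementary_filter(
    gyro_rates: Sequence[float],
    accels: Sequence[Tuple[float, float]],
    dt: float,
    alpha: float = 0.98,
    angle0: float = 0.0,
) -> List[float]:
    angle = angle0
    history = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        # Integrate the gyro (smooth but drifts), then nudge the result toward
        # the accelerometer tilt (drift-free but noisy) with weight 1 - alpha.
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * accel_tilt(ax, az)
        history.append(angle)
    return history


if __name__ == "__main__":
    true_tilt, gyro_bias, dt, steps = 0.1, 0.05, 0.01, 2000
    gyro = [gyro_bias] * steps  # stationary robot: the gyro reads only its bias
    acc = [(math.sin(true_tilt), math.cos(true_tilt))] * steps
    fused = complementary_filter(gyro, acc, dt)
    gyro_only = sum(r * dt for r in gyro)  # naive integration of the biased gyro
    print(f"fused={fused[-1]:.4f} rad, gyro-only={gyro_only:.4f} rad, true={true_tilt} rad")
```

With a constant gyro bias `b`, the fused angle settles at the true tilt plus `alpha * b * dt / (1 - alpha)` (about 0.0245 rad in the example), whereas plain integration drifts without bound. Raising `alpha` smooths the output but slows the accelerometer's correction.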

Conclusion

Sensor fusion is a powerful methodology that enhances the understanding of complex systems by combining data from multiple sensors. Techniques like the Kalman filter, FusionNet, and particle filters play a vital role in achieving accurate and reliable results. As technology advances, the integration of sensor fusion in various applications will continue to grow, driving innovation in fields such as autonomous systems and smart technologies.

Further Reading

1. Wilfried Elmenreich, "An Introduction to Sensor Fusion" (PDF): https://www.researchgate.net/profile/Wilfried-Elmenreich/publication/267771481_An_Introduction_to_Sensor_Fusion/links/55d2e45908ae0a3417222dd9/An-Introduction-to-Sensor-Fusion.pdf

2. "Sensor fusion", Wikipedia

3. "Sensor Fusion: The Ultimate Guide to Combining Data for Enhanced Perception and Decision-Making"

4. "Chapter 6: Sensor fusion", NovAtel

5. "Basics of Sensor Fusion" (2020, PDF): https://users.aalto.fi/~ssarkka/pub/basics_of_sensor_fusion_2020.pdf