SqueezeNet: A Compact Deep Neural Network for Image Classification

SqueezeNet is a deep neural network for image classification, released in 2016 by researchers from DeepScale, the University of California, Berkeley, and Stanford University. Its goal was a smaller network with far fewer parameters that still matches the accuracy of larger networks such as AlexNet.

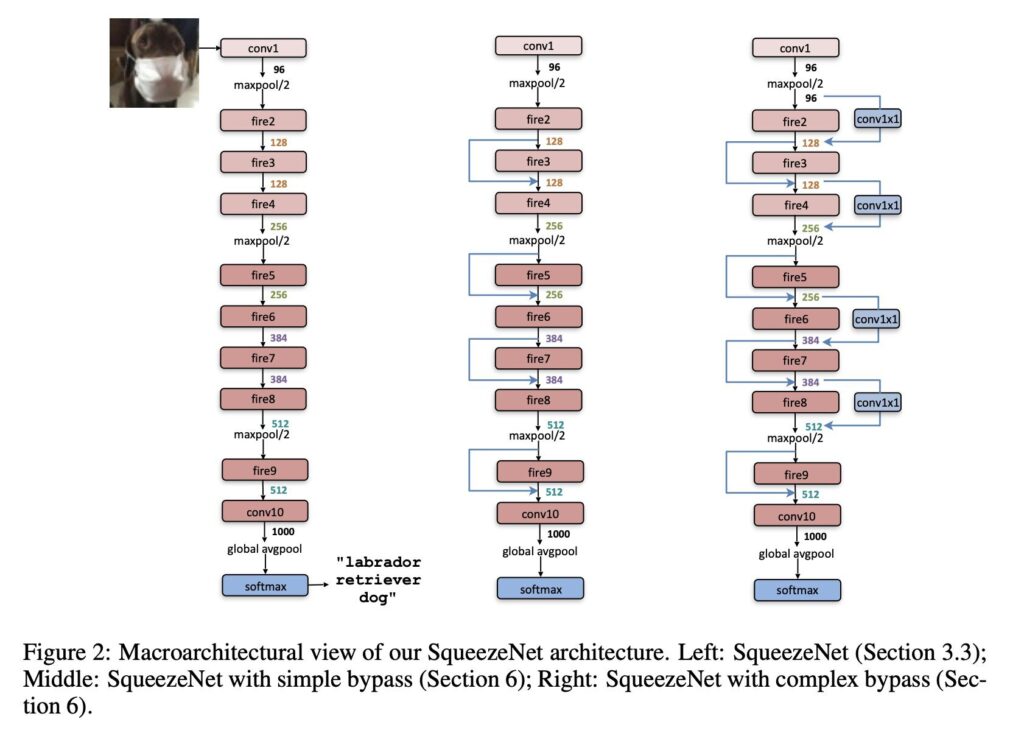

Design and Architecture

SqueezeNet achieves its compact size through three design strategies:

1. Fire Modules: The core building block of SqueezeNet is the Fire module, which consists of a squeeze layer (using 1×1 filters) followed by an expand layer (using a mix of 1×1 and 3×3 filters). Because the squeeze layer cuts the number of channels fed to the 3×3 filters, the module needs far fewer parameters than a plain 3×3 convolution layer.

2. Late Pooling: Max-pooling (downsampling) is deferred to relatively late in the network, so that most convolution layers operate on large activation maps and retain more spatial information.

3. Parameter Reduction: A 1×1 filter has 9 times fewer weights than a 3×3 filter, so using 1×1 filters extensively and limiting the use of 3×3 filters reduces the total number of parameters.
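
To make the saving concrete, the following is a minimal sketch that counts the parameters of one Fire module and compares them with a single plain 3×3 convolution layer with the same input and output channels. The channel sizes (96 inputs, 16 squeeze filters, 64 + 64 expand filters) and the helper names `conv_params` and `fire_params` are illustrative assumptions, not values stated in this article.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus one bias per output channel for a square 2-D convolution."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels


def fire_params(in_channels, squeeze, expand1x1, expand3x3):
    """Parameters of a Fire module: a 1x1 squeeze layer feeding parallel
    1x1 and 3x3 expand layers."""
    squeeze_layer = conv_params(in_channels, squeeze, 1)
    expand_1x1 = conv_params(squeeze, expand1x1, 1)
    expand_3x3 = conv_params(squeeze, expand3x3, 3)
    return squeeze_layer + expand_1x1 + expand_3x3


# Assumed, illustrative sizes: 96 input channels, 16 squeeze filters,
# 64 + 64 expand filters, so the module outputs 128 channels.
fire = fire_params(96, 16, 64, 64)

# Baseline: one plain 3x3 convolution mapping 96 channels to 128 channels.
plain = conv_params(96, 128, 3)

print(fire)                    # 11920
print(plain)                   # 110720
print(round(plain / fire, 1))  # 9.3
```

Under these assumptions the Fire module uses roughly nine times fewer parameters than the plain layer, mostly because the 3×3 filters see only 16 channels instead of 96.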

The architecture begins with a standalone convolution layer, followed by eight Fire modules, and ends with a final convolution layer. The network performs max-pooling with a stride of 2 after specific layers to gradually reduce the spatial dimensions of the feature maps[2][5].
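
As a rough sketch of how the feature maps shrink, the snippet below traces the side length of the feature map through the layer sequence described above. Several specifics are assumptions that this article does not state: a 227×227 input, a 7×7 stride-2 first convolution, 3×3 stride-2 max-pooling without padding placed after the first convolution, the third Fire module, and the seventh Fire module, and Fire modules that preserve spatial size. The names `STAGES`, `out_size`, and `trace_spatial_sizes` are hypothetical.

```python
def out_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride). Fire modules and the final 1x1 convolution are
# assumed to preserve spatial size, so they are modeled as kernel 1, stride 1.
STAGES = [
    ("conv1", 7, 2),
    ("maxpool", 3, 2),
    ("fire2-fire4", 1, 1),
    ("maxpool", 3, 2),
    ("fire5-fire8", 1, 1),
    ("maxpool", 3, 2),
    ("fire9", 1, 1),
    ("conv10", 1, 1),
]


def trace_spatial_sizes(input_size=227):
    """Return (stage name, feature-map side length) pairs for the assumed layout."""
    trace = []
    size = input_size
    for name, kernel, stride in STAGES:
        size = out_size(size, kernel, stride)
        trace.append((name, size))
    return trace


for name, size in trace_spatial_sizes():
    print(f"{name:12s} {size} x {size}")
```

With these assumptions the side length goes 111, 55, 27, 13, so five of the eight Fire modules run at 27×27 or larger before the last pooling step.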

Performance and Comparisons

SqueezeNet achieves AlexNet-level accuracy on the ImageNet dataset with 50 times fewer parameters, giving a model size of about 5 MB compared to AlexNet’s 240 MB. The smaller model fits more easily into memory and is cheaper to transmit over a network. With model compression techniques such as Deep Compression, the model can be reduced further to less than 0.5 MB[1][4].
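
The size figures above can be checked with simple arithmetic. The sketch below assumes uncompressed weights stored as 32-bit floats (4 bytes per parameter) and 1 MB = 1,000,000 bytes; both are assumptions made for illustration, and the sizes are the rounded values quoted in this section.

```python
BYTES_PER_PARAM = 4  # assumption: uncompressed 32-bit float weights

ALEXNET_MB = 240         # rounded size quoted in the text
SQUEEZENET_MB = 5        # rounded size quoted in the text
COMPRESSED_MB = 0.5      # upper bound quoted for the compressed model


def approx_params(size_mb):
    """Estimate the parameter count from a model size in MB (1 MB = 1e6 bytes)."""
    return int(size_mb * 1_000_000 / BYTES_PER_PARAM)


print(approx_params(ALEXNET_MB))     # 60000000
print(approx_params(SQUEEZENET_MB))  # 1250000
print(ALEXNET_MB / SQUEEZENET_MB)    # 48.0
print(ALEXNET_MB / COMPRESSED_MB)    # 480.0
```

The rounded sizes give a ratio of 48, consistent with the roughly 50-fold reduction in parameters, and the compressed model is at least 480 times smaller than AlexNet.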

Framework Support and Applications

Since its release, SqueezeNet has been ported to various deep learning frameworks, including PyTorch, Apache MXNet, and Apple CoreML, and third-party implementations exist for TensorFlow and other frameworks. Its small size makes it suitable for deployment on low-power devices such as smartphones and FPGAs[1].

Offshoots and Further Research

Following the success of SqueezeNet, the research team and the broader community have developed several related models:

– SqueezeDet: For object detection.

– SqueezeSeg: For semantic segmentation of LIDAR data.

– SqueezeNext: An enhanced version for image classification.

– SqueezeNAS: For neural architecture search in semantic segmentation[1].

These models continue the trend of creating efficient, resource-friendly neural networks for various applications.

Conclusion

SqueezeNet showed that a compact network can reach AlexNet-level accuracy with a fraction of the parameters of larger models. Its influence is visible in its adoption across deep learning frameworks and in the related models built for object detection, semantic segmentation, and neural architecture search.

References:

– [1] Wikipedia, “SqueezeNet”

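– [2] OpenReview, “SqueezeNet: AlexNet-level accuracy with 50x fewer parameters”

– [4] arXiv, “[1602.07360] SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size”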

– [5] CSE, IIT Delhi, “SqueezeNet PDF”

Further Reading

1. SqueezeNet – Wikipedia

2. https://openreview.net/pdf?id=S1xh5sYgx

3. (Not recommended) SqueezeNet convolutional neural network – MATLAB squeezenet

4. [1602.07360] SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size

5. https://www.cse.iitd.ac.in/~rijurekha/course/squeezenet.pdf