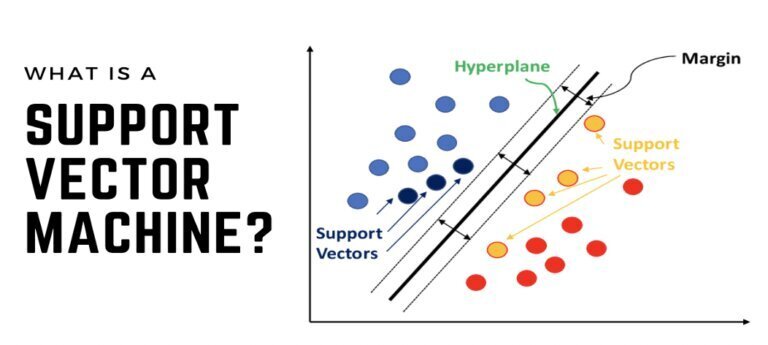

Support Vector Machines (SVMs) are a class of supervised learning algorithms used primarily for classification and regression. The core idea is to find the hyperplane that best separates data points of different classes in the feature space. This hyperplane is chosen to maximize the margin, the distance between the hyperplane and the nearest data points from each class, which are known as support vectors. A larger margin generally improves the model’s generalization to unseen data[1][3].
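The margin idea can be made concrete with a small sketch. The toy points, the labels, and the hyperplane `x1 + x2 - 2 = 0` below are hypothetical values chosen for illustration; the code only measures the margin of a given hyperplane and does not search for the optimal one.

```python
import math

def signed_distance(w, b, x):
    """Signed distance from point x to the hyperplane w.x + b = 0."""
    norm = math.sqrt(sum(wi * wi for wi in w))
    return (sum(wi * xi for wi, xi in zip(w, x)) + b) / norm

def margin_and_support(w, b, points, labels):
    """Return the geometric margin and the indices of the closest points."""
    # Multiplying by the label makes the distance positive for correctly
    # classified points, whichever side of the hyperplane they are on.
    dists = [y * signed_distance(w, b, x) for x, y in zip(points, labels)]
    margin = min(dists)
    support = [i for i, d in enumerate(dists)
               if math.isclose(d, margin, abs_tol=1e-9)]
    return margin, support

# Hypothetical toy data: two classes in 2-D, labelled +1 and -1.
points = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, -1.0)]
labels = [1, 1, -1, -1]

# Hypothetical separating hyperplane: x1 + x2 - 2 = 0.
w, b = (1.0, 1.0), -2.0

margin, support = margin_and_support(w, b, points, labels)
print(margin, support)  # margin is sqrt(2), about 1.414; support is [0, 2]
```

Here the points at indices 0 and 2 sit closest to the hyperplane, one from each class, so they play the role of support vectors for this particular hyperplane.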

Key Features of SVMs

- Binary Classification: SVMs are inherently binary classifiers, designed to distinguish between two classes. They can be extended to multi-class problems with one-vs-all, which trains one binary classifier per class, or one-vs-one, which trains one binary classifier per pair of classes[1][4].

- Kernel Trick: One of the most powerful aspects of SVMs is their ability to handle non-linearly separable data through the use of kernel functions. These functions implicitly map the input features into a higher-dimensional space, allowing for the separation of classes that cannot be divided by a straight line in the original space. Common kernels include the Radial Basis Function (RBF) and polynomial kernels[1][3][5].

- Support Vectors: The data points closest to the hyperplane are termed support vectors. They determine the position and orientation of the hyperplane: removing a support vector can change it, while removing a point that is not a support vector leaves the model unchanged[4][5].
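The "implicit mapping" behind the kernel trick can be checked by hand for a simple case. The sketch below uses the degree-2 polynomial kernel `(x . z)^2` on 2-D inputs, for which an explicit 3-D feature map is known. The function names and the two sample vectors are illustrative assumptions, not taken from any library.

```python
import math

def poly2_kernel(x, z):
    """Degree-2 polynomial kernel (x . z)^2, computed in the original 2-D space."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2

def phi(x):
    """Explicit 3-D feature map whose dot product reproduces poly2_kernel."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)

def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))

# Hypothetical sample vectors.
x, z = (1.0, 2.0), (3.0, -1.0)

k_implicit = poly2_kernel(x, z)    # never leaves the 2-D input space
k_explicit = dot(phi(x), phi(z))   # maps to 3-D first, then takes the dot product

print(k_implicit, k_explicit)      # both are 1.0, up to floating-point rounding
```

Both routes give the same value, but the kernel version never constructs the higher-dimensional vectors. For kernels such as RBF, whose feature space is infinite-dimensional, only the implicit route is practical.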

Applications

SVMs are versatile and can be applied to various domains, including:

- Text Classification: Such as spam detection and sentiment analysis.

- Image Recognition: For tasks like face detection and handwriting recognition.

- Bioinformatics: Including gene classification and protein structure prediction[3][5].

Advantages and Limitations

Advantages:

- Effective in high-dimensional spaces, including cases where the number of dimensions exceeds the number of samples.

- Robust against overfitting, especially in high-dimensional space, due to the maximum margin principle[2][3].

Limitations:

- Performance is highly dependent on the choice of the kernel and its parameters.

- SVMs can be less effective on very large datasets: training time for kernel SVMs grows faster than linearly with the number of samples, so training may require significant computational resources[2][5].
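To see why kernel parameters matter so much, consider the RBF kernel in its usual form `exp(-gamma * ||x - z||^2)`. The sketch below evaluates it for one pair of points under three values of `gamma`. The points and the `gamma` values are arbitrary choices for illustration.

```python
import math

def rbf_kernel(x, z, gamma):
    """RBF kernel exp(-gamma * ||x - z||^2); values near 1 mean 'very similar'."""
    sq_dist = sum((xi - zi) ** 2 for xi, zi in zip(x, z))
    return math.exp(-gamma * sq_dist)

# Hypothetical pair of points with squared distance 2.
x, z = (0.0, 0.0), (1.0, 1.0)

# The same two points look similar or unrelated depending on gamma.
similarities = {gamma: rbf_kernel(x, z, gamma) for gamma in (0.1, 1.0, 10.0)}

for gamma, value in similarities.items():
    print(f"gamma={gamma}: similarity={value:.3g}")
```

With `gamma=0.1` the similarity is about 0.819, with `gamma=1.0` about 0.135, and with `gamma=10.0` about 2.1e-9. A small `gamma` lets distant points influence each other and tends to give smoother decision boundaries, while a large `gamma` makes each point matter only in its immediate neighborhood, which can lead to overfitting.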

In summary, Support Vector Machines are a foundational tool in machine learning, offering robust performance for both classification and regression tasks, especially in complex and high-dimensional datasets. Their ability to leverage the kernel trick makes them particularly powerful for non-linear problems.

Further Reading

1. Introduction to Support Vector Machines (SVM) – GeeksforGeeks

2. https://course.ccs.neu.edu/cs5100f11/resources/jakkula.pdf

3. Support Vector Machine (SVM) Algorithm – GeeksforGeeks

4. Support Vector Machine — Introduction to Machine Learning Algorithms | by Rohith Gandhi | Towards Data Science

5. All You Need to Know About Support Vector Machines

Description:

Performs classification and regression tasks by finding the optimal separating hyperplane.

IoT Scenes:

Data classification, anomaly detection, pattern recognition, and predictive modeling.

Data Classification: Classifying data from sensors into categories (e.g., normal vs. faulty).

Anomaly Detection: Detecting outliers and unusual patterns in sensor data.

Pattern Recognition: Identifying and classifying patterns in IoT data.

Predictive Modeling: Making predictions based on historical data.
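As a rough illustration of the sensor classification scene (normal vs. faulty), the sketch below trains a linear SVM by full-batch subgradient descent on the regularized hinge loss. Everything specific here is an assumption made for the example: the standardized two-feature readings, the label encoding (-1 for normal, +1 for faulty), and the hyperparameters `lam`, `lr`, and `epochs`. It is a minimal teaching sketch, not a substitute for a tuned library implementation.

```python
def train_linear_svm(points, labels, lam=0.01, lr=0.1, epochs=200):
    """Fit w, b by subgradient descent on lam/2 * ||w||^2 + mean hinge loss."""
    n, dim = len(points), len(points[0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the regularization term
        grad_b = 0.0
        for x, y in zip(points, labels):
            score = sum(wj * xj for wj, xj in zip(w, x)) + b
            if y * score < 1:  # point is inside the margin or misclassified
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def predict(w, b, x):
    """Return +1 (faulty) or -1 (normal) from the sign of the decision function."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

# Hypothetical standardized sensor readings, e.g. (temperature, vibration).
readings = [
    (-1.0, -0.8), (-0.9, -1.1), (-1.2, -0.9), (-0.8, -1.0),  # normal
    (1.0, 0.9), (0.8, 1.1), (1.1, 1.0), (0.9, 0.8),          # faulty
]
labels = [-1, -1, -1, -1, 1, 1, 1, 1]

w, b = train_linear_svm(readings, labels)
predictions = [predict(w, b, x) for x in readings]
print(predictions)
```

On this well-separated toy data the learned hyperplane classifies every training reading correctly. Real sensor data is noisier and usually calls for feature scaling, a held-out test set, and often a non-linear kernel.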