TensorFlow Lite is a lightweight version of TensorFlow designed specifically for mobile and embedded devices, enabling efficient on-device machine learning. It allows developers to run machine learning models on various platforms, including Android, iOS, and microcontrollers, without relying on server-based architectures.

Key Features

- Optimized for On-Device Machine Learning: TensorFlow Lite addresses critical constraints such as latency, privacy, connectivity, model size, and power consumption, making it suitable for devices with limited resources. Because inference runs on the device itself, models can respond quickly and keep working without a constant internet connection.

- Multiple Platform Support: It supports a wide range of platforms, including Android, iOS, embedded Linux, and microcontrollers, so the same model can be deployed across different environments.

- Diverse Language Support: TensorFlow Lite supports multiple programming languages, including Java, Swift, Objective-C, C++, and Python, making it accessible to a broad audience of developers.

- High Performance: The framework includes hardware acceleration and model optimization features, which improve the performance of machine learning applications on mobile devices.

- End-to-End Examples: TensorFlow Lite provides examples for common tasks such as image classification, object detection, and speech recognition, which gives developers a working starting point.

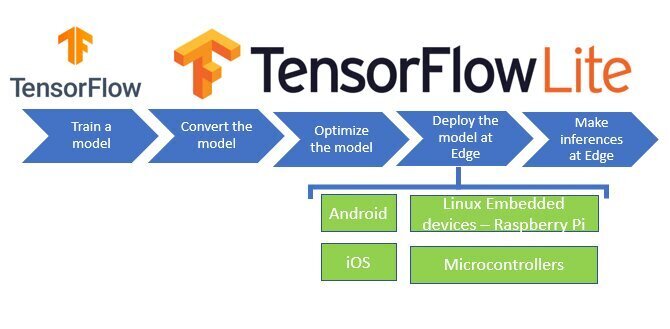

Development Workflow

The development process with TensorFlow Lite typically involves three main steps:

- Model Generation: Developers can start from an existing TensorFlow Lite model or convert a TensorFlow model into the TensorFlow Lite format (.tflite). The conversion process can include optimizations such as quantization to reduce model size and improve inference speed.

- Running Inference: Inference is the execution of the model on input data to make predictions. TensorFlow Lite provides APIs for running inference across different platforms and languages, so developers can make use of the capabilities of their target devices.

- Deployment: After testing and optimizing the model, developers deploy it on mobile or embedded devices and confirm that it runs efficiently in real-world scenarios.
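
The quantization mentioned in the Model Generation step can be illustrated with a minimal sketch. The code below is not TensorFlow Lite's API or its actual implementation. It is a plain-Python illustration, assuming a simple 8-bit affine scheme, of why storing weights as 8-bit integers instead of 32-bit floats shrinks a model by roughly a factor of four at the cost of a small rounding error. The function names `quantize` and `dequantize` and the random weights are hypothetical.

```python
import array
import random


def quantize(weights):
    """Map float weights to int8 values using an affine (scale, zero_point) scheme."""
    # The represented range must contain 0.0 so that zero is stored exactly.
    lo = min(min(weights), 0.0)
    hi = max(max(weights), 0.0)
    # Width of one int8 step in float units; fall back to 1.0 for all-zero input.
    scale = (hi - lo) / 255.0 or 1.0
    # The int8 value that represents the float 0.0.
    zero_point = round(-128 - lo / scale)
    # Round each weight to the nearest step and clamp to the int8 range.
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return array.array("b", q), scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate float weights from their int8 representation."""
    return [(v - zero_point) * scale for v in q]


# Hypothetical "layer": 1,000 random weights standing in for a trained model.
random.seed(0)
weights = [random.uniform(-1.0, 1.0) for _ in range(1000)]

q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)

# Storage cost: 4 bytes per float32 weight versus 1 byte per int8 weight.
float32_bytes = len(weights) * array.array("f").itemsize
int8_bytes = len(q) * q.itemsize
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"float32: {float32_bytes} bytes, int8: {int8_bytes} bytes")
print(f"largest rounding error: {max_error:.5f} (scale = {scale:.5f})")
```

In a real project this step is not written by hand: the TensorFlow Lite converter (`tf.lite.TFLiteConverter` in the Python API) performs the conversion to .tflite and can apply this kind of optimization to the whole model.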

Use Cases

TensorFlow Lite is widely used in various applications, including:

- Gesture Recognition: Enabling applications to respond to user gestures for control or interaction.

- Image Recognition: Allowing devices to identify and analyze images captured through cameras.

- Speech Recognition: Facilitating voice-driven interfaces in applications like virtual assistants.

Conclusion

TensorFlow Lite stands out as a powerful tool for developers looking to implement machine learning on mobile and embedded devices. Its lightweight architecture, combined with robust performance and extensive support for various platforms and languages, makes it an ideal choice for creating intelligent applications that operate effectively in resource-constrained environments[1][2][4].

Further Reading

1. TensorFlow Lite

2. Introduction to TensorFlow Lite – GeeksforGeeks

3. Learn the Latest Tech Skills; Advance Your Career | Udacity

4. A Basic Introduction to TensorFlow Lite | by Renu Khandelwal | Towards Data Science

5. Get Started with TensorFlow | TensorFlow official Chinese website

Description:

A lightweight version of TensorFlow optimized for mobile and edge devices.

IoT Scenarios:

Edge AI, Mobile applications, Real-time processing, Smart sensors

IoT Feasibility:

High: Specifically designed for resource-constrained devices, making it ideal for IoT.