Tiny YOLO (You Only Look Once) is a streamlined version of the YOLO object detection model, designed to perform real-time object detection with reduced computational requirements. This makes it particularly suitable for applications on devices with limited processing power.

Tiny YOLO Variants

Tiny YOLO v2

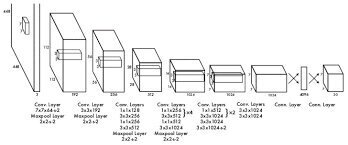

Tiny YOLO v2 is an early simplified version of the YOLO architecture. It consists of a small stack of convolutional layers with leaky ReLU activations, interleaved with max-pooling layers that downsample the feature maps. The model divides the image into a grid, and each grid cell predicts bounding boxes and class probabilities for objects whose centers fall within that cell. The design trades some accuracy for speed, which makes it suitable for real-time applications[4].
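To make the grid-based prediction concrete, the sketch below decodes one raw prediction from one grid cell into a normalized bounding box. It assumes the anchor-based parameterization commonly associated with YOLO v2 (sigmoid offsets for the box center, exponential scaling of an anchor for width and height). The function name `decode_cell`, the 13×13 grid, and the anchor size are illustrative choices, not values taken from a specific trained model.

```python
import math


def sigmoid(x: float) -> float:
    """Squash a raw network output into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_cell(raw, col, row, grid_size, anchor):
    """Decode one raw prediction (tx, ty, tw, th, to) from grid cell (col, row).

    Returns (cx, cy, w, h, objectness). Box values are normalized to [0, 1]
    relative to the image. `anchor` is (width, height) in grid-cell units
    (a hypothetical anchor, chosen for illustration only).
    """
    tx, ty, tw, th, to = raw
    # The center is an offset inside the cell, so it can never leave the cell.
    cx = (col + sigmoid(tx)) / grid_size
    cy = (row + sigmoid(ty)) / grid_size
    # Width and height rescale the anchor box exponentially.
    w = anchor[0] * math.exp(tw) / grid_size
    h = anchor[1] * math.exp(th) / grid_size
    return cx, cy, w, h, sigmoid(to)


# All-zero raw outputs from the middle cell of a 13x13 grid.
box = decode_cell((0.0, 0.0, 0.0, 0.0, 0.0), col=6, row=6,
                  grid_size=13, anchor=(2.0, 3.0))
print(box)
```

With all-zero raw outputs, the decoded box is centered in the middle of its cell and has exactly the size of the anchor, which is why anchors act as a prior on box shape.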

Tiny YOLO v3

Tiny YOLO v3 builds on its predecessor by predicting at two scales. It is typically trained on the COCO dataset, which allows it to detect 80 object classes. For a 416×416 input, the model divides the image into 13×13 and 26×26 grid cells, with each cell predicting multiple bounding boxes and class scores. The network uses fewer layers and fewer anchor boxes than the full YOLO v3 model, which yields faster inference while maintaining reasonable accuracy[2][5].
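The two grid sizes follow directly from the input resolution and the downsampling factor of each detection head. The sketch below works through that arithmetic under assumptions that match the usual Darknet configuration but are not stated in the text above: a 416×416 input, strides of 32 and 16, and three anchor boxes per scale. The helper name `yolo_output_shapes` is hypothetical.

```python
def yolo_output_shapes(input_size=416, strides=(32, 16),
                       anchors_per_scale=3, num_classes=80):
    """Return the (grid, grid, channels) shape of each detection output.

    Each anchor predicts 4 box coordinates, 1 objectness score, and one
    score per class, so every grid cell carries
    anchors_per_scale * (5 + num_classes) values.
    """
    shapes = []
    for stride in strides:
        grid = input_size // stride
        channels = anchors_per_scale * (5 + num_classes)
        shapes.append((grid, grid, channels))
    return shapes


anchors_per_scale = 3  # assumed value, see the note above
shapes = yolo_output_shapes(anchors_per_scale=anchors_per_scale)
# Total candidate boxes before confidence filtering and non-maximum suppression.
total_boxes = sum(g * g * anchors_per_scale for g, _, _ in shapes)

print(shapes)       # [(13, 13, 255), (26, 26, 255)]
print(total_boxes)  # 2535
```

Under these assumptions the tiny model produces 2,535 candidate boxes per image, which are then filtered by confidence and non-maximum suppression.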

Tiny YOLO v4

Tiny YOLO v4 is a further reduced version of YOLO v4, designed to run efficiently on hardware with limited computational resources. It features a compressed CSP (Cross Stage Partial) backbone with 29 convolutional layers and only two YOLO layers instead of three. The model reaches a mean Average Precision (mAP) of about 40% on the MS COCO dataset, a figure usually reported at an IoU threshold of 0.5, with an inference time of about 3 milliseconds per image on a Tesla P100 GPU[3].

Key Features

- Real-Time Detection: All Tiny YOLO variants are designed for real-time object detection, making them suitable for applications like surveillance, autonomous driving, and robotics.

- Reduced Complexity: These models have fewer convolutional layers and parameters compared to their full-sized counterparts, which reduces the computational load and allows them to run on devices with limited processing power.

- High Efficiency: Despite their reduced size, Tiny YOLO models maintain a balance between speed and accuracy, making them practical for various real-world applications.

Implementation and Usage

Tiny YOLO models can be implemented and deployed with various deep learning frameworks and toolkits, such as TensorFlow, PyTorch, and OpenVINO. For instance, Tiny YOLO v3 Darknet weights can be converted to TensorFlow format, or a Keras version of the model can be converted to ONNX with keras2onnx. The converted model can then be compiled to an Intermediate Representation (IR) for use with the OpenVINO Inference Engine[2][5].

Example code for setting up Tiny YOLO with Luxonis DepthAI:

```bash
# Clone the DepthAI Python repository and install the example dependencies
git clone https://github.com/luxonis/depthai-python.git
cd depthai-python/examples
python3 install_requirements.py
```

Once the requirements are installed, the Tiny YOLO example can run the model on RGB input frames and display both the RGB preview and the metadata results from the YOLO model[1].

By leveraging the strengths of Tiny YOLO models, developers can deploy efficient and effective object detection systems across a wide range of applications.

Further Reading

1. Tiny Yolo

2. tiny_yolo_v3 | ncappzoo

3. YOLOv4 Tiny Object Detection Model: What is, How to Use

4. Flaport.net | Building Tiny YOLO from scratch using PyTorch

5. open_model_zoo/models/public/yolo-v3-tiny-onnx/README.md at master · openvinotoolkit/open_model_zoo · GitHub