Transformer Models: Revolutionizing Natural Language Processing

Transformer models have emerged as a groundbreaking architecture in the field of natural language processing (NLP) and machine learning. Introduced in 2017 by Vaswani et al. in their seminal paper “Attention Is All You Need,” these models have quickly become the foundation for numerous state-of-the-art language models and applications[1].

At their core, transformer models rely on a mechanism called self-attention, which lets every position in a sequence draw directly on every other position, rather than passing information step by step as recurrent neural networks (RNNs) do or through fixed local windows as convolutional neural networks (CNNs) do[1]. Self-attention lets the model weigh the importance of different parts of the input sequence when generating outputs, which helps it capture long-range dependencies and contextual information[3].
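To make the weighting idea concrete, here is a minimal sketch of scaled dot-product self-attention using only the Python standard library. It is an illustration under simplifying assumptions, not a production implementation: the toy embeddings are made up, the function names are hypothetical, and the input vectors are used directly as queries, keys, and values, whereas a real transformer first passes them through learned linear projections.

```python
import math

def softmax(scores):
    """Turn a list of raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a sequence.

    queries, keys, values: lists of float vectors, one per token.
    Returns (outputs, weights), where weights[i][j] is how strongly
    token i attends to token j.
    """
    scale = math.sqrt(len(keys[0]))
    outputs, weights = [], []
    for q in queries:
        # Similarity of this token's query to every token's key.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        w = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w_j * v[d] for w_j, v in zip(w, values))
               for d in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

# Toy input: three tokens with 2-dimensional embeddings (made-up values).
# Simplification: the same vectors serve as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to 1, so every output vector is a blend of all token vectors, with the most similar tokens contributing the most.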

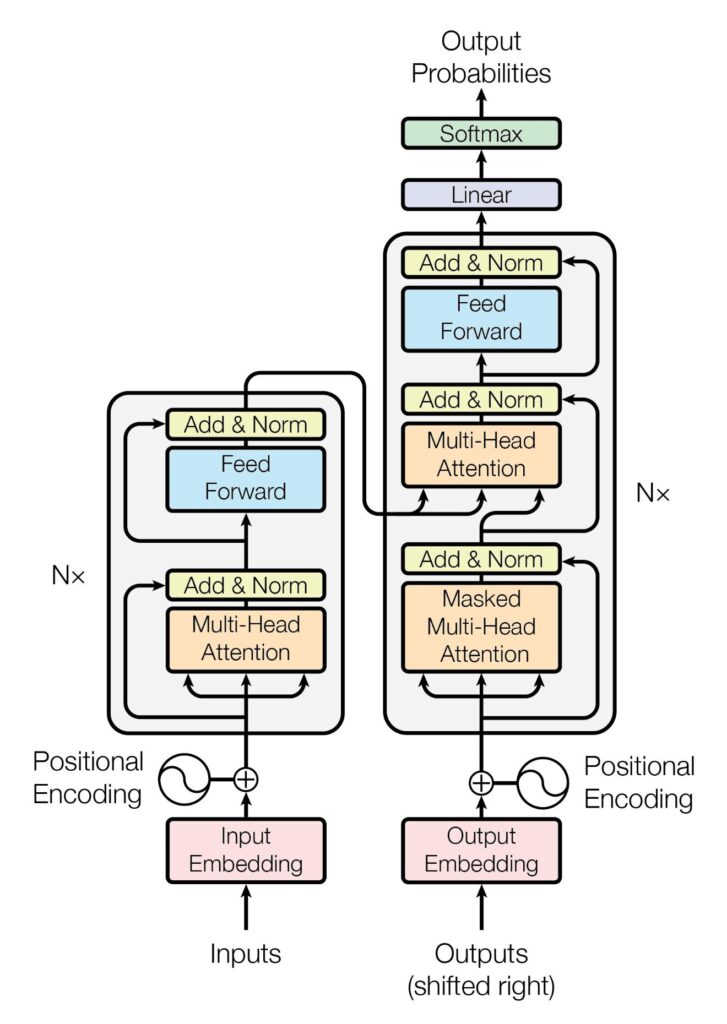

The transformer architecture consists of two main components:

- Encoder: Processes the input sequence and captures relationships between tokens.

- Decoder: Generates the output sequence based on the encoded information.

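Both components are built from stacked layers that combine self-attention with feed-forward neural networks[1]. In the original design, each decoder layer additionally attends to the encoder's output, which is how the encoded information reaches the generated sequence.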
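The layered structure can be sketched as a loop that applies self-attention and then a feed-forward network, once per layer. This is a rough sketch of the encoder side only, under stated assumptions: the weights are hypothetical fixed numbers rather than learned parameters, the inputs serve directly as queries, keys, and values, and the residual connections and layer normalization used in the original paper are left out for brevity.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Each token's output is a weighted average of all token vectors."""
    scale = math.sqrt(len(x[0]))
    out = []
    for q in x:
        w = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in x])
        out.append([sum(w_j * v[d] for w_j, v in zip(w, x))
                    for d in range(len(q))])
    return out

def matvec(vec, matrix):
    """Multiply a row vector by a matrix given as a list of rows."""
    return [sum(v * row[j] for v, row in zip(vec, matrix))
            for j in range(len(matrix[0]))]

def feed_forward(x, w_in, w_out):
    """Apply the same two-layer ReLU network to every position independently."""
    out = []
    for vec in x:
        hidden = [max(0.0, h) for h in matvec(vec, w_in)]
        out.append(matvec(hidden, w_out))
    return out

def encoder(x, layers):
    """Run the sequence through a stack of (attention, feed-forward) layers."""
    for w_in, w_out in layers:
        x = feed_forward(self_attention(x), w_in, w_out)
    return x

# Hypothetical weights mapping 2 -> 3 -> 2 dimensions (not learned values).
w_in = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
w_out = [[0.2, 0.1], [-0.4, 0.6], [0.7, -0.3]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
encoded = encoder(tokens, [(w_in, w_out), (w_in, w_out)])  # two layers
```

The output keeps one vector per input token, which is what allows layers to be stacked to any depth.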

One of the key advantages of transformer models is that their computations can be parallelized across all positions in a sequence, allowing for faster training on modern hardware compared to sequential models like RNNs[1]. This has enabled the development of increasingly large and powerful language models, such as BERT, GPT, and T5, which set new state-of-the-art results on a wide range of NLP tasks[2].
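The difference in parallelism comes from data dependencies, which a small sketch can show. In the toy code below (scalar "embeddings", made-up inputs, and hypothetical function names and weights, all chosen for illustration), the recurrent update at each step needs the hidden state from the previous step, while the attention output at any position depends only on the inputs and can therefore be computed in any order, or all at once on parallel hardware.

```python
import math

def rnn_states(inputs, w_h=0.5, w_x=1.0):
    """Sequential: step t cannot start until step t-1 has produced h."""
    h, states = 0.0, []
    for x in inputs:
        h = math.tanh(w_h * h + w_x * x)
        states.append(h)
    return states

def attention_at(i, inputs):
    """Attention output for position i, computed from the inputs alone."""
    scores = [inputs[i] * x for x in inputs]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return sum(e / total * x for e, x in zip(exps, inputs))

inputs = [0.2, -1.0, 0.7, 1.5]

# Positions evaluated left to right.
in_order = [attention_at(i, inputs) for i in range(len(inputs))]

# The same positions evaluated in an arbitrary order give the same results,
# because no position waits on another position's output.
by_position = {i: attention_at(i, inputs) for i in [3, 0, 2, 1]}
out_of_order = [by_position[i] for i in range(len(inputs))]
```

The Python loop here still runs one position at a time; the point is only that nothing in `attention_at` prevents the positions from being computed simultaneously, whereas `rnn_states` has no such freedom.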

Transformer models have found applications in various domains[1][3], including:

- Machine translation

- Text summarization

- Sentiment analysis

- Question answering

- Image captioning

- Speech recognition

The impact of transformer models extends beyond NLP. In 2020, researchers demonstrated that transformer-based architectures could outperform traditional approaches in computer vision and speech processing tasks[5].

As the field continues to evolve, researchers are exploring ways to make transformer models more efficient, interpretable, and capable of handling even larger datasets[4]. The ongoing development of transformer architectures promises to drive further advancements in AI and machine learning, potentially bringing us closer to more general and versatile artificial intelligence systems[4].

[1]: https://www.algolia.com/blog/ai/an-introduction-to-transformer-models-in-neural-networks-and-machine-learning/

[2]: https://arxiv.org/abs/2302.07730

[3]: https://towardsdatascience.com/transformers-in-depth-part-1-introduction-to-transformer-models-in-5-minutes-ad25da6d3cca?gi=52ff131c9d10

[4]: https://blogs.nvidia.com/blog/what-is-a-transformer-model/

[5]: https://en.wikipedia.org/wiki/Transformer_%28deep_learning_architecture%29

Further Reading

1. An introduction to transformer models. Algolia.

2. Transformer models: an introduction and catalog. arXiv:2302.07730.

3. Gabriel Furnieles. Transformers in depth, Part 1: Introduction to Transformer models in 5 minutes. Towards Data Science.

4. What Is a Transformer Model? NVIDIA Blogs.

5. Transformer (deep learning architecture). Wikipedia.