Over the past couple of days, what was supposed to be a grand robot expo ended up being mercilessly mocked as a “Wax Museum.” After scrolling through the videos and photos from WRC 2024, I couldn’t help but think it looked more like a “Cyber Ghost Story” or an “Uncanny Valley Carnival.” A “robot” doesn’t have to resemble a human, but when it comes to sex robots, most people would probably agree that the more human-like, the better. Or so they wish.

Remember when they said we’d have sex robots by 2024? Yeah, where are they? Seriously, where are they?

Some are still eagerly making their own predictions—like a countdown to when sex robots will finally “make their way into every household”:

In 2014, the Pew Research Center predicted that robotic sex partners would become commonplace within a decade.

In 2015, a UK media outlet claimed that by around 2025, humans would be capable of establishing sexual relationships with machines.

In 2016, a PR firm’s trend-forecasting agency boldly claimed that within ten years, sex robots would become accessible to the middle-to-upper-income crowd.

2014 to 2016 was the last time AI and robots were hyped up to the skies, much like today. And yet, ten years on, where’s the promised sex robot? (Not that I’m impatient! Who’s impatient?!)

One of my most anticipated technological advancements | Giphy

A $10,000 Tender Embrace

Most robots don’t need to be obsessed with “being human.” The robotic arms in factories—designed like crab claws—are already much better than humans at tightening screws and moving heavy objects.

Service robots running around malls and hotels are affectionately known as “faceless bedside tables on wheels with an iPad slapped on.” In such environments, wheels are far more stable and faster than two legs.

As for today’s most common “robots,” you can spot them on the streets: Teslas, or cars from NIO and Xpeng. Unless they can transform, they’ve got nothing to do with humanoids.

But there’s one type of robot that absolutely must resemble a human: the sex robot. The holy grail of humanoid robotics, sex robots must mimic human physiology to the point where every move, expression, and touch doesn’t trigger the “uncanny valley” effect. They need to fulfill emotional desires and max out every technical spec of a robot “person.”

Take a look at the most advanced sex doll website, and you’ll find a model called “Novax” (robots, of course, only come in female versions). The base version is priced at $7,199.99.

Novax features a modular design in case you ever get bored of her looks or body. Her neck, eyes, mouth, and eyebrows can all make “limited” movements. This company started out making anatomical models and adult dolls, so Novax’s appearance is quite “realistic,” with a highly lifelike face. Users can even kiss her—but be careful of potential liquid damage since most of her chips and wiring are in the back of her head.

Want sensors embedded in the robot’s nether regions? That’s extra. These sensors can simulate human touch, detect the user’s movements, and trigger increasingly excited responses—but only in the form of sounds, for now.

If you’re thinking of customizing some “special features” in her face, body, or organs, be ready to shell out another $5,000.

Even with all these advancements, these dolls still can’t be strictly called “robots.” But compared to products from a few years ago, they do offer more complex interactions and a better user experience.

The first-gen Roxxxy, uh… | Screenshot from official promotional material

For instance, the sex dolls from the American brand Roxxxy once featured both male and female versions. These dolls only had a hinged metal skeleton inside, allowing users to manually pose and fix them in various positions… At the time, they sold for nearly $10,000.

If you’re genuinely moved by Novax and think she’s worth every penny, bringing your robotic partner home might just be the start of your problems…

Love’s Pose and Predicament

One lonely night, when you can’t sleep, you decide to cuddle up with your robotic partner—“Sweetheart, strike a pose~”—but suddenly, her body stops responding. Like a jammed washing machine, she freezes mid-motion, stuck in a creepy, contorted pose while emitting a frustrating beep.

Infuriated, you call customer support, “You said she could switch poses seamlessly when I bought her!” The customer service rep explains, “Well, it’s just like with people, you know? As they age, their bodies don’t move as smoothly. The robot’s joints just can’t compare to a human’s.”

Take Tesla’s humanoid robot, “Optimus,” for example. By widely quoted figures, it has 40 actuators (28 in the body and 12 in the hands), with 11 degrees of freedom in each hand and, reportedly, over 200 degrees of freedom across the whole body. By contrast, a human has roughly 350 joints, if you define a joint as any place where two bones meet.

I always thought it would need to be this flexible

For a robot to perform precise movements, it needs motion control algorithms to plan the trajectory of each “joint” and set control parameters (like force, angle, speed, etc.).
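
As a rough illustration of what “planning the trajectory of a joint” can mean, here is a minimal sketch, assuming a single joint that moves from one angle to another in a fixed time and starts and ends at rest. It uses a cubic polynomial, a common textbook choice rather than anything a particular product is known to use; the function name and all the numbers are made up for illustration.

```python
def cubic_joint_trajectory(theta_start, theta_end, duration, t):
    """Angle (deg) and angular speed (deg/s) of one joint at time t.

    Assumes the joint starts and ends at rest, which gives the cubic
    theta(t) = theta_start + delta * (3 s^2 - 2 s^3), with s = t / duration.
    """
    s = min(max(t / duration, 0.0), 1.0)  # clamp normalized time to [0, 1]
    delta = theta_end - theta_start
    angle = theta_start + delta * (3 * s**2 - 2 * s**3)
    speed = delta * (6 * s - 6 * s**2) / duration
    return angle, speed


# Hypothetical example: bend an "elbow" from 0 to 90 degrees in 2 seconds.
for step in range(5):
    t = 0.5 * step
    angle, speed = cubic_joint_trajectory(0.0, 90.0, 2.0, t)
    print(f"t={t:.1f}s  angle={angle:5.1f} deg  speed={speed:5.1f} deg/s")
```

The joint speeds up, peaks halfway, and eases to a stop. A real controller would do this for every joint at once, while also respecting limits on force and speed.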

Some advanced control strategies aim to improve the robot’s autonomy and environmental awareness. Model Predictive Control (MPC), for instance, solves for the sequence of actions that gives the best outcome over a given future time window, carries out only the first of those actions, and then re-plans. That is how a robot can slow down when it spots water on a slope, or take a few preparatory steps before a jump to make the transition smoother.
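
To make the “look ahead, act once, re-plan” idea concrete, here is a toy sketch, not how any real humanoid is controlled. It assumes a point mass sliding along a line, three allowed accelerations, and a brute-force search over every possible plan; the model, the cost weights, and all names are assumptions chosen to keep the example short.

```python
from itertools import product

DT = 0.1                      # time step in seconds (assumed)
ACTIONS = (-1.0, 0.0, 1.0)    # allowed accelerations (assumed)
HORIZON = 6                   # how many steps ahead to look (assumed)


def simulate(state, accel):
    """Toy model: a point mass on a line, state = (position, velocity)."""
    pos, vel = state
    vel = vel + accel * DT
    pos = pos + vel * DT
    return pos, vel


def plan_cost(state, plan, target):
    """Cost of following `plan`: squared distance to target plus a speed penalty."""
    cost = 0.0
    for accel in plan:
        state = simulate(state, accel)
        pos, vel = state
        cost += (pos - target) ** 2 + 0.1 * vel ** 2
    return cost


def mpc_step(state, target):
    """Score every action sequence over the horizon; return only the first action of the best."""
    best_plan = min(product(ACTIONS, repeat=HORIZON),
                    key=lambda plan: plan_cost(state, plan, target))
    return best_plan[0]


state, target = (0.0, 0.0), 1.0
for _ in range(100):
    accel = mpc_step(state, target)   # re-plan from scratch at every step
    state = simulate(state, accel)
print(f"final position {state[0]:.2f}, velocity {state[1]:.2f}")
```

Enumerating every plan only works because this toy has three actions and a short horizon. Real controllers use numerical optimizers and far richer models, but the loop has the same shape.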

But before all that, the robot needs to have an extensive motion library and a map describing its movement routes.

Unparalleled dexterity | Giphy

As you may have gathered, when it comes to “intelligence,” robots like Boston Dynamics’ “Atlas” are still just programmed machines, much like the single-task assembly line tools in a factory. Improvements in environmental perception and motion control algorithms make their “performances” seem spontaneous, but they’re far from truly intelligent.

It’s like your sex robot has only been “trained” to perform a princess carry. One day, you coyly ask it to “lift me up~.” Never mind whether its “hands” might crush your ribs; you should be more concerned that it might slam your head into the ceiling.

Just imagine if that happened in bed… | Giphy

The issue is that traditional robot motion control methods lack generalization. Before a robot can operate in a new, open environment, it needs an accurate model of that environment.

Breaking down the various parts of today’s humanoid robots, you can roughly draw parallels to the human body: the “body” refers to the robot’s physical structure (configuration, materials, etc.) and the hardware modules responsible for each function.

The “cerebellum” refers to the motion control system, which takes commands from the brain and controls the robot’s movements.

The “brain” is responsible for reasoning, planning, decision-making, and environmental perception and interaction—though with today’s level of technology, a robot’s body is more advanced than its brain. Hardware is ahead of intelligence.

Foreplay, Maybe

Human definitions of robot “intelligence” are vague and varied. But almost everyone gets sentimental about one thing: they expect a robot living with them to recognize them, to know it’s them and not mistake them for someone else. If it makes a mistake, it’s like a human partner mumbling someone else’s name in their sleep.

In the past, for a robot to know you’re you, it needed to be shown photos of you from various angles, under different lighting conditions, dressed, undressed, with long hair, short hair, with makeup, and without…

With large language models as its “brain,” a robot can learn general patterns from past training data and apply that knowledge to new problems, becoming adept at analogical reasoning. Advances in natural language understanding and logical reasoning are changing the way robots handle human-machine interaction and decision-making (also the key to shedding their reputation as glorified imbeciles).

When a robot hears the command, “Get me an apple from the fridge,” it typically follows four steps: define the task; break down the task into actions (approach the fridge, open the door, grab the apple, close the door, bring it to you, hand you the apple); retrieve machine-readable symbolic commands for each step; and execute. In the past, robots could only do the final step, while the earlier ones were all pre-programmed by human engineers. But now, robots can directly process natural language commands, breaking them down into logical steps if they’re complex, and then solving them step by step.
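
The four steps can be sketched roughly as follows. This is only an illustration: the breakdown that a language model would generate is replaced by a hard-coded lookup so the example runs on its own, and the `Command` format, the verb names, and the function names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Command:
    """A machine-readable symbolic command (hypothetical format)."""
    verb: str
    target: str


# Step 2 stand-in: a real system would ask a language model for this breakdown.
KNOWN_TASKS = {
    "get me an apple from the fridge": [
        "approach the fridge", "open the door", "grab the apple",
        "close the door", "bring it to you", "hand you the apple",
    ],
}

# Step 3: map each natural-language action to a symbolic command.
ACTION_TO_COMMAND = {
    "approach the fridge": Command("MOVE_TO", "fridge"),
    "open the door": Command("OPEN", "fridge_door"),
    "grab the apple": Command("GRASP", "apple"),
    "close the door": Command("CLOSE", "fridge_door"),
    "bring it to you": Command("MOVE_TO", "user"),
    "hand you the apple": Command("RELEASE", "apple"),
}


def plan(request):
    """Steps 1 to 3: define the task, break it into actions, look up commands."""
    task = request.strip().lower().rstrip(".")
    actions = KNOWN_TASKS[task]
    return [ACTION_TO_COMMAND[action] for action in actions]


def execute(commands):
    """Step 4 stand-in: a real robot would drive motors; here we only log."""
    return [f"{c.verb}({c.target})" for c in commands]


for line in execute(plan("Get me an apple from the fridge.")):
    print(line)
```

In the old days, engineers wrote the contents of both lookup tables by hand for every task. The change described above is that the first table, and increasingly the second, can now be produced on the fly from a spoken request.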

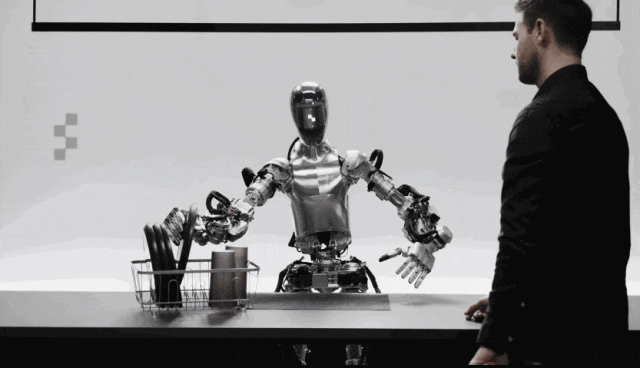

Figure’s impressive performance is thanks to multimodal AI | Screenshot from the official video

A tester once told a Figure AI robot, “Can you put these away over there?” while pointing to a storage bin on the counter. The robot “got it” instantly and even knew to stack washed cups upside down and plates vertically—handling vague references and abstract language just like humans.

So, another lonely night. You bring out your robotic partner and ask, “Darling~ Do you love me?” It replies, “According to Sternberg’s Triangular Theory of Love, love consists of three components: intimacy, passion, and commitment