VGGNet: A Milestone in Deep Learning for Computer Vision

VGGNet is a groundbreaking convolutional neural network (CNN) architecture developed by the Visual Geometry Group at the University of Oxford[1][2]. Introduced in 2014, it significantly advanced the field of computer vision with its deep and uniform structure[5].

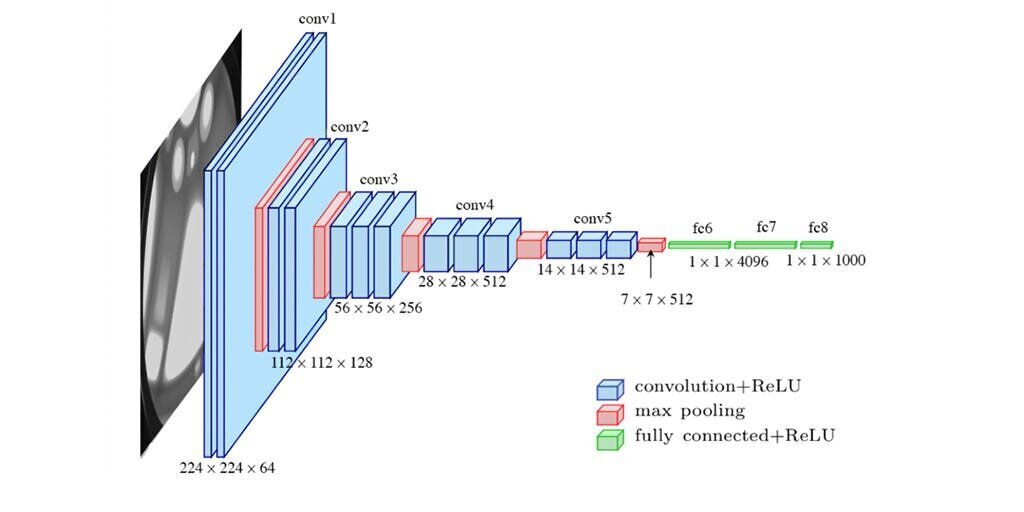

The VGG architecture is characterized by:

- Increased depth, with VGG-16 and VGG-19 containing 16 and 19 weight layers (convolutional plus fully connected), respectively[1][2]

- Consistent use of small 3×3 convolutional filters throughout the network[4]

- Simplicity and uniformity in design, making it easy to implement and understand[1][5]
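The case for small filters comes down to arithmetic that is easy to check. The sketch below compares a stack of 3×3 convolutions against a single larger filter covering the same window, assuming stride 1, equal input and output channel counts, and no biases. The function names and the channel width of 256 are illustrative choices, not taken from the sources cited here.

```python
def receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    # Each stride-1 layer widens the field by (kernel - 1) pixels.
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel: int, channels: int, num_layers: int = 1) -> int:
    """Weight count (biases ignored) for convs with `channels` in and out."""
    return num_layers * kernel * kernel * channels * channels


channels = 256  # illustrative width, an assumption for this example

# Three stacked 3x3 layers cover the same 7x7 window as one 7x7 layer.
stacked_rf = receptive_field(3)
stacked = conv_weights(3, channels, num_layers=3)
single = conv_weights(7, channels)

print(stacked_rf)                  # 7
print(stacked, single)             # 1769472 3211264
print(round(stacked / single, 2))  # 0.55
```

Under these assumptions the stacked design needs about 55% of the weights of the single 7×7 filter, and it applies three non-linearities instead of one.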

VGGNet’s key components include:

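- Convolutional layers with 3×3 filters, each followed by a ReLU activation

- 2×2 max pooling layers that halve the spatial resolution after each block of convolutions

- Three fully connected layers at the end of the network, the last feeding a softmax classifier[4][5]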
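To see how these components fit together, the following sketch traces feature-map shapes through VGG-16. It assumes the standard 224×224 RGB input, 3×3 convolutions with stride 1 and padding 1, and 2×2 max pooling with stride 2; the list encoding and the helper `trace_shapes` are hypothetical names used only for this illustration.

```python
# VGG-16 layout (configuration "D" in the original paper): a number is the
# output channel count of a 3x3 convolution, "M" is a 2x2 max pool.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]
VGG16_FC = [4096, 4096, 1000]  # last layer scores the 1000 ImageNet classes


def trace_shapes(layout, size=224, channels=3):
    """Return (height_or_width, channels) after every layer in `layout`."""
    shapes = []
    for item in layout:
        if item == "M":
            size //= 2       # 2x2 pool, stride 2: halves height and width
        else:
            channels = item  # 3x3 conv, stride 1, padding 1: size unchanged
        shapes.append((size, channels))
    return shapes


shapes = trace_shapes(VGG16_CONV)
final_size, final_channels = shapes[-1]
flattened = final_size * final_size * final_channels

num_conv = sum(1 for item in VGG16_CONV if item != "M")
print(final_size, final_channels)  # 7 512
print(flattened)                   # 25088
print(num_conv + len(VGG16_FC))    # 16
```

The 13 convolutional layers plus 3 fully connected layers give the 16 weight layers in the name, and the five pooling steps shrink the 224×224 input to a 7×7×512 volume before it is flattened.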

The architecture’s depth allows for learning complex hierarchical representations of visual features, resulting in robust and accurate predictions[2]. VGG-16, for instance, achieved an impressive 92.7% top-5 test accuracy on the ImageNet dataset[2].
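Top-5 accuracy counts a prediction as correct when the true label appears among the five highest-scoring classes. A minimal sketch of the metric follows; the function name `top_k_accuracy` and the toy scores are made up for illustration and are not VGG outputs.

```python
def top_k_accuracy(scores, labels, k=5):
    """Fraction of samples whose true label is among the k highest scores."""
    hits = 0
    for sample_scores, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(sample_scores)),
                        key=lambda i: sample_scores[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Toy data: 3 samples, 6 classes (invented scores, no ties).
scores = [
    [0.10, 0.50, 0.04, 0.20, 0.11, 0.05],  # true class 1 is ranked first
    [0.30, 0.06, 0.25, 0.20, 0.15, 0.04],  # true class 5 is ranked last
    [0.02, 0.03, 0.05, 0.10, 0.30, 0.50],  # true class 3 is ranked third
]
labels = [1, 5, 3]

print(f"{top_k_accuracy(scores, labels, k=1):.2f}")  # 0.33
print(f"{top_k_accuracy(scores, labels, k=5):.2f}")  # 0.67
```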

Despite its simplicity compared to more recent models, VGGNet remains popular due to its:

- Strong performance in image classification and object recognition tasks[2][4]

- Versatility and applicability to various computer vision problems[1][2]

However, VGGNet does have some limitations:

- Large model size (VGG-16 has roughly 138 million parameters, which comes to over 500 MB of 32-bit weights)[1]

- High computational requirements for training[2]

- Susceptibility to vanishing or exploding gradients as depth increases, which makes the deeper variants harder to train[2]
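The model-size limitation can be checked by counting parameters directly. This sketch assumes the standard VGG-16 layout with biases, a 224×224 RGB input, 1000 output classes, and 32-bit (4-byte) weights; the helper `count_parameters` is a hypothetical name for this example.

```python
# A number is the output channel count of a 3x3 conv, "M" is a 2x2 max pool.
CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
        512, 512, 512, "M", 512, 512, 512, "M"]
FC = [4096, 4096, 1000]


def count_parameters(conv_layout, fc_layout, in_channels=3, size=224):
    """Return (conv_params, fc_params), counting weights and biases."""
    conv_params = 0
    channels = in_channels
    for item in conv_layout:
        if item == "M":
            size //= 2  # pooling has no parameters, only shrinks the map
        else:
            conv_params += 3 * 3 * channels * item + item
            channels = item

    fc_params = 0
    features = size * size * channels  # flattened conv output
    for out_features in fc_layout:
        fc_params += features * out_features + out_features
        features = out_features
    return conv_params, fc_params


conv_params, fc_params = count_parameters(CONV, FC)
total = conv_params + fc_params
bytes_fp32 = total * 4  # assumes 4 bytes per parameter

print(conv_params)                   # 14714688
print(fc_params)                     # 123642856
print(total)                         # 138357544
print(round(bytes_fp32 / 2**20, 1))  # 527.8
```

Under these assumptions the weights alone occupy about 553 million bytes (roughly 528 MiB), and close to 89% of them sit in the three fully connected layers rather than in the convolutions. Training needs considerably more memory than this, since activations and gradients must be stored as well.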

Nonetheless, VGGNet’s impact on the field of deep learning for computer vision is undeniable. It has served as a foundation for numerous subsequent architectures and continues to be a valuable tool for researchers and practitioners in the field[1][4][5].

Further Reading

1. Very Deep Convolutional Networks (VGG) Essential Guide – viso.ai

2. VGG-16 CNN model – GeeksforGeeks (https://www.geeksforgeeks.org/vgg-16-cnn-model/)

3. VGGNet-16 Architecture: A Complete Guide | Kaggle

4. VGG Explained | Papers With Code

5. VGG-Net Architecture Explained – GeeksforGeeks