In a significant step for both neuroscience and artificial intelligence, scientists have built a virtual brain network that can predict the activity of individual neurons in a living brain. The model, based on the visual system of the fruit fly, lets researchers simulate and rapidly test hypotheses on a computer, which could sharply reduce the time and resources that experiments on live animals usually require.

Srini Turaga, a group leader at the Janelia Research Campus of the Howard Hughes Medical Institute (HHMI), explains, “Now we can start with a guess for how the fly brain might work before anyone has to make an experimental measurement.” The approach, described in the journal Nature, also hints at a future in which energy-hungry AI systems such as ChatGPT could run far more efficiently by borrowing some of the computational strategies used by the biological brains they aim to emulate.

The appeal of the fruit fly brain lies in its compact yet highly efficient design. Jakob Macke, a professor at the University of Tübingen and a co-author of the study, marvels at its capabilities: “It’s able to do so many computations. It’s able to fly, it’s able to walk, it’s able to detect predators, it’s able to mate, it’s able to survive, using just 100,000 neurons.” By contrast, today’s AI systems run on computers with billions of transistors and, taken together, consume as much power as a small nation.

“When we think about AI right now, the leading charge is to make these systems more power efficient,” notes Ben Cowley, a computational neuroscientist at Cold Spring Harbor Laboratory, who was not involved in the study. Leveraging insights from the fruit fly brain could be a pivotal step toward achieving this goal.

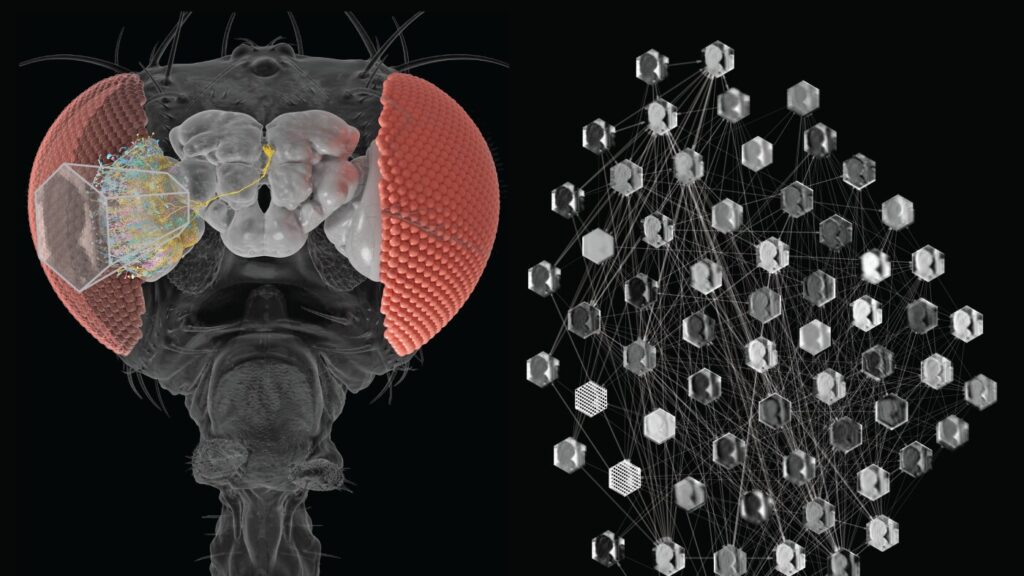

The creation of this virtual brain network is the culmination of over a decade of meticulous research into the fruit fly brain’s architecture. Much of this work, spearheaded and funded by HHMI, has resulted in detailed maps of every neuron and connection within the insect’s brain. This groundwork enabled Turaga, Macke, and PhD candidate Janne Lappalainen to craft a computer model mirroring the fly’s visual system, which constitutes a significant portion of its neural circuitry.

Starting with the fly’s connectome, a comprehensive map of its neural connections, the team had a foundation for understanding the pathways information could take. However, as Macke points out, “That tells you how information could flow from A to B, but it doesn’t tell you which [route] is actually taken by the system.” Scientists can glimpse this process in living flies, but recording the activity of thousands of neurons at once, in real time, is still out of reach.
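Macke’s point about structure versus function can be illustrated with a small graph search. The sketch below assumes a hypothetical four-neuron connectome (the neuron names `A` to `D` and the synapse counts are invented for illustration, not fly data) and lists every loop-free route from `A` to `B` using a depth-first search. The wiring admits three routes, and nothing in the graph itself says which one actually carries the signal.

```python
from typing import Dict, List

# Hypothetical toy connectome: presynaptic neuron -> {postsynaptic neuron: synapse count}.
# Names and counts are invented for illustration, not taken from fly data.
CONNECTOME: Dict[str, Dict[str, int]] = {
    "A": {"C": 12, "D": 3},
    "C": {"B": 8, "D": 5},
    "D": {"B": 20},
    "B": {},
}


def all_routes(graph: Dict[str, Dict[str, int]], start: str, goal: str) -> List[List[str]]:
    """Return every simple (cycle-free) path from start to goal via depth-first search."""
    routes: List[List[str]] = []

    def visit(node: str, path: List[str]) -> None:
        if node == goal:
            routes.append(path)
            return
        for nxt in graph.get(node, {}):
            if nxt not in path:  # skip neurons already on this path to avoid loops
                visit(nxt, path + [nxt])

    visit(start, [start])
    return routes


if __name__ == "__main__":
    for route in all_routes(CONNECTOME, "A", "B"):
        print(" -> ".join(route))
```

The synapse counts are carried along but deliberately ignored by the search: even a heavily weighted edge only says a route is possible, not that it is the one the living system uses.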

So the team set out to simulate a brain, or at least part of one, on a computer. They began by creating virtual versions of 64 neuron types, wired together as they are in the fly’s visual system. They then had these networks “watch” video clips depicting various types of motion.
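What “neuron types interconnected as they would be in a fly’s visual system” can mean in code is sketched below, under heavy assumptions. This is not the published 64-type model: it uses three hypothetical cell types (`R`, `S`, `T`) repeated across a row of columns, with made-up time constants, resting potentials, synapse counts and per-synapse strength (`SCALE`). Each neuron follows a leaky rate equation, `tau * dV/dt = -V + rest + sum(w * relu(V_pre)) + stimulus`, integrated with Euler steps, and the `WIRING` table stands in for the connectome. The toy circuit is a delay-and-coincidence motif, loosely in the spirit of classic motion-detector models: `T` only rises above zero when fast input from its own column coincides with slow, delayed input from the column to its left, so it should respond to a bar moving rightward but not leftward.

```python
from typing import Dict, List, Tuple

# Hypothetical cell types with invented parameters. A connectome fixes who
# connects to whom, but not values like these, which must be chosen or learned.
TYPES: Dict[str, Dict[str, float]] = {
    "R": {"tau": 1.0, "rest": 0.0},   # receives the visual stimulus directly
    "S": {"tau": 5.0, "rest": 0.0},   # slow cell: a delayed, smoothed copy of R
    "T": {"tau": 1.0, "rest": -1.0},  # needs two coincident inputs to rise above 0
}

# Hypothetical type-level wiring, repeated in every column:
# (pre type, post type, column offset, synapse count, sign).
# Offset 1 means the presynaptic cell sits one column to the left.
WIRING: List[Tuple[str, str, int, int, int]] = [
    ("R", "S", 0, 4, +1),
    ("R", "T", 0, 4, +1),
    ("S", "T", 1, 4, +1),
]
SCALE = 0.25  # assumed strength of a single synapse, so each connection weighs 1.0


def relu(v: float) -> float:
    return max(v, 0.0)


def simulate(stimulus: List[List[float]], dt: float = 1.0) -> Dict[Tuple[str, int], List[float]]:
    """Euler-integrate tau * dV/dt = -V + rest + sum(w * relu(V_pre)) + stimulus.

    Returns the rectified activity of every (cell type, column) at every step.
    """
    n_cols = len(stimulus[0])
    cells = [(t, c) for t in TYPES for c in range(n_cols)]
    v = {cell: TYPES[cell[0]]["rest"] for cell in cells}
    trace: Dict[Tuple[str, int], List[float]] = {cell: [] for cell in cells}
    for frame in stimulus:
        new_v = {}
        for t, c in cells:
            drive = TYPES[t]["rest"] + (frame[c] if t == "R" else 0.0)
            for pre, post, offset, count, sign in WIRING:
                if post == t and 0 <= c - offset < n_cols:
                    drive += sign * count * SCALE * relu(v[(pre, c - offset)])
            # Synchronous update: every neuron sees the previous time step.
            new_v[(t, c)] = v[(t, c)] + dt / TYPES[t]["tau"] * (drive - v[(t, c)])
        v = new_v
        for cell in cells:
            trace[cell].append(relu(v[cell]))
    return trace


def moving_bar(n_cols: int, frames_per_col: int, rightward: bool) -> List[List[float]]:
    """A bright bar that steps one column at a time, followed by blank frames."""
    order = range(n_cols) if rightward else range(n_cols - 1, -1, -1)
    frames = []
    for col in order:
        for _ in range(frames_per_col):
            frame = [0.0] * n_cols
            frame[col] = 1.0
            frames.append(frame)
    frames += [[0.0] * n_cols for _ in range(10)]  # let responses decay
    return frames


def peak_t_response(trace: Dict[Tuple[str, int], List[float]], n_cols: int) -> float:
    # Column 0 has no left neighbour, so its T cell is skipped.
    return max(max(trace[("T", c)]) for c in range(1, n_cols))


if __name__ == "__main__":
    n = 6
    for rightward in (True, False):
        trace = simulate(moving_bar(n, 5, rightward))
        label = "rightward" if rightward else "leftward"
        print(label, round(peak_t_response(trace, n), 3))
```

Running it should show a clearly positive peak for the rightward bar and a peak of essentially zero for the leftward one. The wiring table alone does not produce that result: direction selectivity here also depends on the time constants and thresholds, which are exactly the kinds of quantities a connectome does not record.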

An AI system then analyzed the neurons’ activity as the clips played, ultimately producing a model that could predict how every neuron in the artificial network would respond. Strikingly, the model also accurately predicted the responses of neurons in real flies that had viewed the same videos in earlier studies.

Although the paper describing the model has only just been published, the model itself has been available for more than a year and has already caught the attention of brain scientists. “I’m using this model in my own work,” Cowley says, adding that it helps him judge whether an experimental idea is viable before testing it on animals.

Future versions of the model are expected to extend beyond the visual system to other brain functions and computational tasks. “We now have a plan for how to build whole-brain models of brains that do interesting computations,” Macke says. If that plan succeeds, the approach could drive major advances where neuroscience and AI meet.