In recent years, rapid advances in generative AI have driven innovation across many industries. Traditional AI service architectures that rely heavily on large cloud-hosted models face significant challenges, including high latency, heavy bandwidth consumption, and privacy concerns. To address these issues, we propose an edge-cloud collaboration framework that combines ideas from Pathways and Mixture of Experts (MoE) models. The architecture improves generative AI service efficiency and user experience by having large cloud models and smaller edge models work together. This work offers insights for the distributed deployment and application of AI models in wireless mobile networks.

Abstract

Generative Artificial Intelligence (GenAI) has increasingly become a key component of future network services through content generation, providing users with diverse services. However, training and deploying large AI models often come with substantial computational and communication overheads. Moreover, relying on cloud-based generation tasks requires high-performance computing facilities and remote access capabilities, posing significant challenges to centralized AI services. Therefore, a distributed service architecture is urgently needed to migrate part of the tasks from the cloud to the edge, achieving more private, real-time, and personalized user experiences.

Inspired by Pathways and the MoE framework, this paper proposes a bottom-up architecture in which Big AI Models (BAIM) in the cloud work together with small edge models. We design a distributed training framework and a task-driven deployment scheme to deliver native generative AI services efficiently. The framework enables intelligent collaboration, improves system adaptiveness, gathers edge knowledge, and balances load between edge and cloud. A case study on image generation verifies the framework’s effectiveness. Finally, the paper outlines research directions to further leverage edge-cloud collaboration, laying the foundation for local generative AI and BAIM applications.

Introduction

Generative AI technologies have made remarkable progress in natural language processing and computer vision in recent years. Text generation models (e.g., GPT) and image generation models (e.g., DALL-E) show impressive capabilities on complex generative tasks. However, these models are typically large and require substantial computational resources and storage, so they rely primarily on resource-rich cloud environments. This centralized cloud service model therefore incurs significant computation and communication costs.

As 6G communication networks evolve from connected intelligence to collaborative intelligence, the cooperation between Big AI Models (BAIM) and smaller edge models has emerged as a new research focus. In future systems, cloud servers will integrate multiple edge models to maintain a unified BAIM. Post-training, BAIM can be decomposed into smaller task-specific models, enabling efficient edge deployment and delivering high-performance, low-latency native generative AI services.

Resolving issues related to adaptability, knowledge acquisition, and the overhead of centralized learning necessitates a scalable BAIM architecture with distributed model training capabilities.

Distributed AI Model Deployment at the Edge

Distributing generative AI models to edge services addresses the latency, bandwidth, and privacy challenges of centralized deployment. Moving data processing closer to the source reduces latency, lowers bandwidth usage, and improves both system efficiency and user experience.

Collaborative Strategies in Edge-Cloud Framework

To enhance both Quality of Experience (QoE) and Quality of Service (QoS) in 6G networks, combining BAIMs with edge services becomes crucial. This paper proposes an integrated framework combining native GenAI and cloud-based BAIM as a potential solution. First, we analyze current AI training and deployment strategies in edge-cloud collaboration, identify their limitations, and summarize the challenges that restrict distributed BAIM training and local GenAI deployment. Building on this, we propose a bottom-up BAIM architecture, coupled with a distributed training framework and a task-oriented deployment scheme. Through an image generation case study, we demonstrate the framework’s efficacy and discuss future research directions for making the most of collaboration between local GenAI and BAIM.

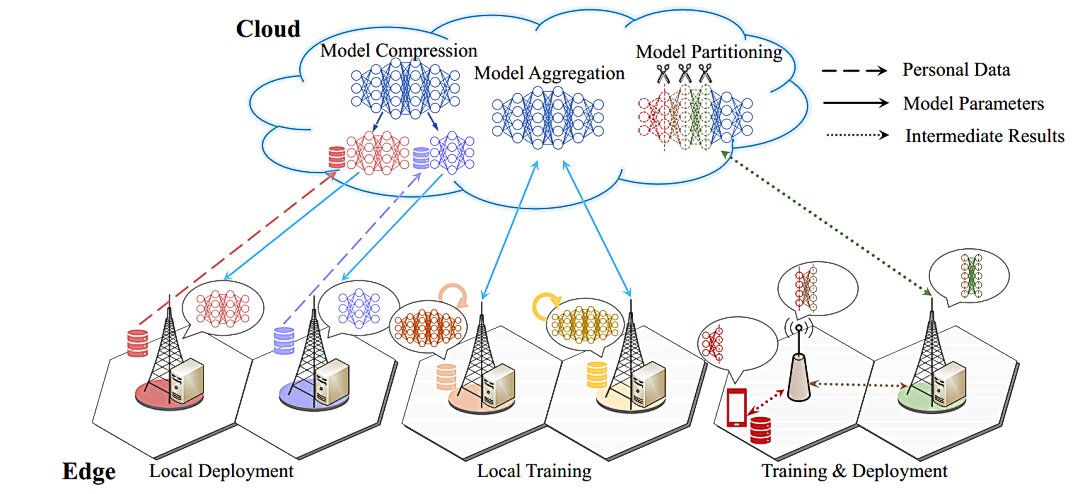

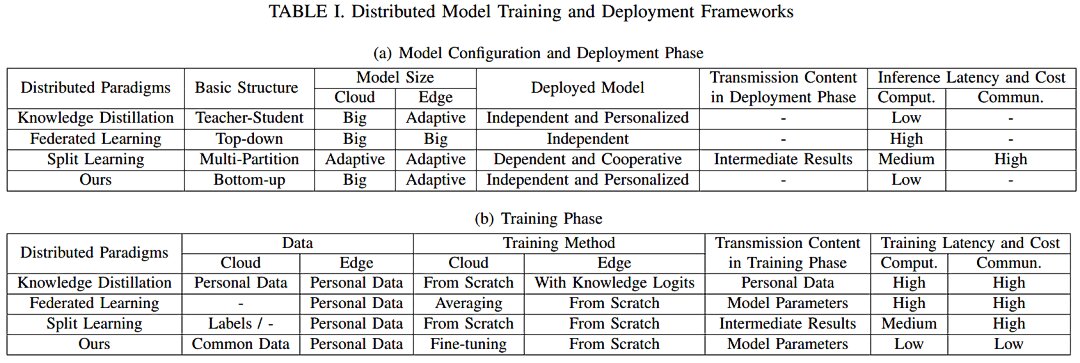

Overview of Model Training and Deployment in Edge-Cloud Collaboration

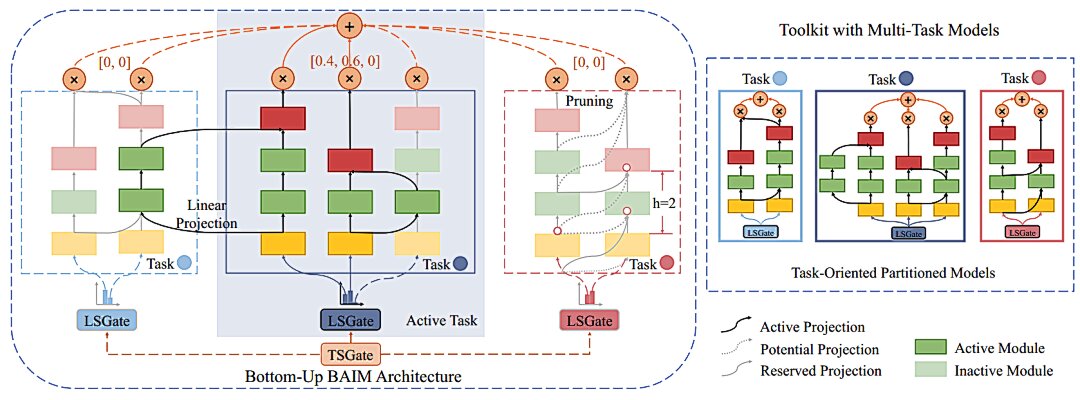

This section outlines the AI model training and deployment frameworks discussed in 3GPP SA1 Release 18 for edge-cloud collaboration. As illustrated in Fig. 1, these distributed AI frameworks involve the distribution and sharing of data and models, and often adopt techniques such as model downsizing and compression (e.g., Knowledge Distillation, KD), model aggregation (e.g., Federated Learning, FL), and model splitting (e.g., Split Learning, SL). We compare these frameworks with the proposed bottom-up BAIM architecture in Table 1, highlighting the limitations of existing frameworks and summarizing the major challenges that hinder distributed BAIM training and deployment.
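To make the model-aggregation idea concrete, here is a minimal sketch of sample-weighted parameter averaging in the style of FedAvg. It is an illustration only, not the aggregation rule of any framework compared in the paper; the names `fed_avg`, `edge_a`, and `edge_b`, and the toy parameter values, are assumptions introduced here.

```python
from typing import Dict, List, Tuple

# A model is represented as a mapping from parameter name to a flat list of floats.
Weights = Dict[str, List[float]]


def fed_avg(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average client parameters, weighting each client by its local sample count."""
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    first, _ = updates[0]
    merged: Weights = {name: [0.0] * len(vals) for name, vals in first.items()}
    for weights, n in updates:
        share = n / total  # this client's fraction of all training samples
        for name, vals in weights.items():
            for i, v in enumerate(vals):
                merged[name][i] += share * v
    return merged


# Two hypothetical edge nodes with 100 and 300 local samples.
edge_a = ({"w": [1.0, 2.0], "b": [0.0]}, 100)
edge_b = ({"w": [3.0, 6.0], "b": [1.0]}, 300)
global_weights = fed_avg([edge_a, edge_b])
print(global_weights)
```

Note that this kind of averaging assumes every node trains the same model structure, which is the homogeneity constraint that FL-style aggregation imposes.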

Fig. 1: Three Types of Frameworks for Training and Deploying AI Models through Edge-Cloud Collaboration

Fig. 2: Radar Chart Comparing the KPIs of Four Distributed Frameworks under Edge-Cloud Networks

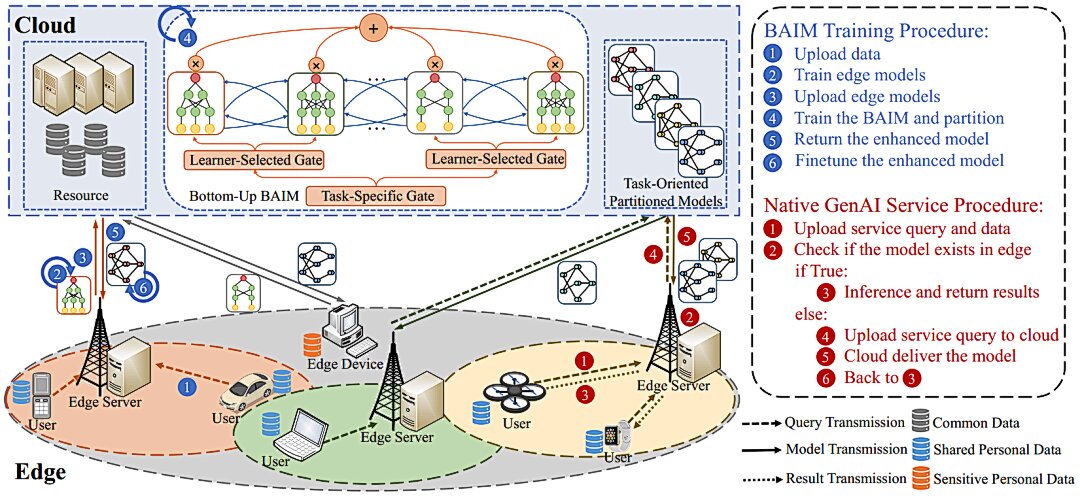

Bottom-Up BAIM Architecture: Distributed Model Training and Task-Oriented Deployment

Given the diversity of nodes and the complexity of multi-task services in 6G networks, a comprehensive bottom-up architecture is necessary. In this architecture, models are trained locally at the edge first. Compared to top-down approaches, training multiple single-task edge models and then integrating them in the cloud better meets diverse user needs.

Within this architecture, collaboration between large cloud models and small edge models ensures efficient handling of both single-task and multi-task scenarios. Edge models can fine-tune for specific tasks based on local data, offering the advantage of single-task specialization. In contrast, the cloud-based BAIM ensures knowledge transfer and multi-task learning capabilities across multiple tasks and edge nodes.
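The interplay described above can be sketched as a toy MoE-style container: edge-trained task experts are integrated into a cloud model, inputs are routed to the top-scoring experts, and a subset of experts is extracted again for edge deployment. This is a simplified illustration under stated assumptions, not the paper's implementation. The class `CloudBAIM`, its methods, the task names `denoise` and `upscale`, and the hand-supplied gate scores are all hypothetical; in a real MoE model the gate scores would come from a learned gating network.

```python
import math
from typing import Callable, Dict, List

Expert = Callable[[List[float]], List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


class CloudBAIM:
    """Toy cloud model assembled bottom-up from edge-trained task experts."""

    def __init__(self) -> None:
        self.experts: Dict[str, Expert] = {}

    def integrate(self, task: str, expert: Expert) -> None:
        """Register an expert that was trained at the edge for one task."""
        self.experts[task] = expert

    def route(self, gate_scores: Dict[str, float], x: List[float], top_k: int = 1) -> List[float]:
        """Blend the outputs of the top_k experts, weighted by renormalized gate probabilities.

        Every task named in gate_scores must already be integrated.
        """
        tasks = list(gate_scores)
        probs = softmax([gate_scores[t] for t in tasks])
        ranked = sorted(zip(tasks, probs), key=lambda tp: tp[1], reverse=True)[:top_k]
        norm = sum(p for _, p in ranked)
        out: List[float] = [0.0] * len(x)
        for task, p in ranked:
            y = self.experts[task](x)
            out = [o + (p / norm) * v for o, v in zip(out, y)]
        return out

    def decompose(self, tasks: List[str]) -> Dict[str, Expert]:
        """Extract only the experts a given edge node needs (task-oriented deployment)."""
        return {t: self.experts[t] for t in tasks}


baim = CloudBAIM()
baim.integrate("denoise", lambda x: [0.5 * v for v in x])
baim.integrate("upscale", lambda x: [2.0 * v for v in x])

blended = baim.route({"denoise": 0.0, "upscale": 0.0}, [2.0, 4.0], top_k=2)
single = baim.route({"denoise": 2.0, "upscale": 0.0}, [2.0, 4.0], top_k=1)
edge_bundle = baim.decompose(["denoise"])
print(blended, single, sorted(edge_bundle))
```

The sketch assumes experts return outputs of the same length as their input; only the routing and extraction logic is meant to carry over.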

Fig. 3: Workflow of the Proposed Framework and BAIM Training and Local GenAI Service Provision

Fig. 4: Bottom-Up BAIM Architecture and Task-Oriented Model Decomposition Involving Three Tasks

The BAIM architecture combines training strategies such as fine-tuning, freezing, and retraining to maximize model performance. Continual learning, model pruning, and few-shot learning provide self-maintenance, enabling the framework to adapt efficiently to changing tasks and resource conditions.
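As a rough illustration of how freezing and fine-tuning can be combined, the sketch below marks some parameters as non-trainable and applies a plain gradient step only to the rest. It assumes a toy parameter store rather than any specific deep learning library; `Param`, `freeze`, `sgd_step`, and the `backbone.`/`head.` naming are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Param:
    values: List[float]
    trainable: bool = True


def freeze(params: Dict[str, Param], prefixes: List[str]) -> None:
    """Mark every parameter whose name starts with one of the prefixes as frozen."""
    for name, p in params.items():
        if any(name.startswith(pre) for pre in prefixes):
            p.trainable = False


def sgd_step(params: Dict[str, Param], grads: Dict[str, List[float]], lr: float = 0.1) -> None:
    """Apply one gradient-descent step, skipping frozen parameters."""
    for name, p in params.items():
        if p.trainable and name in grads:
            p.values = [v - lr * g for v, g in zip(p.values, grads[name])]


# Hypothetical model: a shared backbone plus a task-specific head.
model = {
    "backbone.w": Param([1.0, 1.0]),
    "head.w": Param([1.0, 1.0]),
}
freeze(model, ["backbone."])  # keep shared knowledge fixed
sgd_step(model, {"backbone.w": [1.0, 1.0], "head.w": [1.0, 2.0]}, lr=0.5)
print(model["backbone.w"].values, model["head.w"].values)
```

Only the head moves in this step, which mirrors fine-tuning a task-specific part while the frozen part keeps its previously learned weights. Retraining would correspond to leaving every parameter trainable.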

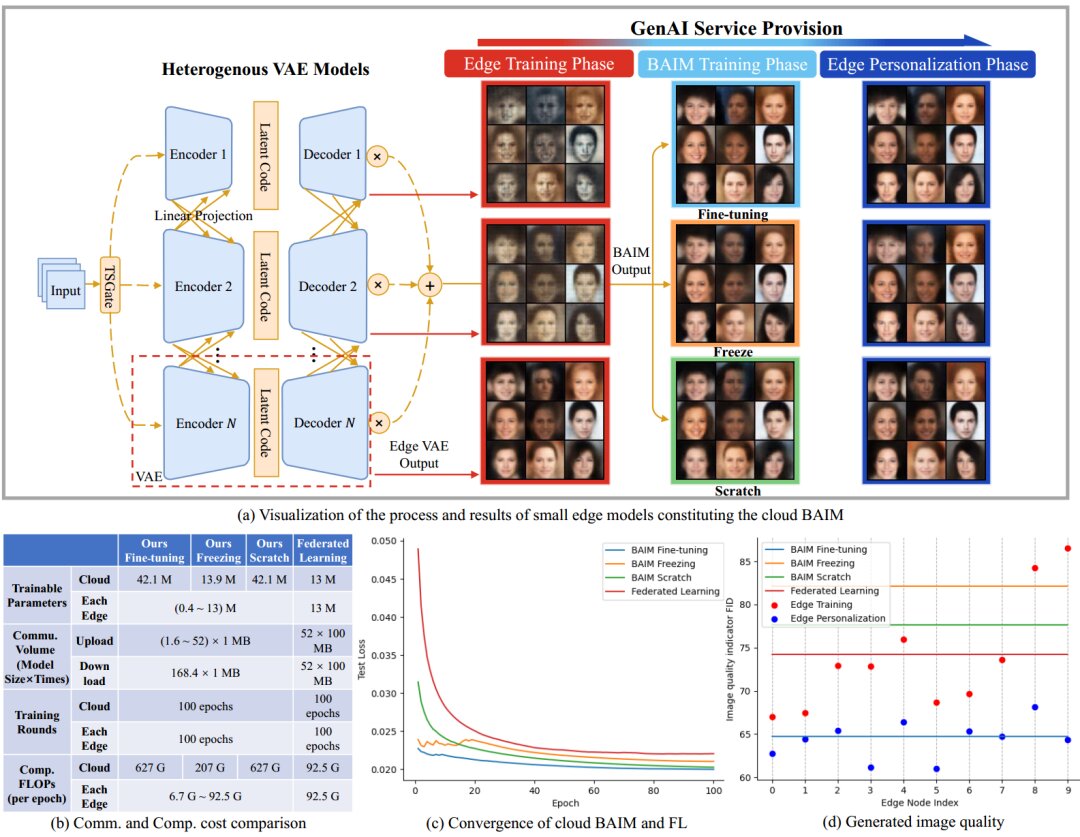

Case Study: Image Generation Service Provision

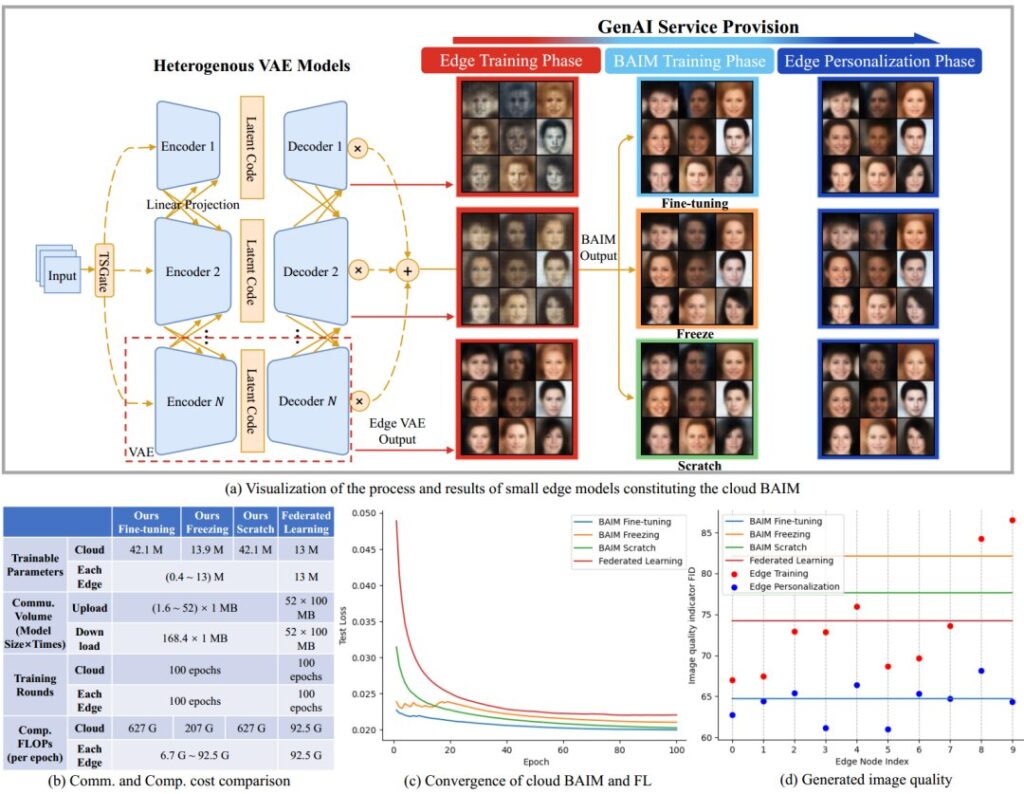

We present a typical image generation service case based on the Variational Autoencoder (VAE) model and compare it with the Federated Learning (FL) framework. All training and evaluation are conducted on the standard CelebA dataset. For fairness, edge nodes and the cloud train homogeneous models in the FL framework while keeping their local data separate.
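For reference, the standard VAE training objective is the evidence lower bound (ELBO) below. The text does not state the exact loss used in the case study, so this is the textbook form, assuming a diagonal Gaussian encoder $q_\phi(z \mid x) = \mathcal{N}(\mu, \operatorname{diag}(\sigma^2))$ and a standard normal prior $p(z) = \mathcal{N}(0, I)$ over a $d$-dimensional latent variable.

```latex
\mathcal{L}(\theta, \phi; x)
  = \mathbb{E}_{q_\phi(z \mid x)}\!\left[ \log p_\theta(x \mid z) \right]
  - D_{\mathrm{KL}}\!\left( q_\phi(z \mid x) \,\|\, p(z) \right),
\qquad
D_{\mathrm{KL}}\!\left( q_\phi(z \mid x) \,\|\, p(z) \right)
  = \frac{1}{2} \sum_{j=1}^{d} \left( \mu_j^2 + \sigma_j^2 - \log \sigma_j^2 - 1 \right).
```

The first term rewards accurate reconstruction of the input image, and the KL term keeps the latent code close to the prior so that new images can be generated by sampling $z \sim p(z)$ and decoding.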

Fig. 5: Case Study of Image Generation Service Provision

The results show that our method significantly reduces the communication burden, at the price of higher cloud-side computation. The edge nodes’ costs, however, are notably reduced, which shows how edge-cloud collaboration can shift workload away from resource-constrained nodes.

Challenges and Future Research Opportunities

Despite the proposed framework’s efficient service capabilities in 6G networks, challenges remain in data management, model fusion, and node management, requiring further research and attention.

Conclusion

The synergy between native GenAI and cloud-based BAIM is pivotal in 6G communication networks and can significantly enhance QoE and QoS. The proposed framework addresses BAIM-related challenges efficiently, reduces communication and edge-side costs in the image generation case study, and points the way for future research.