In a dramatic reveal, Mark Zuckerberg pulled the new Orion AR glasses from a safe, proudly declaring, “These are the most advanced glasses in the world.” Developed over a decade within Meta and costing an estimated $10,000, these prototype glasses pack in several technical innovations worth a closer look.

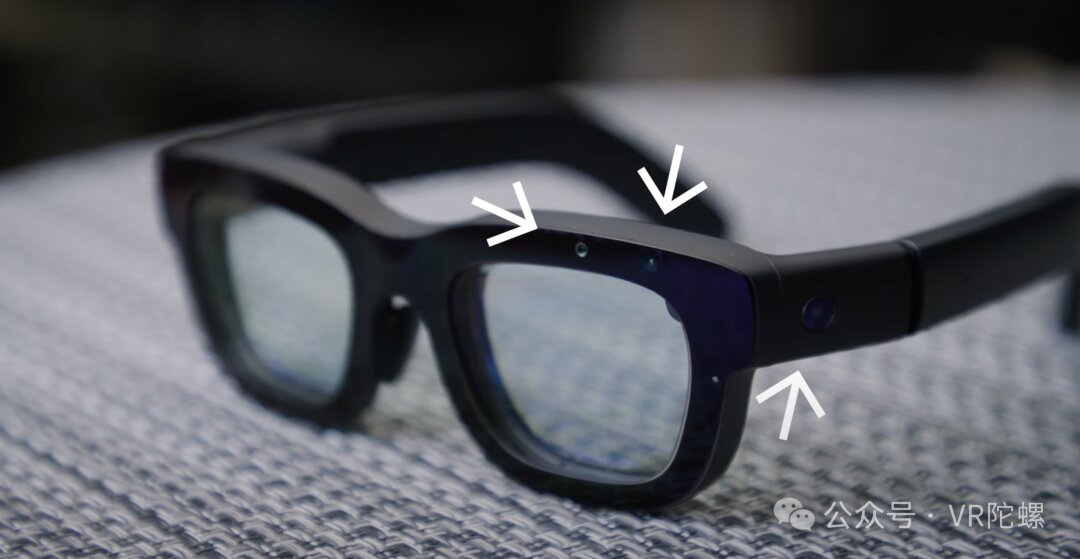

At first glance, Orion’s chunky design stands out, with a thicker frame than typical glasses. Even so, Zuckerberg stresses that it weighs less than 100 g. The Orion system comprises three components: the AR glasses themselves, an independent computing unit called the Wireless Compute Puck, and an EMG neural wristband.

Crucially, Orion boasts a 70-degree diagonal field of view, the widest yet achieved with a combination of diffractive waveguide technology and full-color Micro-LED optics, and made possible by using silicon carbide as the waveguide material. Previously, diffractive waveguides largely relied on glass or resin. Glass provides higher transparency and a refractive index of 2.0-2.2, but it limits design flexibility and adds weight. Resin, a popular choice for its light weight and drop resistance, offers a refractive index of only 1.5-1.7, which restricts how far the field of view can be expanded.

Silicon carbide emerges as a game-changer. Known for its strong chemical stability and low signal transmission loss, this semiconductor material offers a refractive index of up to 2.6. A higher index keeps light trapped inside the waveguide by total internal reflection over a wider range of angles, which improves light guidance, reduces optical loss, and leaves room for a wider field of view. Its lower density compared to glass also suggests a lighter final product and a more comfortable fit.
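To make the link between refractive index and light guidance concrete, here is a minimal sketch based on Snell's law. It computes the critical angle for total internal reflection at a waveguide-to-air boundary, using the index values quoted above. The function names are illustrative, and the "guided range" is only a simplified upper bound on the usable angles, not a model of Orion's actual optics.

```python
import math


def critical_angle_deg(n_waveguide: float, n_outside: float = 1.0) -> float:
    """Smallest internal angle (measured from the surface normal) at which
    light is totally internally reflected: sin(theta_c) = n_outside / n_waveguide."""
    return math.degrees(math.asin(n_outside / n_waveguide))


def guided_range_deg(n_waveguide: float) -> float:
    """Width of the angular band between the critical angle and grazing
    incidence (90 degrees). Light inside this band stays in the waveguide."""
    return 90.0 - critical_angle_deg(n_waveguide)


# Index values taken from the article; the material labels are for display only.
materials = [
    ("resin (low end)", 1.5),
    ("resin (high end)", 1.7),
    ("glass", 2.0),
    ("silicon carbide", 2.6),
]

for name, n in materials:
    print(
        f"{name:18s} n={n:.1f}  "
        f"critical angle={critical_angle_deg(n):5.1f} deg  "
        f"guided range={guided_range_deg(n):5.1f} deg"
    )
```

The critical angle falls from roughly 42 degrees at n = 1.5 to roughly 23 degrees at n = 2.6, so the higher-index material can carry a noticeably wider cone of image angles through the lens.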

Apart from its notable waveguide material, Orion integrates a variety of advanced technologies. It supports 6DoF SLAM positioning, gesture recognition, and eye-tracking, enriched further by the EMG neural wristband. Interaction is diverse, ranging from head controls to button inputs on the glasses themselves.

Orion is equipped with seven cameras and sensors, distributed across the glasses for comprehensive tracking and positioning. Two cameras in the front and at the sides of the temples take care of 6DoF SLAM, while two inside the frame handle eye tracking, with infrared lights assisting in data capture.

The display is well suited to indoor settings, though outdoor brightness needs improvement. To adapt to complex lighting conditions, Meta has integrated electrochromic and photochromic technologies. Electrochromic materials, like those developed by Boru Yu in China, allow opacity to be controlled across a 10-80% range, whereas photochromic materials respond to ambient light on their own and adjust rapidly without additional energy input.

The Compute Puck, an oval device without a screen, houses processors and sensors for face modeling required in 3D holographic calls. It supplies computational power, transmitting visuals to the glasses wirelessly, while the wristband connects via Bluetooth.

Meta builds redundancy into gesture tracking. The wristband captures some gestures, while the cameras on the glasses handle computer-vision-based gesture recognition, so interaction remains possible even when one method fails.
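The redundancy idea can be sketched as a simple priority-ordered fallback. This is an illustration only: the source names, the `read_gesture` helper, and the use of `RuntimeError` to signal an unavailable input are all assumptions, not a description of Meta's software.

```python
from typing import Callable, Optional, Sequence, Tuple

# A gesture source is a (name, reader) pair. The reader returns a gesture
# label, returns None if nothing was detected, or raises RuntimeError if
# the source is unavailable (hypothetical convention for this sketch).
GestureSource = Tuple[str, Callable[[], Optional[str]]]


def read_gesture(sources: Sequence[GestureSource]) -> Optional[Tuple[str, str]]:
    """Try each source in priority order and return (source_name, gesture)
    from the first one that reports a gesture, or None if none does."""
    for name, read in sources:
        try:
            gesture = read()
        except RuntimeError:
            continue  # source unavailable, fall through to the next one
        if gesture is not None:
            return name, gesture
    return None


def wristband_reader() -> Optional[str]:
    # Simulate a wristband that has lost its connection.
    raise RuntimeError("wristband disconnected")


def camera_reader() -> Optional[str]:
    # Simulate the camera pipeline recognizing a pinch.
    return "pinch"


result = read_gesture([("wristband", wristband_reader), ("camera", camera_reader)])
print(result)
```

Here the wristband is tried first and the cameras act as the fallback, which is one plausible ordering; a real system might instead fuse both inputs rather than pick one.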

Meta’s EMG wristband uses electromyography (EMG) to translate the electrical signals produced by muscle activation into interactive controls. The approach, previously explored as a way to help people with disabilities control prosthetics, now provides seamless gesture input for Orion.
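As a rough illustration of how raw muscle signals can become discrete inputs, the sketch below applies a textbook EMG processing chain: rectify the signal, smooth it into an envelope with a moving average, then flag the moments the envelope crosses a threshold. The signal is synthetic and the window size and threshold are arbitrary assumptions; this is not Meta's pipeline.

```python
from typing import List, Sequence


def emg_envelope(samples: Sequence[float], window: int) -> List[float]:
    """Rectify the signal (absolute value) and smooth it with a trailing
    moving average over the last `window` samples."""
    rectified = [abs(s) for s in samples]
    envelope = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            running -= rectified[i - window]  # drop the sample leaving the window
        envelope.append(running / min(i + 1, window))
    return envelope


def detect_activations(envelope: Sequence[float], threshold: float) -> List[int]:
    """Return the sample indices where the envelope rises to or above the
    threshold after having been below it (activation onsets)."""
    onsets = []
    active = False
    for i, level in enumerate(envelope):
        if level >= threshold and not active:
            onsets.append(i)
            active = True
        elif level < threshold:
            active = False
    return onsets


# Synthetic signal: quiet baseline, a short burst of muscle activity, then quiet again.
signal = [0.05, -0.05] * 10 + [1.0, -1.0] * 5 + [0.05, -0.05] * 10

onsets = detect_activations(emg_envelope(signal, window=4), threshold=0.5)
print("activation onsets at samples:", onsets)
```

A real wristband would read many electrode channels and classify patterns across them, but the same rectify, smooth, and threshold steps are a common starting point.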

Orion excels at 6DoF spatial positioning, delivering MR experiences comparable to those of Meta Quest and Vision Pro in a notably slimmer and lighter package. In the Messenger app, Orion users can also make cross-platform 3D calls akin to Apple’s Persona, in which virtual 3D avatars mirror gestures and facial movements in real time.

Its gaming potential is explored through pixelated shooting games that use head and eye tracking to lock onto targets and a hand pinch to fire. Multiplayer experiences like a “ping-pong” application use QR codes for precise spatial setup, enabling interactive gameplay through virtual interfaces.

AI is another major focal point at Meta Connect, highlighted by the introduction of Llama 3.2, Meta’s first open-source multimodal model, with Meta aiming for its AI assistant to become the most widely used by year end. On Orion, AI shows what it can do through text-to-image creation and contextual object recognition: imagine an AI-driven recipe generator that identifies the ingredients in view and guides users through cooking step by step.

Real-time visual processing also enhances the new Ray-Ban Meta glasses, delivering swift interpretations and translations, a leap from previous models’ post-capture analysis.

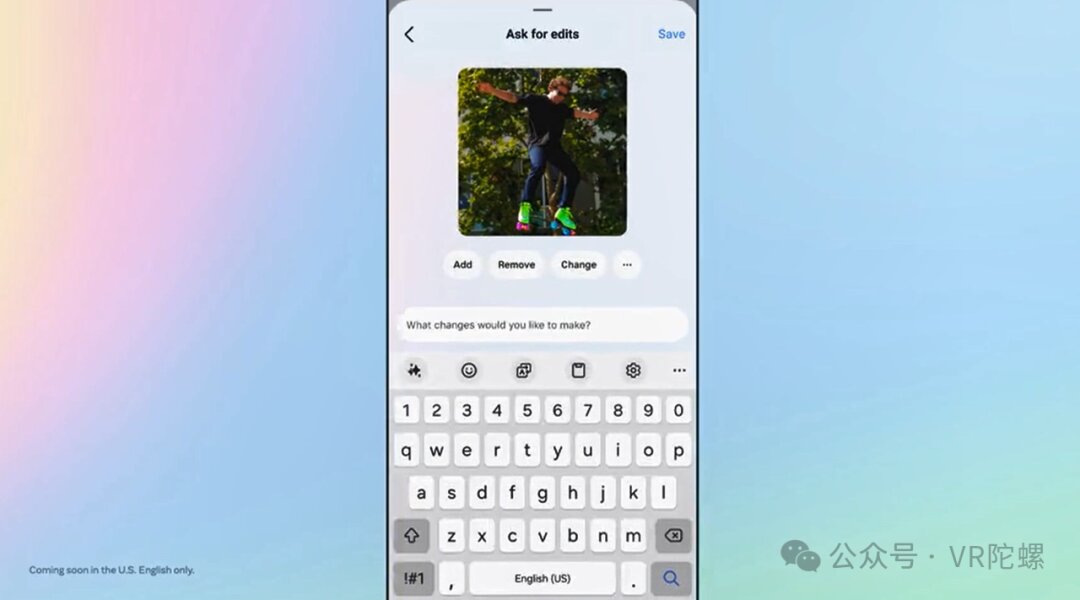

Meta’s AI suite offers robust capabilities, including image editing, voice interactions, brand new tools like AI Studio for creating lifelike AI avatars, experimental video dubbing in Reels, and a range of Llama 3.2 functions that analyze charts or offer travel suggestions. However, EU regulations currently restrict Llama 3.2 deployment across Europe.

While these AI advancements are not fully embedded in Orion yet, future integration across Meta’s ecosystem appears promising.

Meta’s showcase of its forward-looking vision, much like Apple’s with Vision Pro, underlines that balancing display quality, field of view, weight, size, and power consumption remains a formidable challenge. Orion offers a glimpse into AR’s untapped potential, sparking crucial discussions about future improvements in display, interaction, and AI functionality. Widespread adoption, however, is still some way off.

In conclusion, while Meta’s experimentation in AR is an impressive display of technological prowess, it remains a journey rather than a final destination.