Subtitles have long been treated as an optional extra for the modern viewer, handy in noisy environments or for those who multitask during their daily commutes. For individuals who are deaf or hard of hearing, however, subtitles are more than a convenience: they are a critical accessibility option.

In the blockbuster film “Free Guy,” the protagonist discovers an array of hidden information through special glasses, transforming him from a passive non-player character (NPC) into an aware participant. In reality, a similar shift is under way with smart glasses that offer “live subtitles,” giving users access to a richer world of information. The effect is less cinematic but equally impactful.

Overcoming Barriers with Subtitled Vision

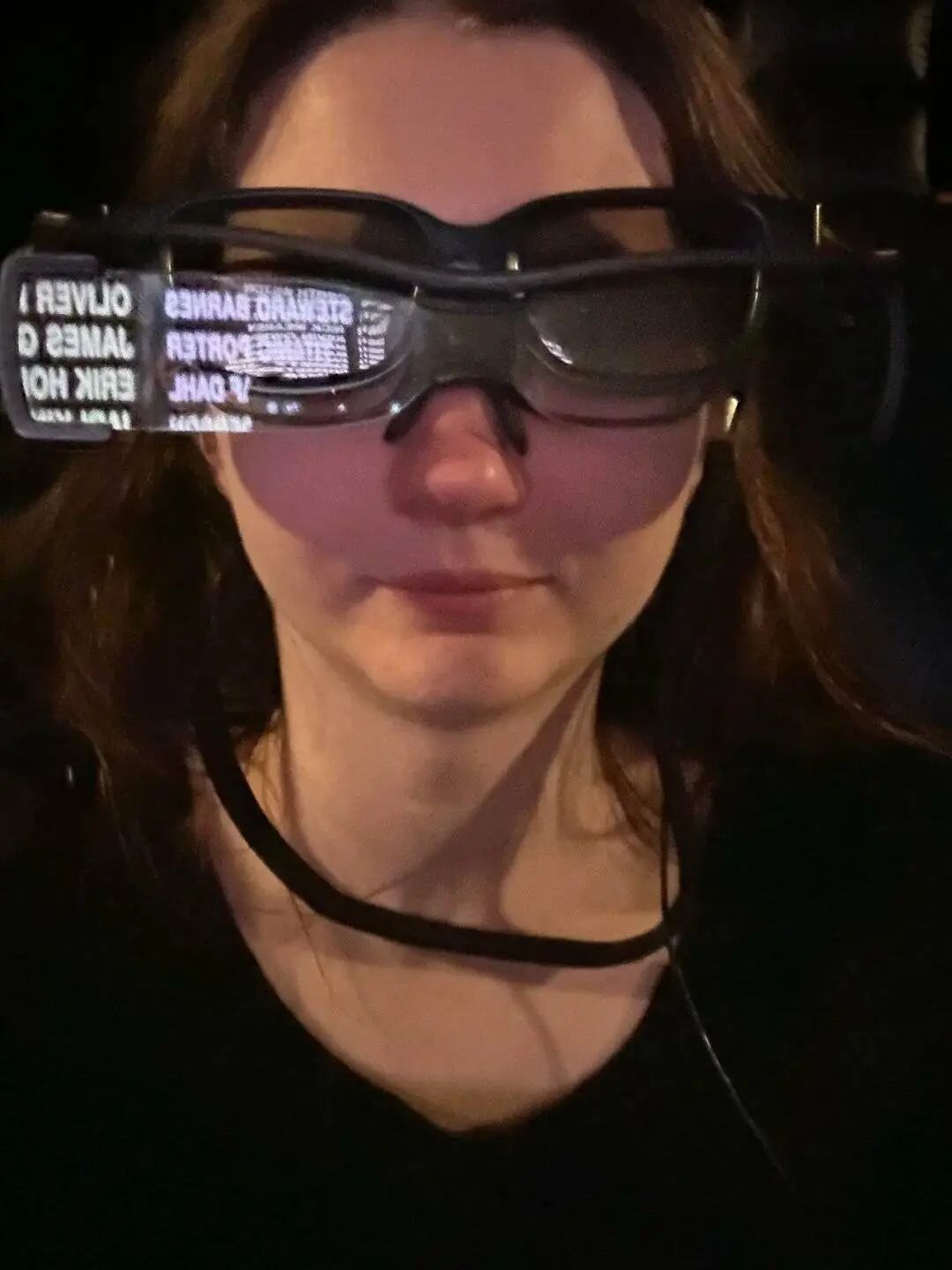

The significance of technology lies in its ability to provide alternatives when traditional paths are blocked. A TikTok video showcasing a hearing-impaired vlogger unboxing a pair of subtitle glasses garnered 800,000 likes, capturing unfiltered emotions of triumph and joy. Holding these glasses, she expressed, through sign language and spoken words, how she had long awaited this technology.

The glasses, known as Hearview, resemble regular spectacles but can “visualize” surrounding sounds, turning speech the wearer cannot hear into bright, sci-fi-green subtitles. Released in May, Hearview was built by a Chinese tech company for an international audience, with a focus on entertainment and practical use cases such as watching movies or game streams.

These glasses not only enable viewing favorites like “Friends” but also facilitate face-to-face interactions, making dining out, shopping, and navigating more accessible for those with hearing impairments.

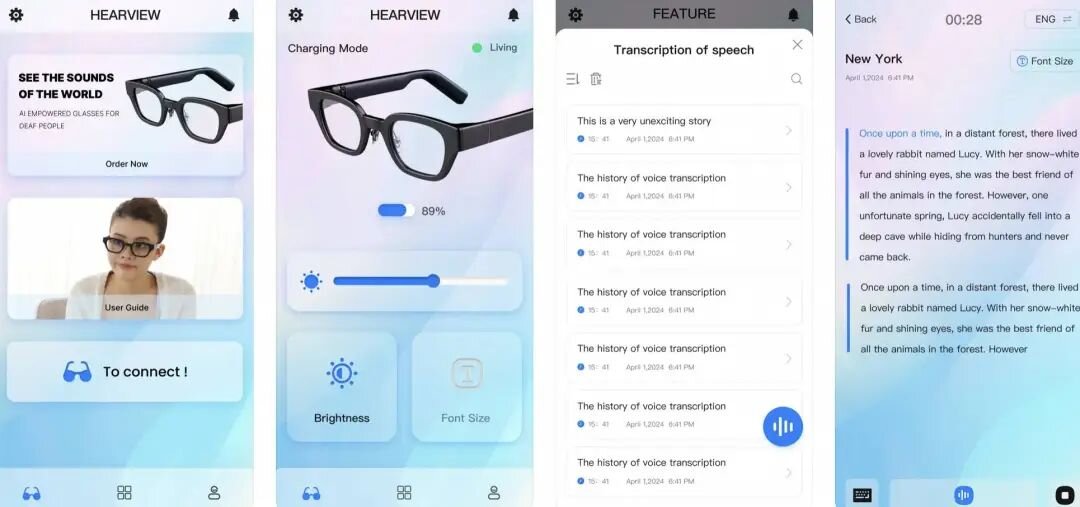

Hearview pairs with a mobile app that captures sound through the smartphone’s microphone. An AI algorithm converts the speech to text, which is then projected as subtitles on the glasses. Users can respond by typing messages into the app, which converts them into audio. The app also archives subtitles for future reference.
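For readers curious how such a loop fits together, the flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the product's actual software: `SubtitleSession`, `fake_transcribe`, `fake_speak`, and the 32-character line width are all hypothetical names and values, and the two stand-in functions simply treat the "audio" as UTF-8 text so the sketch runs without any speech engine.

```python
import textwrap
from dataclasses import dataclass, field
from typing import Callable, List


def fake_transcribe(audio: bytes) -> str:
    """Stand-in for a speech-to-text engine (assumption: audio is UTF-8 text)."""
    return audio.decode("utf-8")


def fake_speak(text: str) -> bytes:
    """Stand-in for a text-to-speech engine (assumption: returns UTF-8 bytes)."""
    return text.encode("utf-8")


@dataclass
class SubtitleSession:
    """Hypothetical phone-side session: microphone in, subtitle lines out."""
    transcribe: Callable[[bytes], str]
    speak: Callable[[str], bytes]
    line_width: int = 32  # assumed number of characters that fit on one display line
    archive: List[str] = field(default_factory=list)

    def on_audio(self, chunk: bytes) -> List[str]:
        """Convert one captured audio chunk into display-ready subtitle lines."""
        text = self.transcribe(chunk).strip()
        if not text:
            return []  # silence or noise: nothing to show
        self.archive.append(text)  # keep the transcript for later reference
        return textwrap.wrap(text, width=self.line_width)

    def reply(self, typed: str) -> bytes:
        """Turn a typed reply into audio for the other person to hear."""
        return self.speak(typed)


session = SubtitleSession(transcribe=fake_transcribe, speak=fake_speak)
subtitle_lines = session.on_audio(b"Would you like a table by the window?")
reply_audio = session.reply("Yes, please")
```

In a real device the two stand-ins would be replaced by actual speech recognition and speech synthesis, and the wrapped lines would be sent to the glasses rather than kept in a list.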

Hearview claims 95% accuracy and a pickup range of up to 10 meters, but users question how well it works in noisy environments. The app tries to filter out noise, yet conclusive tests under such conditions remain scarce. A seven-hour battery life and a lightweight, durable design make it a plausible everyday device. The $1,799 price, however, puts it out of reach for many potential users.

Hearview currently supports only English and Spanish, with German and French planned. It does not translate between languages. The bright green text is easy to read, but the lack of other color options is somewhat limiting.

The Evolution of Subtitle Glasses

Real-time subtitle glasses emerged as a trend two years ago, leading to several interesting but imperfect projects. British AR startup XRAI developed the XRAI Glass app, compatible with multiple AR glasses, to transcribe voice to text. Despite its promise, issues such as discomfort when combined with hearing aids and decreased efficacy amidst background noise have been reported.

AR glasses are also expensive, with prices starting at $300, and apps like XRAI Glass charge additional monthly fees. Products like these underline the need for continued improvement and lower costs.

Products similar to Hearview are also sold in China, such as the Listen Vision subtitle glasses by Liuliang Technologies, which come in a hearing-aid version and a translation version. Although they can handle Mandarin, some dialects, and foreign languages, user feedback points to room for expansion, especially in dialect support, which is crucial for older, dialect-speaking users.

An intriguing prototype, Transcribe Glass, designed by students from Yale and Stanford, clips onto existing glasses and connects to an app that lets users choose their preferred speech-to-text software. It is priced at an affordable $95, but its release date remains unconfirmed, leaving potential users waiting for the launch.

Multifunctional AR smart glasses aimed at a wider audience also include subtitle features. Specialists, however, caution hearing-impaired users against relying on them, since devices not designed specifically for this purpose may fall short.

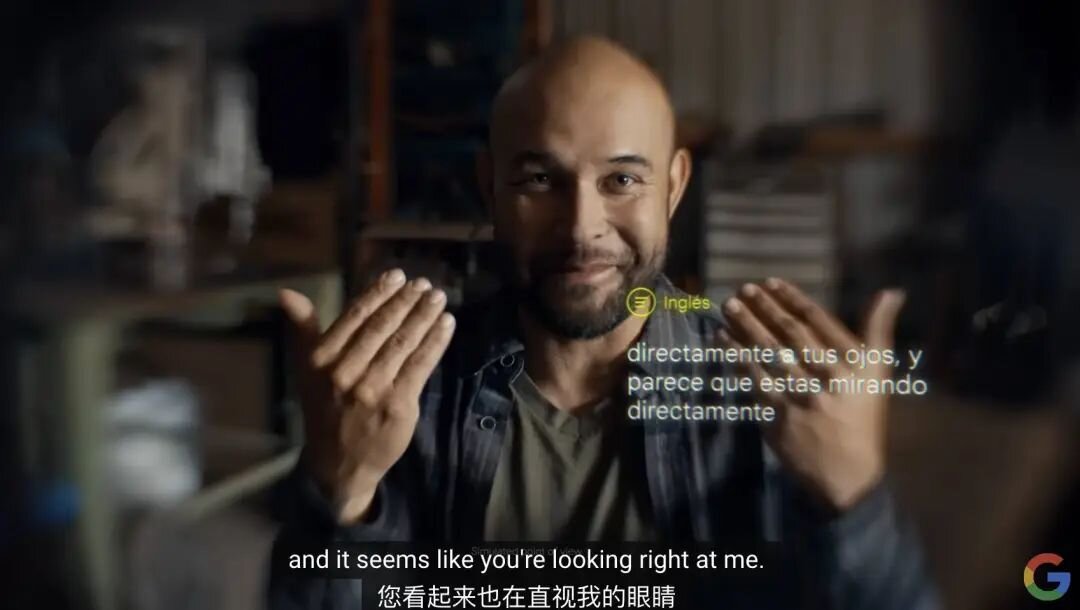

Industry giants like Google hold the potential to lead advancements in this field. Their 2022 AR glasses prototype offered real-time translation across 24 languages, including American Sign Language, although it remains a concept rather than a marketable product.

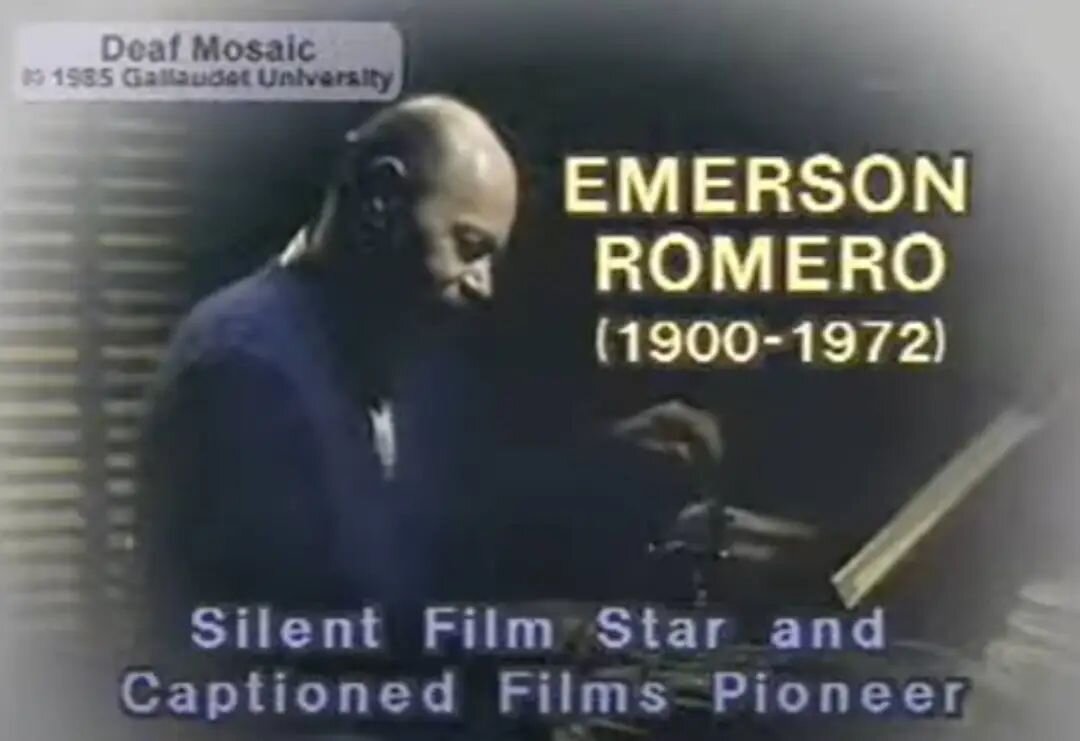

The potential for subtitles to empower communication without conspicuous devices is vast. World Health Organization data highlights the global impact of hearing loss, estimating that 466 million people experience hearing difficulties. Subtitles play a vital role in bridging communication gaps. They were pioneered by silent-movie actor Emerson Romero, who introduced them to sound films in 1947.

In the silent film era, audiences could grasp stories through visuals and text inserts. Yet, with the rise of sound films, subtitles became a necessary innovation to ensure accessibility for hearing-impaired audiences.

Legacy and Future Prospects of Subtitle Glasses

Technologies inspired by Romero’s vision continue to evolve, extending beyond cinema to real-time subtitle glasses in the name of inclusivity. Notably, Sony’s CC subtitle glasses, first introduced in 2012, went a step further by projecting both dialogue and non-verbal cues such as music and sound effects onto screens.

The ability to customize subtitle settings and compatibility with 3D films have elevated the cinema-going experience, circumventing the impracticality of wearing multiple glasses simultaneously.

While current technologies are not without their flaws, they signal a promising future for those with hearing impairments, echoing the words of “Parasite” director Bong Joon-ho at the Golden Globes: “Once you overcome the one-inch tall barrier of subtitles, you will be introduced to so many more amazing films.”

Subtitles facilitate greater inclusivity by allowing hearing-impaired individuals to engage equally in conversations and cultural experiences.

Advanced AR glasses, like Google’s concept, seek to integrate subtitles seamlessly while preserving eye contact and the natural flow of conversation. As these devices evolve, unobtrusiveness remains the ultimate goal: communication aids that stay in the background, driven by the timeless human need for connection.

With each technological advance, the digital divide narrows, giving more people access to a world enriched by sight and sound.