Meta’s first true AR glasses, Orion, unveiled in September, marked a significant step forward in interaction design by combining three input methods: eye tracking, hand gestures, and a myoelectric wristband. The wristband has attracted the most interest. The underlying technology is not new, but it is emerging as a possible cornerstone of future AR interaction.

Before turning to true AR glasses, it helps to look at the earlier generation of AI glasses, such as Ray-Ban Meta. These devices offer basic functions controlled through touchpads, physical buttons, and voice commands, which is sufficient for simple AI interactions and media playback. Adding a display to the glasses broadens what they can do and, in turn, calls for more sophisticated interaction methods.

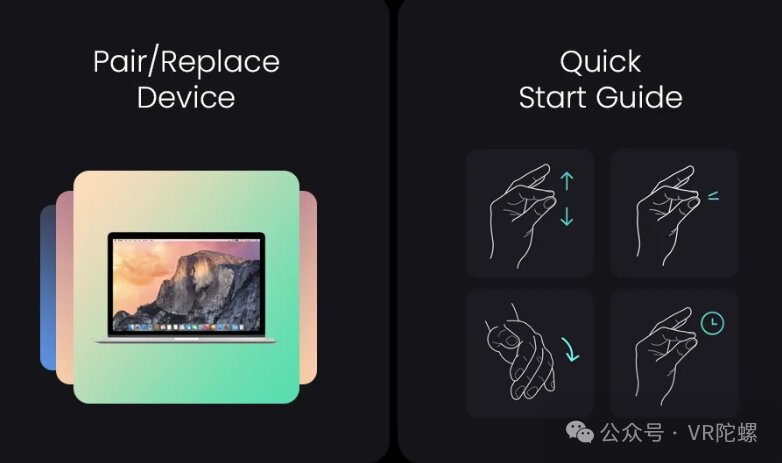

With the advent of genuine AR glasses, the range of applications is poised to grow rapidly, and complex scenarios such as gaming and virtual workspaces will require richer input methods. The interaction solution Meta presented with Orion combines eye tracking and gesture recognition with a myoelectric wristband.

Taking each component in turn, the “eye movement + gestures” model, initially defined by Vision Pro for MR headsets, offers a natural user interface that needs no external controllers, which makes operation seamless and intuitive. The model has two main limitations, however. It depends on cameras, so recognition can falter in poor lighting or when the hands are obstructed, and it generally consumes more power.

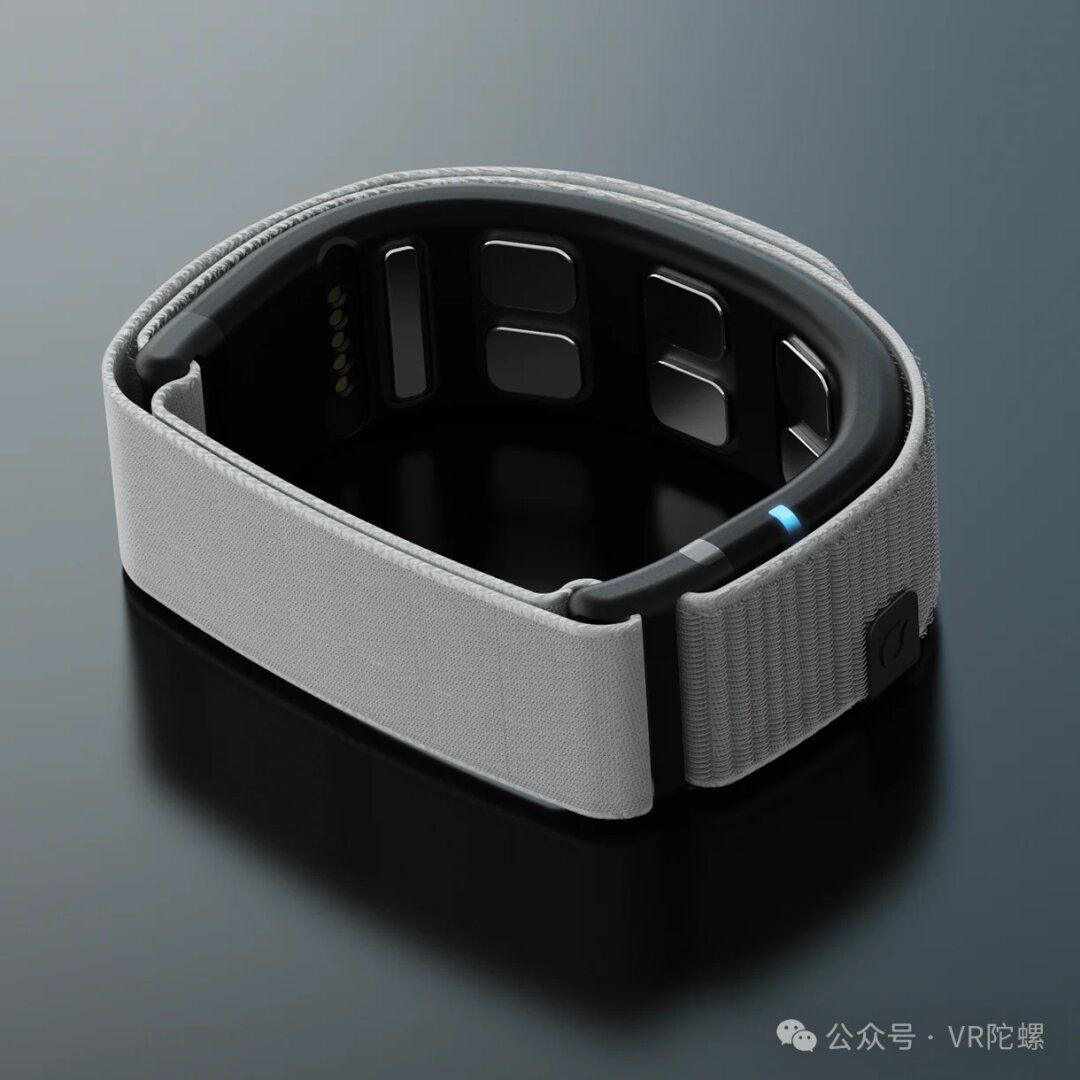

Myoelectric wristbands offer a compelling alternative. Using surface electromyography (EMG), they capture the electrical signals that muscles generate when they move and decode those signals into intended actions. Because the signal is read at the wrist rather than seen by a camera, this approach can outperform vision-based interaction: it picks up subtle finger movements regardless of hand position and remains accurate even when the hands are in pockets.
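To make the "capture, then decode" idea more concrete, here is a minimal sketch in Python of a classic textbook approach to detecting muscle activation in a single EMG channel: rectify the signal, smooth it into an envelope with a moving average, and apply a threshold. It is not how Orion's wristband works, and production decoders are generally described as using machine-learning models over many channels. The synthetic signal, the function names, the window size, and the threshold are all assumptions chosen for illustration.

```python
import random


def rectify(samples):
    """Full-wave rectification: EMG swings around zero, so take magnitudes."""
    return [abs(s) for s in samples]


def moving_average(samples, window):
    """Trailing moving average, used here as a simple envelope estimate."""
    out = []
    running = 0.0
    for i, s in enumerate(samples):
        running += s
        if i >= window:
            running -= samples[i - window]  # drop the sample leaving the window
        out.append(running / min(i + 1, window))
    return out


def detect_activations(envelope, threshold):
    """Return (start, end) index pairs where the envelope exceeds the threshold."""
    events = []
    start = None
    for i, value in enumerate(envelope):
        if value > threshold and start is None:
            start = i
        elif value <= threshold and start is not None:
            events.append((start, i))
            start = None
    if start is not None:
        events.append((start, len(envelope)))
    return events


def synthetic_emg(n_samples, burst, rng):
    """Hypothetical test signal: quiet baseline noise plus one strong burst."""
    lo, hi = burst
    return [rng.gauss(0.0, 1.0 if lo <= i < hi else 0.05) for i in range(n_samples)]


rng = random.Random(0)  # fixed seed so the run is repeatable
signal = synthetic_emg(1000, burst=(400, 600), rng=rng)
envelope = moving_average(rectify(signal), window=50)
events = detect_activations(envelope, threshold=0.3)
print(events)  # index ranges flagged as muscle activity
```

The detected range lags the true burst slightly at both ends because the trailing window needs time to fill and to empty. A real gesture decoder would go well beyond this on/off detection and classify which finger movement produced the activity.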

The versatility of myoelectric technology in wearables is evident: it is not bound by the constraints of the visual environment, and it offers better privacy than camera-based gesture recognition. Its current limitations, namely precision that falls short of traditional input methods and support for only basic gestures, are expected to ease as AI advances. Meta, for instance, foresees EMG wristband technology delivering keyboard-like accuracy by 2028.

The history of EMG reaches back to 1666, and the technique has evolved significantly since then, finding its way into human-machine interfaces in recent decades. In 2008, a collaboration between Microsoft and the University of Washington highlighted EMG’s potential for computer interaction.

The Myo armband, introduced by Canada’s Thalmic Labs in 2014, was a landmark: it recognized a range of physical gestures and paved the way for broader adoption of EMG technology. Myo was eventually discontinued, but its legacy continued. Thalmic Labs rebranded as North and pivoted to AR development, and Google acquired the company in 2020.

CTRL-labs picked up the trail by building on Thalmic’s intellectual property, and was later acquired by Facebook with a focus on advancing neural interaction.

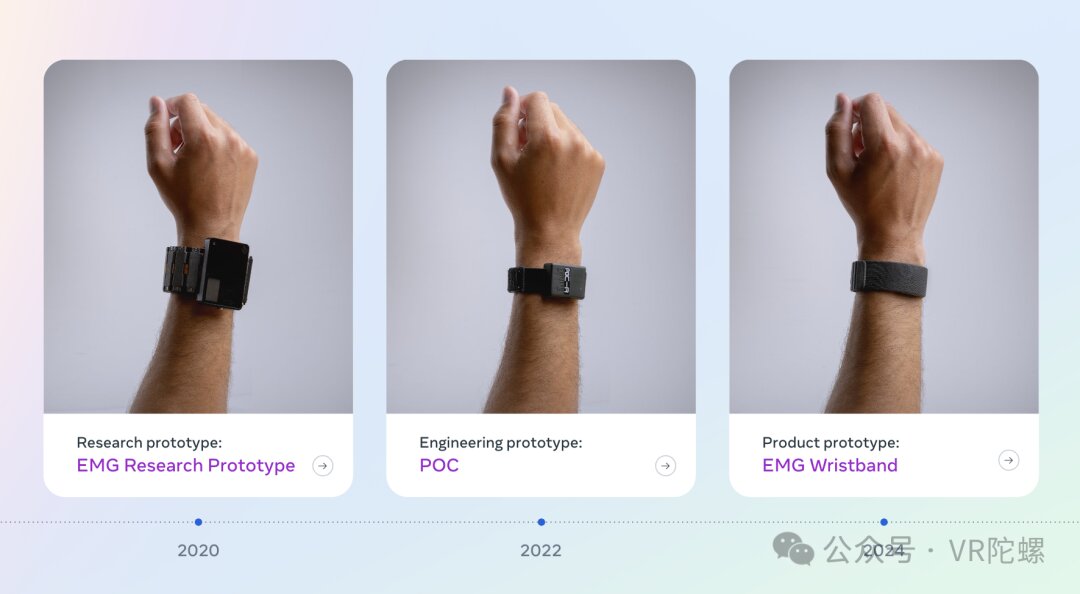

Meta’s ongoing work on EMG promises further integration and miniaturization of these devices. With plans for an EMG-enabled AR product, it could set a precedent for consumer applications by 2025.

Worldwide, several companies are already pioneering this technology. Israeli firm Wearable Devices introduced its Mudra Band in 2023, compatible with various Apple products, further showcasing the market’s readiness for EMG-like innovations.

Unlike Meta’s EMG approach, the Mudra Band uses surface nerve conduction (SNC) sensors. These respond only to voluntary finger movements, whereas traditional EMG captures a wider array of motions, so the two approaches differ in how much information about the wearer they collect and therefore in their privacy profile.

Wearable Devices is also actively developing products for augmented reality. At AWE, its technology was demonstrated working with Lenovo’s ThinkReality A3 glasses, pointing towards expanding applications in XR environments.

Collaborations such as the one between Wearable Devices and China’s ThunderBird Innovations show the industry moving towards refining and mass-producing next-generation neural interfaces for AR glasses, with potential market breakthroughs expected around 2025.

Despite current challenges such as cost and user experience, the convergence of myoelectric technology with AR glasses offers a compelling vision of future interaction. As companies like Meta push the technology forward, neural wristband interaction for AR could arrive sooner than anticipated, which would make 2025 a pivotal year for this field.