Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) designed to mitigate the vanishing gradient problem that limits traditional RNNs. LSTMs excel at capturing long-term dependencies in sequential data, which makes them highly effective for tasks such as machine translation, speech recognition, and time series forecasting[1][2].

LSTM Architecture

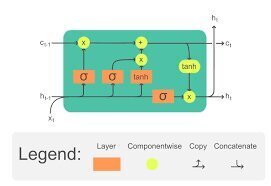

An LSTM network consists of memory cells and three types of gates: input gate, forget gate, and output gate. These components work together to control the flow of information:

- Memory Cell: Stores information over long periods in its cell state.

- Input Gate: Determines what new information should be stored in the memory cell.

- Forget Gate: Decides which information should be discarded from the memory cell.

- Output Gate: Controls which parts of the cell state are output as the hidden state, based on the current input and the previous hidden state[1][4][5].
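For a single time step t, these components are commonly written as the following equations. This is the widely used formulation with a forget gate and no peephole connections; the symbols W (input weights), U (recurrent weights), and b (biases) are notation chosen here for illustration rather than taken from the sources above. σ is the logistic sigmoid and ⊙ denotes elementwise multiplication.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{forget gate}\\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{input gate}\\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{output gate}\\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{candidate values}\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{cell state}\\
h_t &= o_t \odot \tanh(c_t) && \text{hidden state (output)}
\end{aligned}
$$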

Working Mechanism

The LSTM network maintains a cell state that acts like a conveyor belt: information can flow along it largely unchanged, altered only by elementwise operations that the gates control. The gates regulate this flow of information:

- Forget Gate: Uses a sigmoid function, whose outputs lie between 0 (discard completely) and 1 (keep completely), to decide which parts of the cell state to forget.

- Input Gate: Uses a sigmoid function to decide which values to update and a tanh layer to create new candidate values.

- Output Gate: Uses a sigmoid function to decide which parts of the cell state to output; the cell state is passed through a tanh function (scaling it to between -1 and 1) and multiplied by the gate's output to produce the hidden state[1][2][4].
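The gate mechanism above can be sketched as a single forward step in plain Python. This is a minimal, unoptimized illustration assuming the standard formulation without peephole connections; the helper names `affine`, `init_params`, and `lstm_step`, the parameter layout, and the small random initialization are hypothetical choices for this example, not an API from the cited sources. Real projects would normally use a framework's built-in LSTM layer instead.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, U, b, x, h):
    """Compute W·x + U·h + b for one gate (W: hidden x input, U: hidden x hidden)."""
    return [
        sum(w * xj for w, xj in zip(W[k], x))
        + sum(u * hj for u, hj in zip(U[k], h))
        + b[k]
        for k in range(len(b))
    ]


def init_params(input_size, hidden_size, seed=0):
    """Hypothetical helper: small random weights and zero biases for each gate."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        gate: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
        for gate in ("f", "i", "o", "c")
    }


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; returns the new hidden state h and cell state c."""
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]    # forget gate: 0 = discard, 1 = keep
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]    # input gate: which values to update
    g = [math.tanh(z) for z in affine(*params["c"], x, h_prev)]  # candidate values in (-1, 1)
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]    # output gate
    # Cell state: keep part of the old state, add part of the candidates.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # Hidden state: tanh-squashed cell state, filtered by the output gate.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


# Usage: run a hypothetical 3-step sequence of 2-dimensional inputs.
params = init_params(input_size=2, hidden_size=3)
h, c = [0.0] * 3, [0.0] * 3
for x in [[0.5, -1.0], [0.1, 0.3], [0.9, 0.0]]:
    h, c = lstm_step(x, h, c, params)
print(h, c)

# Conveyor-belt check: force the forget gate open (large positive bias) and the
# input gate shut (large negative bias); the cell state then passes through.
W, U, _ = params["f"]
params["f"] = (W, U, [50.0] * 3)
W, U, _ = params["i"]
params["i"] = (W, U, [-50.0] * 3)
c_prev = [0.7, -0.2, 0.4]
_, c_kept = lstm_step([1.0, 2.0], [0.0] * 3, c_prev, params)
print(c_kept)  # approximately [0.7, -0.2, 0.4]
```

Because the output gate lies in (0, 1) and tanh lies in (-1, 1), every hidden-state value stays strictly between -1 and 1, while the cell state itself is unbounded and can carry information across many steps.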

Applications

LSTMs are widely used in various domains due to their ability to learn long-term dependencies:

- Natural Language Processing (NLP): Machine translation, language modeling, text summarization.

- Speech Recognition: Converting speech to text, command recognition.

- Time Series Prediction: Forecasting future values based on past data.

- Video Analysis: Understanding actions and objects in video frames.

- Handwriting Recognition: Recognizing handwritten text from images[1][4][5].
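For time series prediction, a series is typically reframed as supervised pairs before training an LSTM: a window of past values forms the input sequence and a later value is the target. The sketch below assumes a univariate series and a fixed forecast horizon; `make_windows` and the sample readings are hypothetical.

```python
def make_windows(series, window, horizon=1):
    """Split a 1-D series into (input window, target) pairs.

    Each input is `window` consecutive values; the target is the value
    `horizon` steps after the end of that window.
    """
    if window < 1 or horizon < 1:
        raise ValueError("window and horizon must be positive")
    inputs, targets = [], []
    for start in range(len(series) - window - horizon + 1):
        inputs.append(series[start:start + window])
        targets.append(series[start + window + horizon - 1])
    return inputs, targets


# Hypothetical hourly temperature readings.
readings = [20.1, 20.4, 20.9, 21.3, 21.0, 20.7]
X, y = make_windows(readings, window=3)
print(X[0], y[0])  # [20.1, 20.4, 20.9] 21.3
print(len(X))      # 3
```

Each window would then be fed to the LSTM one value per time step, and the final hidden state mapped to a prediction of the target.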

Advantages

LSTMs address the limitations of traditional RNNs by:

- Mitigating the vanishing gradient problem through near-constant error flow along the cell state of each memory cell.

- Maintaining long-term dependencies by selectively retaining and discarding information.

- Handling a wide variety of sequential data tasks[1][2][5].
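The constant-error-flow advantage can be illustrated with a deliberately simplified scalar model. Along the cell-state path, the derivative of c_t with respect to c_(t-1) is just the forget gate value f_t (when the gates' own dependence on earlier states is ignored). In a vanilla tanh RNN, the per-step factor is w(1 - tanh²(a_t)). Multiplying these factors over many steps shows why the RNN gradient shrinks toward zero while the LSTM path need not. The weight 0.9, pre-activation 0.5, and forget-gate value 0.99 are arbitrary illustrative numbers, not values from the sources.

```python
import math


def rnn_gradient(w, pre_activations):
    """Product over steps of d h_t / d h_{t-1} = w * (1 - tanh(a_t)**2) for a scalar tanh RNN."""
    grad = 1.0
    for a in pre_activations:
        grad *= w * (1.0 - math.tanh(a) ** 2)
    return grad


def lstm_cell_gradient(forget_gates):
    """Product over steps of d c_t / d c_{t-1} = f_t along the cell-state path (gates held fixed)."""
    grad = 1.0
    for f in forget_gates:
        grad *= f
    return grad


T = 50  # number of time steps the error is propagated back through
rnn = rnn_gradient(w=0.9, pre_activations=[0.5] * T)
lstm = lstm_cell_gradient([0.99] * T)
print(f"vanilla RNN: {rnn:.1e}")   # on the order of 1e-8
print(f"LSTM cell:   {lstm:.3f}")  # roughly 0.6
```

The simplification matters: in a full LSTM the gates also depend on the previous hidden state, so gradients can still decay if the forget gate learns values well below 1. The cell-state path only makes long-range error flow possible; it does not guarantee it.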

Overall, LSTMs represent a significant advancement in the field of deep learning, providing robust solutions for complex sequence prediction problems.

References

[1] GeeksforGeeks – What is LSTM – Long Short Term Memory?

[2] Machine Learning Mastery – A Gentle Introduction to Long Short-Term Memory Networks by the Experts

[4] Simplilearn – Introduction to Long Short-Term Memory(LSTM)

[5] Wikipedia – Long short-term memory

Further Reading

1. What is LSTM – Long Short Term Memory? – GeeksforGeeks

2. A Gentle Introduction to Long Short-Term Memory Networks by the Experts – MachineLearningMastery.com

3. Understanding LSTM Networks — colah’s blog

4. Introduction to Long Short-Term Memory(LSTM) | Simplilearn

5. Long short-term memory – Wikipedia

Description:

Handling long-term dependencies in sequential data.

IoT Scenarios:

Predictive analytics, speech recognition, and complex time-series prediction.

- Time-Series Forecasting: Predicting future sensor readings or usage patterns.

- Anomaly Detection: Identifying unusual patterns in time-series data from various sensors.

- Health Monitoring: Analyzing sequential health data for early detection of conditions.

- Supply Chain Management: Forecasting inventory needs and logistics based on historical data.
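For the anomaly-detection scenario, one common approach (assumed here, not described in the sources above) is to compare each sensor reading with an LSTM forecast and flag readings whose forecast error is unusually large compared with errors observed under normal operation. The sketch takes the predictions as given, so any trained forecaster could supply them; the function names, the threshold rule (k population standard deviations of calibration residuals), and all numbers are hypothetical.

```python
import statistics


def fit_threshold(actual, predicted, k=3.0):
    """Estimate typical forecast error from data assumed to be normal.

    Returns (mean residual, threshold), where threshold = k * population std of residuals.
    """
    residuals = [a - p for a, p in zip(actual, predicted)]
    return statistics.mean(residuals), k * statistics.pstdev(residuals)


def flag_anomalies(actual, predicted, mean_residual, threshold):
    """Return indices where the residual deviates from the mean by more than the threshold."""
    return [
        idx
        for idx, (a, p) in enumerate(zip(actual, predicted))
        if abs((a - p) - mean_residual) > threshold
    ]


# Calibration window: hypothetical sensor readings and forecasts under normal operation.
mu, thr = fit_threshold([20.0, 20.5, 21.0, 20.8], [20.1, 20.4, 21.1, 20.7])

# New readings: the second one deviates sharply from its forecast.
print(flag_anomalies([21.2, 25.0, 20.9], [21.1, 21.0, 21.0], mu, thr))  # [1]
```

Fitting the threshold on a separate calibration window keeps a single large outlier from inflating the standard deviation it is judged against.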