Clustering algorithms are essential tools in data analysis, particularly in the realm of unsupervised learning. They group similar data points into clusters, allowing for the identification of patterns and relationships within datasets without the need for labeled data.

Types of Clustering Algorithms

1. Centroid-based Clustering (Partitioning Methods)

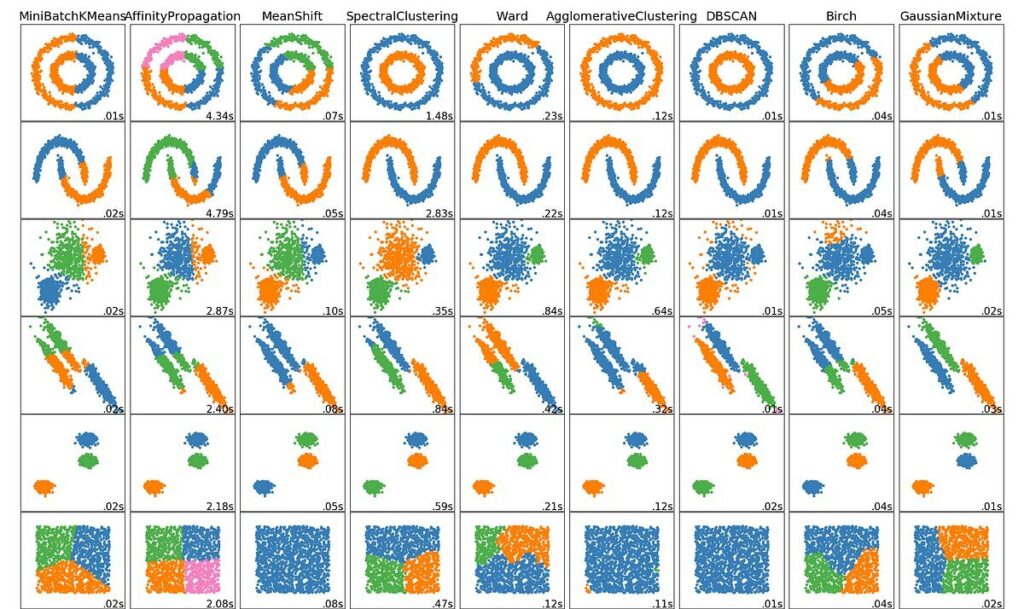

Centroid-based clustering is one of the simplest and most widely used families of clustering techniques; K-means and K-medoids are its best-known members. It partitions data into a predefined number of clusters by assigning each data point to the nearest cluster center, typically measured with Euclidean or Manhattan distance. In K-means the center is the mean of the cluster's points, while in K-medoids it is an actual data point (the medoid). The main limitation is that the number of clusters must be specified in advance, which can produce a poor partition if the chosen number does not reflect the underlying structure of the data[1][3].
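To make the assign-then-update loop concrete, here is a minimal from-scratch sketch of K-means (Lloyd's algorithm) using only the Python standard library. The function name `kmeans`, its parameters, and the sample points are illustrative assumptions rather than anything taken from a particular library, and the random initialization is the simplest possible choice.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Minimal K-means sketch. Returns (centroids, labels)."""
    rng = random.Random(seed)
    # Initialise by picking k distinct input points as starting centroids.
    centroids = rng.sample(points, k)
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean).
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        updated = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                updated.append(
                    tuple(sum(axis) / len(members) for axis in zip(*members))
                )
            else:
                # An empty cluster keeps its previous centroid.
                updated.append(centroids[c])
        if updated == centroids:
            break  # centroids stopped moving, so the assignment is stable
        centroids = updated
    return centroids, labels


# Hypothetical sample data: two well-separated groups of 2-D points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
          (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, labels = kmeans(points, k=2)
print(labels)
print(centroids)
```

Note that `k` has to be supplied by the caller, which is exactly the limitation described above: the sketch will return two clusters for this data whether or not two is the right number.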

2. Density-based Clustering

Density-based clustering methods, such as DBSCAN (Density-Based Spatial Clustering of Applications with Noise) and OPTICS (Ordering Points To Identify the Clustering Structure), group data points according to how densely they are packed in the feature space. These algorithms are particularly effective at identifying clusters of arbitrary shape, and they can label points in sparse regions as noise rather than forcing them into a cluster. Unlike centroid-based methods, density-based algorithms do not require the number of clusters to be specified beforehand; they rely instead on density parameters, such as DBSCAN's neighborhood radius and minimum number of points[1][4].
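The density idea can be sketched in a few lines. The following is a simplified, quadratic-time illustration of DBSCAN in plain Python, not a production implementation; the names `dbscan`, `eps`, and `min_samples`, the convention that a point counts as its own neighbor, and the sample data are all assumptions made for this example.

```python
import math

NOISE = -1


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN sketch. Returns one label per point; -1 means noise."""

    def neighbours(i):
        # All points within eps of point i (including i itself).
        return [j for j, q in enumerate(points)
                if math.dist(points[i], q) <= eps]

    labels = [None] * len(points)  # None = not visited yet
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            # Not a core point; may still become a border point later.
            labels[i] = NOISE
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable, but not core
            if labels[j] is not None:
                continue  # already assigned to a cluster
            labels[j] = cluster
            nbrs = neighbours(j)
            if len(nbrs) >= min_samples:
                queue.extend(nbrs)  # j is a core point, so keep expanding
    return labels


# Hypothetical sample data: two dense groups and one isolated point.
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (1.1, 1.2),
          (5.0, 5.0), (5.1, 4.9), (4.9, 5.2), (5.2, 5.1),
          (9.0, 0.0)]
print(dbscan(points, eps=0.5, min_samples=3))
```

No cluster count is passed in: the number of clusters falls out of `eps` and `min_samples`, and the isolated point is reported as noise instead of being attached to the nearest group.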

3. Hierarchical Clustering

Hierarchical clustering creates a tree-like structure of clusters, which can be either agglomerative (bottom-up) or divisive (top-down). In agglomerative clustering, each data point starts in its own cluster, and pairs of clusters are merged as one moves up the hierarchy. This method provides a visual representation of data relationships through dendrograms, which can be cut at different levels to obtain varying numbers of clusters. Hierarchical clustering is advantageous for its flexibility in cluster shape and size but can be computationally intensive for large datasets[2][4].
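As a rough illustration of the bottom-up process, the sketch below implements a naive agglomerative procedure in plain Python. It assumes single linkage (the distance between two clusters is the distance between their closest pair of points), which is only one of several possible linkage criteria; the function name `agglomerative`, its return format, and the sample data are hypothetical choices for this example.

```python
import math


def agglomerative(points, n_clusters):
    """Naive single-linkage sketch. Returns (clusters, merges).

    clusters: lists of point indices remaining after merging stops.
    merges:   (members_a, members_b, distance) tuples in merge order,
              i.e. the information a dendrogram would display.
    """
    clusters = [[i] for i in range(len(points))]  # every point starts alone
    merges = []

    def linkage(a, b):
        # Single linkage: closest pair of points across the two clusters.
        return min(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > max(n_clusters, 1):
        # Find the pair of clusters with the smallest linkage distance.
        dist, a, b = min(
            (linkage(clusters[a], clusters[b]), a, b)
            for a in range(len(clusters))
            for b in range(a + 1, len(clusters))
        )
        merges.append((clusters[a], clusters[b], dist))
        merged = clusters[a] + clusters[b]
        clusters = [c for idx, c in enumerate(clusters)
                    if idx not in (a, b)] + [merged]
    return clusters, merges


# Hypothetical sample data: two well-separated groups of 2-D points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
          (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
clusters, merges = agglomerative(points, n_clusters=2)
print(clusters)
for left, right, dist in merges:
    print(left, right, round(dist, 3))
```

Stopping at `n_clusters` corresponds to cutting the dendrogram at one level; the `merges` list records the full merge history up to that point. The repeated all-pairs search is also why this naive approach becomes expensive on large datasets.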

Applications of Clustering

Clustering algorithms have a wide range of applications across various fields:

- Market Segmentation: Businesses utilize clustering to identify distinct customer segments for targeted marketing strategies.

- Image Analysis: Clustering aids in grouping similar images or identifying patterns in visual data.

- Anomaly Detection: In cybersecurity and fraud detection, clustering helps identify unusual patterns that deviate from expected behavior.

- Social Network Analysis: Clustering algorithms can analyze social networks to identify communities or influential individuals within a network[1][3][5].

In conclusion, clustering algorithms are powerful tools for data analysis, enabling the discovery of meaningful patterns in large and complex datasets. Centroid-based, density-based, and hierarchical methods each suit different data shapes and application needs, which makes clustering valuable in fields ranging from marketing to cybersecurity.

Further Reading

1. Clustering in Machine Learning – GeeksforGeeks

2. Introduction To Clustering Algorithms | Towards Data Science

3. Clustering in Machine Learning: 5 Essential Clustering Algorithms | DataCamp

4. An Overview of Clustering Algorithms | Oxford Protein Informatics Group

5. Overview of Clustering Algorithms | by Srivignesh Rajan | Towards Data Science

Description:

Grouping similar data points into clusters.

IoT Scenarios:

Data segmentation, anomaly detection, pattern recognition, and resource optimization.

- Data Segmentation: Grouping similar sensor data for analysis and insights.

- Anomaly Detection: Identifying outliers and anomalies within clusters of data.

- Pattern Recognition: Detecting patterns and trends in large datasets.

- Resource Optimization: Clustering data to optimize resource allocation and management.