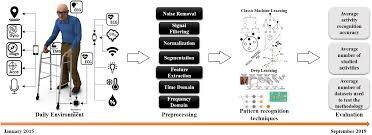

Activity recognition is a crucial area in artificial intelligence, focusing on identifying and classifying human activities using various data sources, such as wearable sensors and video feeds. This field has seen significant advancements through the application of deep learning models, including Convolutional Neural Networks (CNNs), Long Short-Term Memory (LSTM) networks, and Transformer-based models.

CNN for Activity Recognition

Convolutional Neural Networks (CNNs) are widely used for activity recognition because they learn features directly from raw data instead of relying on hand-crafted ones. They are particularly effective on grid-structured inputs, such as image frames or fixed-length windows of multi-channel sensor signals, where convolutional filters capture local patterns. For instance, a study applied a CNN model to wearable sensor data to classify human activities and reported high accuracy by exploiting the local structure of the data [1].
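To make the idea of a convolutional filter concrete, here is a minimal sketch in plain Python. It is not taken from the cited study: the accelerometer window and the `rising_edge` filter are made-up values, and a real CNN would learn many filters during training rather than use a hand-picked one.

```python
def conv1d(signal, kernel):
    """Valid-mode 1D convolution as used in CNN layers (no kernel flip)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Zero out negative filter responses."""
    return [max(0.0, v) for v in values]


# Hypothetical single-axis accelerometer window (arbitrary units).
window = [0.0, 0.1, 0.0, 1.0, 2.0, 1.0, 0.0, 0.1, 0.0]

# Hand-picked filter that responds to a rising signal (assumption for illustration).
rising_edge = [-1.0, 0.0, 1.0]

feature_map = relu(conv1d(window, rising_edge))
pooled = max(feature_map)  # global max pooling: strongest response in the window

print(feature_map)
print(pooled)
```

The filter slides along the window and responds most strongly where the signal rises quickly, which is the kind of local pattern a trained CNN layer picks up on its own.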

LSTM for Activity Recognition

Long Short-Term Memory (LSTM) networks are a type of Recurrent Neural Network (RNN) designed to capture temporal dependencies in sequential data. They suit activity recognition tasks built on time-series data, such as accelerometer readings from wearable devices. Their gated cell state lets them retain information over long spans and makes them less prone to the vanishing gradient problem than traditional RNNs. For example, LSTM networks have been used to estimate the intensity of human activities, where they were reported to capture the temporal dynamics of the data well [1].
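The gating mechanism can be sketched with a single-unit LSTM cell in plain Python. This is only an illustration under simplifying assumptions: the input and hidden state are scalars, and the weights in `params` are arbitrary hypothetical values rather than trained ones.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM cell with scalar input.

    params maps each gate name to (input weight, recurrent weight, bias).
    """
    pre = {}
    for name in ("f", "i", "o", "g"):
        w_x, w_h, b = params[name]
        pre[name] = w_x * x + w_h * h_prev + b
    f = sigmoid(pre["f"])    # forget gate: how much of the old cell state to keep
    i = sigmoid(pre["i"])    # input gate: how much new information to write
    o = sigmoid(pre["o"])    # output gate: how much of the cell state to expose
    g = math.tanh(pre["g"])  # candidate value to write
    c = f * c_prev + i * g   # additive update helps gradients survive long spans
    h = o * math.tanh(c)
    return h, c


# Hypothetical, untrained weights chosen only for illustration.
params = {
    "f": (0.5, 0.1, 1.0),
    "i": (0.8, 0.2, 0.0),
    "o": (0.6, 0.1, 0.0),
    "g": (1.0, 0.3, 0.0),
}

readings = [0.1, 0.4, 0.9, 0.3]  # made-up accelerometer magnitudes
h, c = 0.0, 0.0
states = []
for x in readings:
    h, c = lstm_step(x, h, c, params)
    states.append(h)

print(states)
```

When the forget gate is close to 1 and the input gate close to 0, the cell state passes through a step almost unchanged, which is how the cell carries information across many time steps.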

CNN-LSTM Hybrid Models

Combining CNNs and LSTMs into a hybrid architecture leverages the strengths of both models. The CNN layers first extract local features from the input data, and the resulting feature sequence is fed into LSTM layers that capture temporal dependencies. This approach has been reported to improve activity recognition performance. One proposed CNN-LSTM architecture handles both the spatial and temporal aspects of wearable sensor data and achieves higher recognition accuracy [1], and a related model adds self-attention on top of a deep CNN-LSTM [3].
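The data flow of such a hybrid can be sketched in plain Python. This is a deliberately tiny illustration, not the architecture from [1] or [3]: it assumes one hand-picked convolutional filter, a single-unit LSTM with hypothetical weights, and a made-up sensor window split into fixed-length segments.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def conv_feature(segment, kernel):
    """Conv1D (valid mode) + ReLU + global max pooling -> one scalar feature."""
    k = len(kernel)
    responses = [
        sum(segment[i + j] * kernel[j] for j in range(k))
        for i in range(len(segment) - k + 1)
    ]
    return max(0.0, max(responses))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a single-unit LSTM cell with scalar input."""
    pre = {n: w_x * x + w_h * h_prev + b for n, (w_x, w_h, b) in params.items()}
    f, i, o = sigmoid(pre["f"]), sigmoid(pre["i"]), sigmoid(pre["o"])
    c = f * c_prev + i * math.tanh(pre["g"])
    return o * math.tanh(c), c


def cnn_lstm_forward(window, segment_len, kernel, lstm_params):
    """CNN stage summarizes each segment; LSTM stage reads the summaries in order."""
    starts = range(0, len(window) - segment_len + 1, segment_len)
    h, c = 0.0, 0.0
    features = []
    for s in starts:
        feat = conv_feature(window[s:s + segment_len], kernel)
        features.append(feat)
        h, c = lstm_step(feat, h, c, lstm_params)
    return features, h


# Hypothetical inputs and untrained weights, chosen only for illustration.
window = [0.0, 0.2, 0.1, 0.0, 0.0, 1.0, 2.0, 1.0, 0.1, 0.0, 0.1, 0.0]
kernel = [-1.0, 1.0]
lstm_params = {
    "f": (0.5, 0.1, 1.0),
    "i": (0.8, 0.2, 0.0),
    "o": (0.6, 0.1, 0.0),
    "g": (1.0, 0.3, 0.0),
}

features, final_h = cnn_lstm_forward(window, 4, kernel, lstm_params)
print(features, final_h)
```

In a full model the final hidden state would feed a classification layer, and both stages would be trained jointly so that the filters produce features the recurrent layers can use.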

Transformer-based Models

Transformer models, originally designed for natural language processing, have been adapted for activity recognition because they handle long-range dependencies and process all positions of a sequence in parallel. Transformers use self-attention to weigh the importance of each part of the input relative to every other part, which makes them well suited to sequential data. Some studies report that Transformer-based models can outperform CNN and LSTM models in both speed and accuracy. For example, one comparison of Transformer, CNN, and LSTM models for activity recognition found that Transformers offered better scalability and performance [2][5].
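Self-attention itself is compact enough to sketch in plain Python. The version below is a simplification: it assumes identity query, key, and value projections (a real Transformer learns separate projection matrices and uses multiple heads), and the three-step input sequence is made up for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(sequence):
    """Scaled dot-product self-attention with identity Q/K/V projections."""
    d = len(sequence[0])
    scale = math.sqrt(d)
    outputs, weights = [], []
    for query in sequence:
        # Compare this time step with every time step, including itself.
        scores = [dot(query, key) / scale for key in sequence]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all time steps.
        outputs.append(
            [sum(w[t] * sequence[t][k] for t in range(len(sequence))) for k in range(d)]
        )
    return outputs, weights


# Hypothetical sequence of three time steps with two features each.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]

outputs, weights = self_attention(sequence)
for row in weights:
    print(row)
```

Each row of `weights` sums to 1 and shows how strongly one time step attends to the others. Because every row is computed independently of the rest, all time steps can be processed in parallel, unlike the step-by-step recurrence of an LSTM.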

Conclusion

Deep learning models such as CNNs, LSTMs, and Transformers have significantly advanced the field of activity recognition. Each offers distinct advantages: CNNs excel at extracting features from spatial data, LSTMs capture temporal dependencies, and Transformers model long-range relationships in sequential data through self-attention. Hybrid models that combine these architectures can further improve recognition accuracy, which makes them valuable for applications ranging from healthcare to intelligent surveillance.

References:

– [1] Koşar Enes, Barshan Billur. “A new CNN-LSTM architecture for activity recognition employing wearable motion sensor data: Enabling diverse feature extraction.” ScienceDirect, 2023.

– [2] ResearchGate. “Accuracy comparison of CNN, LSTM, and Transformer for activity recognition using IMU and visual markers.”

– [3] NCBI. “Deep CNN-LSTM With Self-Attention Model for Human Activity Recognition.”

– [5] K-REx. “Transformer neural networks for human activity recognition.”

Further Reading

1. https://www.sciencedirect.com/science/article/abs/pii/S0952197623007133

2. Accuracy Comparison of CNN, LSTM, and Transformer for Activity Recognition Using IMU and Visual Markers

3. Deep CNN-LSTM With Self-Attention Model for Human Activity Recognition Using Wearable Sensor

4. https://ieeexplore.ieee.org/iel7/6287639/10005208/10261772.pdf

5. Transformer neural networks for human activity recognition